…here’s Tom with the Weather.

That right there is comedian/philosopher Bill Hicks, sadly no longer with us. One imagines he would be pleased and completely unsurprised to learn that serious scientific minds are considering and actually finding support for the theory that our reality could be a kind of simulation. That means, for example, a string of daisy-chained IBM Super-Deep-Blue Gene Quantum Watson computers from 2042 could be running a History of the Universe program, and depending on your solipsistic preferences, either you are or we are the character(s).

It’s been in the news a lot of late, but — no way, right?

Because dude, I’m totally real

Despite being utterly unable to even begin thinking about what “real” even means, the everyday average rational person would probably consign this to the sovereign realm of unemployable philosophy majors, or file it under the Whatever, Who Cares? or Oh, That’s Interesting, I Gotta Go Now! categories. Okay, fine, but on the other side of the intellectual coin, thanks to recent technological advances, it’s actually being seriously considered by serious people using big words they learned in endless college whilst collecting letters after their names and doin’ research and writin’ and gettin’ association memberships and such.

So… why now?

Well, basically, it’s getting hard to ignore.

It’s not a new topic; philosophy and religion have been hammering it since, like, thought happened. But now some actual real science is stirring things up. And it’s complicated, occasionally abstruse stuff: theories are spread out across various disciplines, and no one’s really keeping a decent flowchart.

So, what follows is an effort to encapsulate these ideas, and that’s daunting: it’s incredibly difficult to focus on writing when you’re wondering if you really have fingers or eyes. Along with links to some articles with links to some papers, what follows is Anthrobotic’s CliffsNotes on the intersection of physics, computer science, probability, and evidence for/against reality being real (and how that all brings us back to, well, God).

You know, light fare.

First — Maybe we know how the universe works: Fantastically simplified, as our understanding deepens it appears more and more that, in a manner of speaking, the universe sort of “computes” itself according to the principles of quantum mechanics. Right now, humanity’s fastest and sexiest supercomputers can simulate only extremely tiny fractions of the natural universe as we understand it (as opposed to the macro-scale, inferential Bolshoi Simulation). But of course we all know the brute power of our computational technology is increasing dramatically like every few seconds, and even awesomer, we are learning how to build quantum computers, machines that calculate using the underlying principles of existence in our universe. That could thrust the game into superdrive. So, given ever-accelerating computing power, and given that we can already simulate tiny fractions of the universe, you logically have to consider the possibility: if the universe works in a way we can exactly simulate, and we give it a shot, then relatively speaking what we make ceases to be a simulation, i.e., we’ve effectively created a new reality, a new universe (ummm… God?). So the question is: how do we know we haven’t already done that? Or, stated otherwise: what if our eventual ability to create perfect reality simulations with computers is itself a simulation being run by a computer? Well, we can’t answer this. We can’t know. Unless…

[New Scientist’s Special Reality Issue]

[D-Wave’s Quantum Computer]

[Possible Large-scale Quantum Computing]

Second — Maybe we see it working: The universe seems to be metaphorically “pixelated.” This means that even though it’s a 50 billion trillion gajillion megapixel JPEG, if we juice the zoom and drill down farther and farther and farther, we’ll eventually see a bunch of discrete chunks of matter, or quanta, as the kids call them; these are the so-called pixels of the universe. Additionally, a team of lab coats at the University of Bonn think they might have a workable theory describing the underlying lattice, the existential rebar in the foundation of observable reality (upon which the “pixels” would be arranged). All this implies, in a way, that the universe is both designed and finite (uh-oh, getting closer to the God issue). Even at ferociously complex levels, something finite can be measured and calculated and can, with sufficiently hardcore computers, be simulated very, very well. This guy Rich Terrile, a pretty serious NASA scientist, cites the pixelation thingy and poses a video game analogy: think of any first-person shooter. You cannot immerse your perspective in the entirety of the game; you can only interact with what is in your bubble of perception, and everywhere you go there is an underlying structure to the environment. Kinda sounds like, you know, life, right? So, what if the human brain is really just the greatest virtual reality engine ever conceived, and your character, your life, is merely a program wandering around a massively open game map, playing… well, you?

[Lattice Theory from the U of Bonn]

[NASA guy Rich Terrile at Vice]

[Kurzweil AI’s Technical Take on Terrile]

Third — Turns out there’s a reasonable likelihood: While the above discussions on the physical properties of matter and our ability to one day copy & paste the universe are intriguing, it also turns out there’s a much simpler and more straightforward issue to consider: an annoyingly simplistic yet valid thought exercise posited by Swedish philosopher/economist/futurist Nick Bostrom, a dude way smarter than most humans. Basically, he says we’ve got three options: 1. Civilizations destroy themselves before reaching the level of technological prowess necessary to simulate the universe; 2. Advanced civilizations couldn’t give two shits about simulating our primitive minds; or 3. Reality is a simulation. Sure, a decent probability, but it sounds way oversimplified, right?

Well, go read it. Doing so might ruin your day, JSYK.

[Summary of Bostrom’s Simulation Hypothesis]
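For the numerically inclined, here’s a rough sketch of the arithmetic behind the trilemma, assuming the simplified fraction from Bostrom’s 2003 paper “Are You Living in a Computer Simulation?”. Let f_p be the fraction of human-level civilizations that make it to a “posthuman” stage, f_i the fraction of those that bother running ancestor-simulations, and n_i the average number of simulations each interested civilization runs. The fraction of human-type minds that are simulated then works out to x / (x + 1), where x = f_p · f_i · n_i. The function and variable names below are ours, not Bostrom’s, and the input numbers are made up purely for illustration.

```python
def simulated_fraction(f_p: float, f_i: float, n_i: float) -> float:
    """Fraction of human-type observers living in an ancestor-simulation.

    f_p: fraction of human-level civilizations that reach a posthuman stage
    f_i: fraction of posthuman civilizations interested in running ancestor-simulations
    n_i: average number of ancestor-simulations run by an interested civilization
    """
    # Average number of simulated histories per real one (Bostrom assumes a
    # simulated history holds roughly as many people as a real one).
    x = f_p * f_i * n_i
    return x / (x + 1)


# Illustrative (made-up) inputs, one per horn of the trilemma.
scenarios = {
    "1. everyone self-destructs": (1e-9, 0.5, 1_000),
    "2. nobody cares to simulate": (0.5, 1e-9, 1_000),
    "3. some do, and a lot": (0.1, 0.1, 1_000_000),
}

for label, (f_p, f_i, n_i) in scenarios.items():
    print(f"{label}: f_sim = {simulated_fraction(f_p, f_i, n_i):.6f}")
```

Options 1 and 2 correspond to f_p or f_i being vanishingly small; if neither is, a large enough n_i drags the result toward 1, which is option 3.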

Lastly — Data against is lacking: Any idea how much evidence or objective justification we have for the standard, accepted-without-question notion that reality is like, you know… real, or whatever? None. Zero. Of course the absence of evidence proves nothing, but given that we do have decent theories on how/why simulation theory is feasible, it follows that blithely accepting that reality is not a simulation is an intrinsically more radical position. Why would a thinking being think that? Just because they know it’s true? Believing 100% without question that you are a verifiably physical, corporeal, technology-wielding carbon-based organic primate is a massive leap of completely unjustified faith.

Oh, Jesus. So to speak.

If we really consider simulation theory, we must of course ask: who built the first one? And was it even an original? Is it really just turtles all the way down, Professor Hawking?

Okay, okay — that means it’s God time now

Now let’s see, what’s that other thing in human life that, based on a wild leap of faith, gets an equally monumental evidentiary pass? Well, proving or disproving the existence of God is effectively the same quandary posed by simulation theory, but with one caveat: we actually do have some decent scientific observations and theories and probabilities supporting simulation theory. That whole God phenomenon is pretty much hearsay, anecdotal at best. However, very interestingly, rather than negating it, simulation theory actually represents a kind of back-door validation of creationism. Here’s the simple logic:

If humans can simulate a universe, humans are its creator.

Accept the fact that linear time is a construct.

The process repeats infinitely.

We’ll build the next one.

The loop is closed.

God is us.

Heretical speculation on iteration

Ever wonder why older polytheistic religions had the gods just kinda setting guidelines for behavior, without necessarily demanding the love and complete & total devotion of humans? Maybe those universes were 1st-gen or beta products. You know: just as it used to take a team of geeks to run the building-sized ENIAC, the first universe simulations required a whole host of creators who could make some general rules but just couldn’t manage every single little detail.

Now, the newer religions tend to be monotheistic, and god wants you to love him and only him and no one else and dedicate your life to him. But just make sure to follow his rules, and take comfort that you’re right and everyone else is completely hosed and going to hell. The modern versions of god, both omnipotent and omniscient, seem more like super-lonely cosmically powerful cat ladies who will delete your ass if you don’t behave yourself and love them in just the right way. So, the newer universes are probably run as a background app on the iPhone 26, and managed by… individuals. Perhaps individuals of questionable character.

The home game:

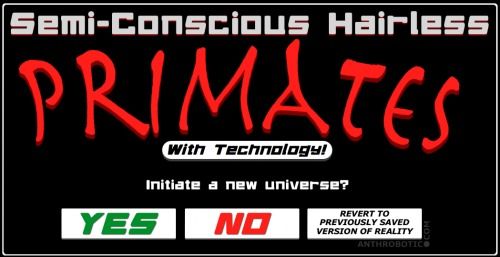

Latest title for the 2042 XBOX-Watson³ Quantum PlayStation Cube:*

Crappy 1993 graphic design simulation: 100% Effective!

\*Manufacturer assumes no responsibility for inherently emergent anomalies, useless inventions by game characters, or evolutionary cul-de-sacs including but not limited to: the duck-billed platypus, hippies, meat in a can, reality TV, the TSA, mayonnaise, Sony VAIO products, natto, fundamentalist religious idiots, people who don’t like homos, singers under 21, hangovers, coffee made from cat shit, passionfruit iced tea, and the Pacific garbage patch.

And hey, if true, it’s not exactly bad news

All these ideas are merely hypotheses, and for most humans the practical or theoretical proof or disproof would probably get the same indifferent shrug. For those of us who like to rub a few brain cells together from time to time, attempting both to understand the fundamental nature of our reality/simulation and to guess at whether we too might someday be capable of simulating ourselves, well, these are some goddamn profound ideas.

So, no need for hand-wringing; let’s get on with our character arcs and/or real lives. While simulation theory definitely provokes reflexive revulsion, “just a simulation” isn’t necessarily pejorative. Sure, judged against the current state of our own computer simulations and A.I. constructs, the label is rather insulting. But if we truly are living in a simulation, you gotta give it up to the creator(s), because it’s a goddamn amazing technological achievement.

Addendum: if this still isn’t sinking in, the brilliant Dinosaur Comics might do a better job explaining:

(This post was originally published, I think, like two days ago at technosnark hub www.anthrobotic.com.)