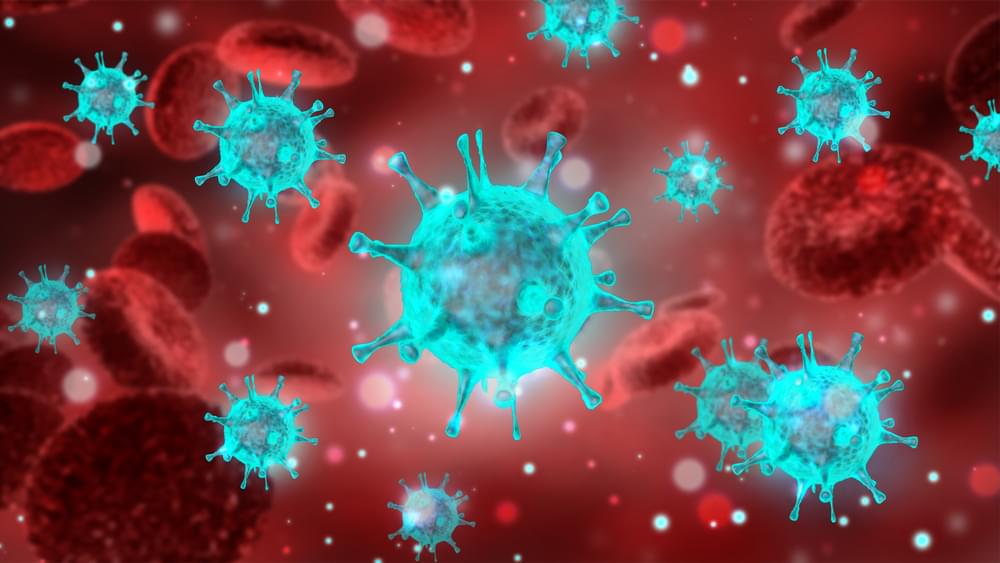

I am happy to say that my recently published computational COVID-19 research has been featured in a major news article by HPCwire! I led this research as CTO of Conduit. My team utilized one of the world’s top supercomputers (Frontera) to study the mechanisms by which the coronavirus’s M proteins and E proteins facilitate budding, an understudied part of the SARS-CoV-2 life cycle. Our results may provide the foundation for new ways of designing antiviral treatments which interfere with budding. Thank you to Ryan Robinson (Conduit’s CEO) and my computational team: Ankush Singhal, Shafat M., David Hill, Jr., Tamer Elkholy, Kayode Ezike, and Ricky Williams.

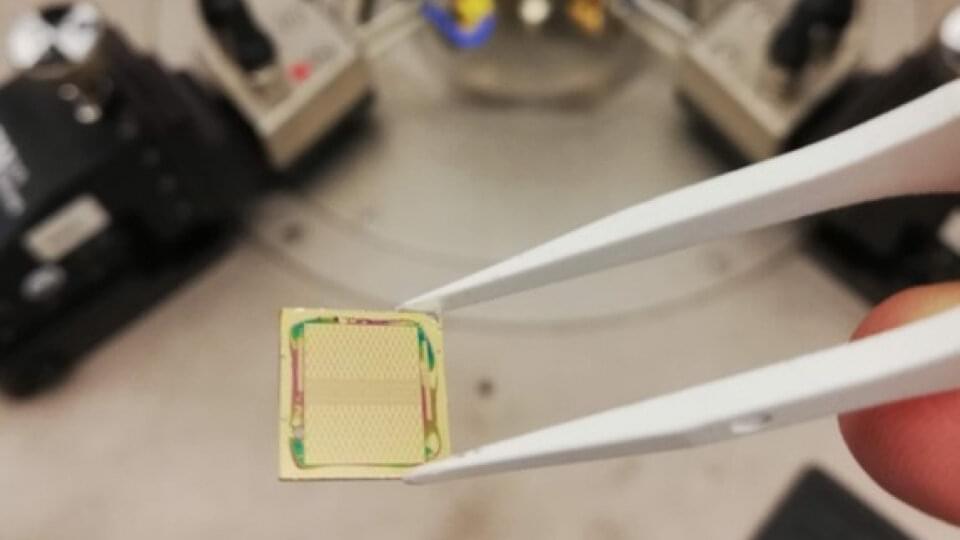

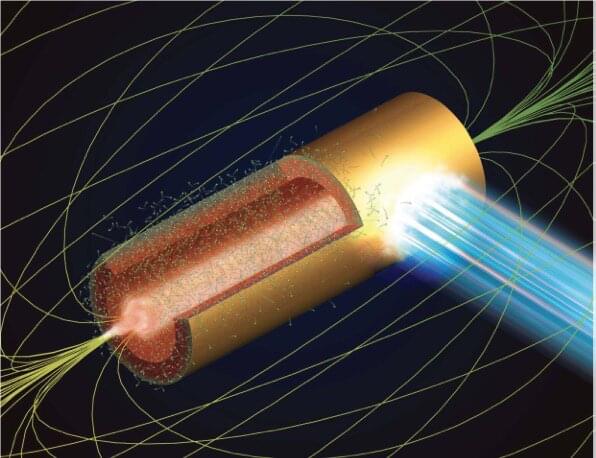

Conduit, created by MIT graduate (and current CEO) Ryan Robinson, was founded in 2017. But it may not have been until a few years later, when the pandemic started, that Conduit found its true calling. While Conduit's commercial division is busy developing a Covid-19 test called nanoSPLASH, its nonprofit arm was granted access to one of the most powerful supercomputers in the world, Frontera, at the Texas Advanced Computing Center (TACC), to model the budding process of SARS-CoV-2.

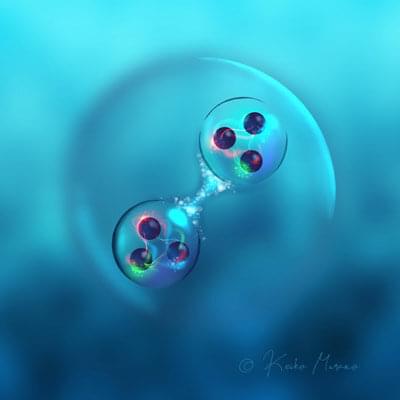

Budding, the researchers explained, is how the virus's genetic material is encapsulated in a spherical envelope, and the process is key to the virus's ability to infect. Despite that, they say, it has hitherto been poorly understood.

The Conduit team, comprising Logan Thrasher Collins (CTO of Conduit), Tamer Elkholy, Shafat Mubin, David Hill, Ricky Williams, Kayode Ezike, and Ankush Singhal, sought to change that, applying for an allocation from the White House-led Covid-19 High-Performance Computing Consortium to model the budding process on a supercomputer.