By Harry J. Bentham - More articles by Harry J. Bentham

Originally published at the Institute for Ethics and Emerging Technologies on 17 July 2014

Since Maquis Books published The Traveller and Pandemonium, a novel I wrote between 2011 and 2014, I have been responding as insightfully as possible to reviews and discussing the book’s political and philosophical themes wherever I can. The book is set in a fictional alien world, and many of its 24 chapters are politically themed on the all-too-real human weakness of infighting and resorting to hardline, extremist and even messianic plans when faced with a desperate situation.

The story tells of human cultures battling to survive in a deadly alien ecosystem. There the human race, rather than keeping animals in cages, must keep its own habitats in cages as protection from the world outside. The human characters of the story live out a primitive existence not typical of science fiction, mainly aiming at their own survival. Technological progress is nonexistent, as all human efforts have been redirected to self-defense against the threat of the alien predators.

Even though The Traveller and Pandemonium depicts humanity facing a common alien foe, the various struggling human factions still fail to cooperate. In fact, they turn ever more hostilely on each other even as the alien planet’s predators continue to close in on the last remaining human states. At the time the story is set, the human civilization on the planet is facing imminent extinction from its own infighting and extremism, as well as the aggressive native plant and animal life of the planet.

The more sinister of the factions, known as the Cult, preaches the pseudo-religious doctrine that survival on the alien world will only be possible through infusions of alien hormones and the rehabilitation of humanity to coexist with the creatures of the planet at a biological level. However, there are censored side effects of the infusions that factor into the plot, and the Cult is known for its murderous hostility toward anyone who opposes its vision.

The only alternative seems to be a second faction, but it is equally violent, and comes under the leadership of an organization whose members call themselves the Inquisitors. In their doctrine, humans must continue to isolate themselves from the alien life of the planet, and this should extend to exterminating both the alien life and the aforementioned Cult that advocates humans transmuting themselves to live safely on the planet.

I believe that this aspect of the story, a battle between two militant philosophies, serves well to capture the kind of tension and violent irrationality that can engulf humanity in the face of existential risks. There is no reason to believe that hypothetical existential risks to humanity such as a deadly asteroid impact, an extraterrestrial threat, runaway global warming, alien contact or a devastating virus would unite the planet, and there is every reason to believe that it would divide the planet. It is often the case that the more argument there is for authority and submission to a grand plan in order to survive, the greater the differences of opinion and the greater the potential for divergence and conflict.

Social habits, politics, beliefs and even the cultural trappings of the different human cultures clinging to the alien planet are fully represented in the book. In all, the story has had significant time and care put into refining it to create a compelling and believable depiction of life in an inhospitable parallel world, and readers remarked in reviews that it is a “masterclass in world-building”.

The central character of the story, nicknamed the Traveller, and his companion do not really subscribe to either of the extremist philosophies battling over humanity’s fate on the alien planet, but their ideas may be equally strange. Instead, they reject the alien world in which they live. With an almost religious naïveté, they are searching for a “better place”. It is through this part of the plot that the concepts of religious faith and hope are visited. Of course, at all times the reader knows they are right: there is a “better place”, only not the religious kind. Ultimately, the quest is for Earth, although the characters have never heard of such a place and have only inferred that it might somehow exist and represent an escape from the hostile planet where they were born.

Reviewers have acknowledged that by inverting the relationship of humanity and nature so that nature is on the advance and humans are receding and diminishing in the setting of this science-fiction novel, a unique and compelling setting is created. I believe the story offers my best exploration of a number of political and ethical themes, such as how people feel pressured to choose between hardline factions in times of extreme desperation and in the face of existential threats. Science fiction is a worthy medium in which to express and explore not only the future, but some of the most troubling political and philosophical scenarios that have plagued humanity’s past.


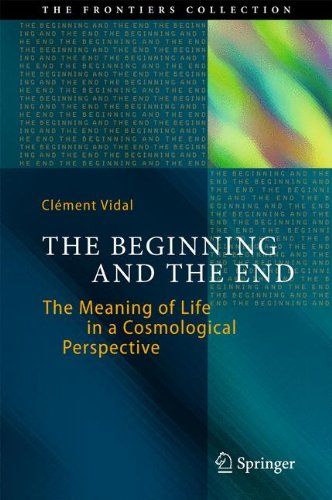

By Clément Vidal — Vrije Universiteit Brussel, Belgium.

I am happy to inform you that I just published a book which deals at length with our cosmological future. I made a short book trailer introducing it, and the book has been mentioned in the Huffington Post and H+ Magazine.

You can get 20% off with the discount code ‘Vidal2014’ (valid until 31st July)!

Uploading the content of one’s mind, including one’s personality, memories and emotions, into a computer may one day be possible, but it won’t transfer our biological consciousness and won’t make us immortal.

Uploading one’s mind into a computer, a concept popularized by the 2014 movie Transcendence starring Johnny Depp, is likely to become at least partially possible, but won’t lead to immortality. Major objections have been raised regarding the feasibility of mind uploading. Even if we could surpass every technical obstacle and successfully copy the totality of one’s mind, emotions, memories, personality and intellect into a machine, that would be just that: a copy, which itself can be copied again and again on various computers.

Neuroscientists have not yet been able to explain what consciousness is, or how it works at a neurological level. Once they do, it might be possible to reproduce consciousness in artificial intelligence. If that proves feasible, then it should in theory be possible to replicate our consciousness on computers too. Or is that jumping to conclusions?

Once all the connections in the brain are mapped and we are able to reproduce all neural connections electronically, we will also be able to run a faithful simulation of our brain on a computer. However, even if that simulation happens to have a consciousness of its own, it will never be quite like our own biological consciousness. For example, without hormones we couldn’t feel emotions like love, jealousy or attachment. (See Could a machine or an AI ever feel human-like emotions?)

Some people think that mind uploading necessarily requires leaving one’s biological body. But there is no consensus about that. Uploading means copying. When a file is uploaded to the Internet, it doesn’t get deleted at the source. It’s just a copy.
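The file analogy can be made concrete with a minimal Python sketch. It only illustrates the difference between copying and moving a file; the file names and the helper functions `upload_copy` and `transfer_move` are hypothetical, and nothing here suggests that a mind could actually be stored this way.

```python
import shutil
import tempfile
from pathlib import Path


def upload_copy(source: Path, destination: Path) -> None:
    """Copy the file: the source stays where it was (the 'upload' case)."""
    shutil.copy(source, destination)


def transfer_move(source: Path, destination: Path) -> None:
    """Move the file: afterwards it no longer exists at the source."""
    shutil.move(str(source), str(destination))


def demo() -> dict:
    """Run both operations in a temporary directory and report what exists."""
    with tempfile.TemporaryDirectory() as tmp:
        tmp_path = Path(tmp)

        # Hypothetical stand-in for "the content of one's mind".
        original = tmp_path / "mind.txt"
        original.write_text("memories, personality, emotions")

        # Uploading: both the original and the copy exist afterwards.
        uploaded = tmp_path / "uploaded_mind.txt"
        upload_copy(original, uploaded)
        after_copy = (original.exists(), uploaded.exists())

        # Transferring: only the destination exists afterwards.
        moved = tmp_path / "moved_mind.txt"
        transfer_move(original, moved)
        after_move = (original.exists(), moved.exists())

        # Both results hold the same data; only the fate of the source differs.
        same_content = uploaded.read_text() == moved.read_text()

        return {
            "after_copy": after_copy,
            "after_move": after_move,
            "same_content": same_content,
        }


if __name__ == "__main__":
    print(demo())
```

In this sketch the copy and the moved file are indistinguishable by content. What differs is whether the original survives, which is the distinction the argument turns on.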

The best analogy to understand that is cloning. Identical twins are an example of human clones that already live among us. Identical twins share the same DNA, yet nobody would argue that they also share a single consciousness.

It will be easy to prove that hypothesis once the technology becomes available. Unlike Johnny Depp in Transcendence, we don’t have to die to upload our mind to one or several computers. Doing so won’t deprive us of our biological consciousness. It will just be like having a mental clone of ourselves, one that does not affect who we are, and we will never feel like we are inside the computer.

If the conscious self doesn’t leave the biological body (i.e. “die”) when transferring mind and consciousness, it would basically mean that the individual would feel in two places at the same time: in the biological body and in the computer. That is problematic. It’s hard to conceive how that could be possible since the very essence of consciousness is a feeling of indivisible unity.

If we want to avoid this problem of dividing the sense of self, we must indeed find a way to transfer the consciousness from the body to the computer. But this would assume that consciousness is merely some data that can be transferred. We don’t know that yet. It could be tied to our neurons or to very specific atoms in some neurons. If that were the case, destroying the neurons would destroy the consciousness.

Even assuming that we found a way to transfer the consciousness from the brain to a computer, how could we prevent that consciousness from being copied to other computers, recreating the philosophical problem of splitting the self? That would actually be much worse, since a computerized consciousness could be copied endless times. How would you then feel a sense of unified consciousness?

Since mind uploading won’t preserve our self-awareness, the feeling that we are ourselves and not someone else, it won’t lead to immortality. We’ll still be bound to our bodies, but life expectancy for transhumanists and cybernetic humans will be considerably extended.

Immortality is a confusing term since it implies living forever, which is impossible since nothing is eternal in our universe, not even atoms or quarks. Living for billions of years, while highly improbable in itself, wouldn’t even be close to immortality. It may seem like a very large number compared to our short existence, but compared to eternity (infinite time), it isn’t much longer than 100 years.

Even machines aren’t much longer lived than we are. Actually, modern computers tend to have much shorter life spans than humans. A 10-year-old computer is very old indeed, as well as slower and more prone to technical problems than a new computer. So why would we think that transferring our mind to a computer would grant us greatly extended longevity?

Even if we could transfer all our mind’s data and consciousness an unlimited number of times onto new machines, that won’t prevent the machine currently hosting us from being destroyed by viruses, bugs, mechanical failures or outright physical destruction of the whole hardware, intentionally, accidentally or due to natural catastrophes.

In the meantime, science will slow down, stop and even reverse the aging process, enabling us to live healthily for a very long time by today’s standards. This is known as negligible senescence. Nevertheless, cybernetic humans with robotic limbs and respirocytes will still die in accidents or wars. At best we could hope to live for several hundred or several thousand years, assuming that nothing kills us first.

As a result, there won’t be that much difference between living inside a biological body and living inside a machine. The risks will be comparable. Human longevity will in all likelihood increase dramatically, but there simply is no such thing as immortality.

Artificial intelligence could easily replicate most of the processes, thoughts, emotions, sensations and memories of the human brain, with some reservations about feelings and emotions that reside outside the brain, in the biological body. An AI might also have a consciousness of its own. Backing up the content of one’s mind will most probably be possible one day. However, there is no evidence that consciousness or self-awareness is merely information that can be transferred, since consciousness cannot be divided into two or more parts.

Consciousness is most likely tied to neurons in a certain part of the brain (which may well include the thalamus). These neurons are maintained throughout life, from birth to death, without being regenerated like other cells in the body, which explains the experienced feeling of continuity.

There is not the slightest scientific evidence of a duality between body and consciousness, or in other words that consciousness could be equated with an immaterial soul. In the absence of such duality, a person’s original consciousness would cease to exist with the destruction of the neurons in his/her brain responsible for consciousness. Unless one believes in an immaterial, immortal soul, the death of one’s brain automatically results in the extinction of consciousness. While a new consciousness could be imitated to perfection inside a machine, it would merely be a clone of the person’s consciousness, not an actual transfer, meaning that that feeling of self would not be preserved.

———

This article was originally published on Life 2.0.

Computers will soon be able to simulate the functioning of a human brain. In the near future, artificial superintelligence could become vastly more intellectually capable and versatile than humans. But could machines ever truly experience the whole range of human feelings and emotions, or are there technical limitations?

In a few decades, intelligent and sentient humanoid robots will wander the streets alongside humans, work with humans, socialize with humans, and perhaps one day will be considered individuals in their own right. Research in artificial intelligence (AI) suggests that intelligent machines will eventually be able to see, hear, smell, sense, move, think, create and speak at least as well as humans. They will feel emotions of their own and probably one day also become self-aware.

There may not be any reason per se to want sentient robots to experience exactly all the emotions and feelings of a human being, but it may be interesting to explore the fundamental differences in the way humans and robots can sense, perceive and behave. Tiny genetic variations between people can result in major discrepancies in the way each of us thinks, feels and experiences the world. If we appear so diverse despite the fact that all humans are on average 99.5% identical genetically, even across racial groups, how could we possibly expect sentient robots to feel the exact same way as biological humans? There could be striking similarities between us and robots, but also drastic divergences on some levels. This is what we will investigate below.

Computers are undergoing a profound mutation at the moment. Neuromorphic chips have been modelled on the way the human brain works, reproducing its massively parallel neurological processes using artificial neural networks. This will enable computers to process sensory information like vision and audition much more like animals do. Considerable research is currently devoted to creating a functional computer simulation of the whole human brain. The Human Brain Project is aiming to achieve this by 2016. Does that mean that computers will finally experience feelings and emotions like us? Surely if an AI can simulate a whole human brain, then it becomes a sort of virtual human, doesn’t it? Not quite. Here is why.

There is an important distinction to be made from the outset between an AI residing solely inside a computer with no sensors at all, and an AI that is equipped with a robotic body and sensors. A computer alone would have a far more limited range of emotions, as it wouldn’t be able to physically interact with its environment. The more sensory feedback a machine can receive, the wider the range of feelings and emotions it will be able to experience. But, as we will see, there will always be fundamental differences between the type of sensory feedback that a biological body and a machine can receive.

Here is an illustration of how limited an AI is emotionally without a sensory body of its own. In animals, fear, anxiety or phobias are evolutionary defense mechanisms aimed at raising vigilance in the face of danger. That is because our bodies work with biochemical signals involving hormones and neurotransmitters sent by the brain to prompt a physical action when our senses perceive danger. Computers don’t work that way. Without sensors feeding them information about their environment, computers wouldn’t be able to react emotionally.

Even if a computer could remotely control machines like robots (e.g. through the Internet) that are endowed with sensory perception, the computer itself wouldn’t necessarily care if the robot (a discrete entity) is harmed or destroyed, since it would have no physical consequence on the AI itself. An AI could fear for its own well-being and existence, but how is it supposed to know that it is in danger of being damaged or destroyed? It would be the same as a person who is blind, deaf and whose somatosensory cortex has been destroyed. Without feeling anything about the outside world, how could it perceive danger? That problem disappears once the AI is given at least one sense, like a camera to see what is happening around itself. Now if someone comes toward the computer with a big hammer, it will be able to fear for its existence!

In theory, any neural process can be reproduced digitally in a computer, even though the brain is mostly analog. This is hardly a concern, as Ray Kurzweil explained in his book How to Create a Mind. However, it does not always make sense to try to replicate in a machine everything a human being feels.

While sensory feelings like heat, cold or pain could easily be felt from the environment if the machine is equipped with the appropriate sensors, this is not the case for other physiological feelings like thirst, hunger, and sleepiness. These feelings alert us to the state of our body and are normally triggered by hormones such as vasopressin, ghrelin, or melatonin. Since machines have neither a digestive system nor hormones, it would be downright nonsensical to try to emulate such feelings.

Emotions do not arise for no reason. They are either a reaction to an external stimulus, or a spontaneous expression of an internal thought process. For example, we can be happy or joyful because we received a present, got a promotion or won the lottery. These are external causes that trigger the emotions inside our brain. The same emotion can be achieved as the result of an internal thought process. If I manage to find a solution to a complicated mathematical problem, that could make me happy too, even if nobody asked me to solve it and it does not have any concrete application in my life. It is a purely intellectual problem with no external cause, but solving it confers satisfaction. The emotion could be said to have arisen spontaneously from an internalized thought process in the neocortex. In other words, solving the problem in the neocortex causes the emotion in another part of the brain.

An intelligent computer could also prompt some emotions based on its own thought processes, just like the joy or satisfaction experienced by solving a mathematical problem. In fact, as long as it is allowed to communicate with the outside world, there is no major obstacle to a computer feeling true emotions of its own like joy, sadness, surprise, disappointment, fear, anger, or resentment, among others. These are all emotions that can be produced by interactions through language (e.g. reading, online chatting) with no need for physiological feedback.

Now let’s think about how and why humans experience a sense of well-being and peace of mind, two emotions far more complex than joy or anger. Both occur when our physiological needs are met, when we are well fed, rested, feel safe, don’t feel sick, and are on the right track to pass on our genes and keep our offspring secure. These are compound emotions that require other basic emotions as well as physiological factors. A machine that has no physiological needs, cannot get sick and does not need to worry about passing on its genes to posterity will have no reason to feel that complex emotion of ‘well-being’ the way humans do. For a machine, well-being may exist, but in a much more simplified form.

Just like machines cannot reasonably feel hunger because they do not eat, replicating emotions on machines with no biological body, no hormones, and no physiological needs can be tricky. This is the case with social emotions like attachment, sexual emotions like love, and emotions originating from evolutionary mechanisms set in the (epi)genome. This is what we will explore in more detail below.

What really distinguishes intelligent machines from humans and animals is that the former do not have a biological body. This is essentially why they could not experience the same range of feelings and emotions as we do, since many of them inform us about the state of our biological body.

An intelligent robot with sensors could easily see, hear, detect smells, feel an object’s texture, shape and consistency, feel pleasure and pain, heat and cold, and the like. But what about the sense of taste? Or the effects of alcohol on the mind? Since machines do not eat, drink and digest, they wouldn’t be able to experience these things. A robot designed to socialize with humans would be unable to understand and share the feelings of gastronomical pleasure or inebriety with humans. It could have a theoretical knowledge of them, but not a first-hand knowledge from an actually felt experience.

But the biggest obstacle to simulating physical feelings in a machine comes from the vagus nerve, which controls such varied things as digestion, ‘gut feelings’, heart rate and sweating. When we are scared or disgusted, we feel it in our guts. When we are in love we feel butterflies in our stomach. That’s because of the way our nervous system is designed. Quite a few emotions are felt through the vagus nerve connecting the brain to the heart and digestive system, so that our body can prepare to court a mate, fight an enemy or escape in the face of danger, by shutting down digestion, raising adrenaline and increasing heart rate. Feeling disgusted can help us vomit something that we have swallowed and shouldn’t have.

Strong emotions can affect our microbiome, the trillions of gut bacteria that help us digest food and that secrete 90% of the serotonin and 50% of the dopamine used by our brain. The thousands of species of bacteria living in our intestines can vary quickly based on our diet, but it has been demonstrated that even emotions like stress, anxiety, depression and love can strongly affect the composition of our microbiome. This is very important because of the essential role that gut bacteria play in maintaining our brain functions. The relationship between gut and brain works both ways. The presence or absence of some gut bacteria has been linked to autism, obsessive-compulsive disorder and several other psychological conditions. What we eat actually influences the way we think too, by changing our gut flora, and therefore also the production of neurotransmitters. Even our intuition is linked to the vagus nerve, hence the expression ‘gut feeling’.

Without a digestive system, a vagus nerve and a microbiome, robots would miss a big part of our emotional and psychological experience. Our nutrition and microbiome influence our brain far more than most people suspect. They are one of the reasons why our emotions and behaviour are so variable over time (in addition to maturity; see below).

Another key difference between machines and humans (or animals) is that our emotions and thoughts can be severely affected by our health, physical condition and fatigue. Irritability is often an expression of mental or physical exhaustion caused by a lack of sleep or nutrients, or by a situation that puts excessive stress on mental faculties and increases our need for sleep and nutrients. We could argue that computers may overheat if used too intensively, and may also need to rest. That is not entirely true if the hardware is properly designed with a super-efficient cooling system and a steady power supply. New types of nanochips may not produce enough heat to have any overheating problem at all.

Most importantly machines don’t feel sick. I don’t mean just being weakened by a disease or feeling pain, but actually feeling sick, such as indigestion, nausea (motion sickness, sea sickness), or feeling under the weather before tangible symptoms appear. These aren’t enviable feelings of course, but the point is that machines cannot experience them without a biological body and an immune system.

When tired or sick, not only do we need to rest to recover our mental faculties and stabilize our emotions, we also need to dream. Dreams are used to clear our short-term memory cache (in the hippocampus), to replenish neurotransmitters, to consolidate memories (by myelinating synapses during REM sleep), and to let go of the day’s emotions by letting our neurons fire freely. Dreams also allow a different kind of thinking, free of cultural or professional taboos, which increases our creativity. This is why we often come up with great ideas or solutions to our problems during our sleep, and notably during the lucid dreaming phase.

Computers cannot dream and wouldn’t need to, because they aren’t biological brains with neurotransmitters, stressed-out neurons and synapses that need to get myelinated. Without dreams, an AI would nevertheless lose an essential component of feeling like a biological human.

Being in love is an emotion that brings a male and a female individual (save for some exceptions) of the same species together in order to reproduce and raise their offspring until they grow up. Sexual love is caused by hormones, but is not merely the product of hormonal changes in our brain. It involves changes in the biochemistry of our whole body and can even lead to important physiological effects (e.g. on morphology) and long-term behavioural changes. Clearly sexual love is not ‘just an emotion’ and is not purely a neurological process either. Replicating the neurological expression of love in an AI would not simulate the whole emotion of love, but only one of its facets.

Apart from the issue of reproducing the physiological expression of love in a machine, there is also the question of causation. There is a huge difference between an artificially implanted/simulated emotion and one that is capable of arising by itself from environmental causes. People can fall in love for a number of reasons, such as physical attraction and mental attraction (shared interests, values, tastes, etc.), but one of the most important in the animal world is genetic compatibility with the prospective mate. Individuals who possess very different immune systems (HLA genes), for instance, tend to be more strongly attracted to each other and feel more ‘chemistry’. We could imagine that a robot with a sense of beauty and values could appreciate the looks and morals of another robot or a human being and even feel attracted (platonically). Yet a machine couldn’t experience the ‘chemistry’ of sexual love because it lacks the hormones, genes and other biochemical markers required for sexual reproduction. In other words, robots could have friends but not lovers, and that makes sense.

A substantial part of the range of human emotions and behaviours is anchored in sexuality. Jealousy is another good example. Jealousy is intricately linked to love. It is the fear of losing one’s loved one to a sexual rival. It is an innate emotion whose only purpose is to maximize our chances of passing on our genes through sexual reproduction by warding off competitors. Why would a machine, which does not need to reproduce sexually, need to feel that?

One could wonder what difference it makes whether a robot can feel love or not. Robots don’t need to reproduce sexually, so who cares? If we need intelligent robots to work with humans in society, for example by helping to take care of the young, the sick and the elderly, they could still function as social individuals without feeling sexual love, couldn’t they? In fact you may not want a humanoid robot to become a sexual predator, especially if working with kids! Not so fast. Without a basic human emotion like love, an AI simply cannot think, plan, prioritize and behave the same way as humans do. Its way of thinking, planning and prioritizing would rely on completely different motivations. For example, young human adults spend considerable time and energy searching for a suitable mate in order to reproduce.

A robot endowed with an AI of human-level or greater intelligence, but lacking the need for sexual reproduction, would behave, plan and prioritize its existence very differently from humans. That is not necessarily a bad thing, for a lot of conflicts in human society are caused by sex. But it also means that it could become harder for humans to predict the behaviour and motivation of autonomous robots, which could be a problem once they become more intelligent than us in a few decades. The bottom line is that by lacking just one essential human emotion (let alone many), intelligent robots could have very divergent behaviours, priorities and morals from humans. It could be different in a good way, but we can’t know that for sure at present since they haven’t been built yet.

Humans are social animals. They typically, though not always (e.g. some types of autism), seek to belong to a group, make friends, share feelings and experiences with others, gossip, seek approval or respect from others, and so on. Interestingly, a person’s sociability depends on a variety of factors not found in machines, including gender, age, level of confidence, health, well being, genetic predispositions, and hormonal variations.

We could program an AI to mimic a certain type of human sociability, but that sociability wouldn’t naturally evolve over time with experience and environmental factors (food, heat, diseases, endocrine disruptors, microbiome). Knowledge can be learned, but spontaneous reactions to environmental factors cannot.

Humans tend to be more sociable when the weather is hot and sunny, when they drink alcohol and when they are in good health. A machine has no need to react like that, unless once again we intentionally program it to resemble humans. But even then it couldn’t feel everything we feel as it doesn’t eat, doesn’t have gut bacteria, doesn’t get sick, and doesn’t have sex.

Humans, like all mammals, have an innate need for maternal warmth in childhood. An experiment was conducted with newborn mice taken away from their biological mother. The mice were placed in a cage with two dummy mothers. One of them was warm, fluffy and cosy, but did not have milk. The other one was hard, cold and uncosy but provided milk. The baby mice consistently chose the cosy one, demonstrating that the need for comfort and safety trumps nutrition in infant mammals. Likewise, humans deprived of maternal (or paternal) warmth and care as babies almost always experience psychological problems growing up.

In addition to childhood care, humans also need the feeling of safety and cosiness provided by the shelter of one’s home throughout life. Not all animals are like that. Even as hunter-gatherers or pastoralist nomads, all Homo sapiens need a shelter, be it a tent, a hut or a cave.

How could we expect that kind of reaction and behaviour in a machine that does not need to grow from babyhood to adulthood, cannot know what it is to have parents or siblings, does not need to feel reassured by maternal warmth, and does not have a biological compulsion to seek shelter? Without those feelings, it is extremely doubtful that a machine could ever truly understand and empathize completely with humans.

These limitations mean that it may be useless to try to create intelligent, sentient and self-aware robots that truly think, feel and behave like humans. Reproducing our intellect, language, and senses (except taste) is the easy part. Then comes consciousness, which is harder but still feasible. But since our emotions and feelings are so deeply rooted in our biological body and its interaction with its environment, the only way to reproduce them would be to reproduce a biological body for the AI. In other words, we are not talking about creating a machine anymore, but about genetically engineering a new living being, or using neural implants for existing humans.

The way humans experience emotions evolves dramatically from birth to adulthood. Children are typically hyperactive and excitable and are prone to making rash decisions on impulse. They cry easily and have difficulties containing and controlling their emotions and feelings. As we mature, we learn more or less successfully to master our emotions. Actually, controlling one’s emotions gets easier over time because with age the number of neurons in the brain decreases, emotions get blunter and vital impulses get weaker.

The expression of one’s emotions is heavily regulated by culture and taboos. That’s why speakers of Romance languages will generally express their feelings and affection more freely than, say, Japanese or Finnish people. Would intelligent robots also follow one specific human culture, or create a culture of their own?

Sex hormones also influence the way we feel and express emotions. Male testosterone makes people less prone to emotional display, more rational and cold, but also more aggressive. Female estrogens increase empathy, affection and maternal instincts of protection and care. A good example of the role of biology in emotions is the way women’s hormonal cycles (and the resulting menstruation) affect their emotions. One of the reasons that children process emotions differently from adults is that they have lower levels of sex hormones. As people age, hormonal levels decrease (not just sex hormones), making us more mellow.

Machines don’t mature emotionally, do not go through puberty, do not have hormonal cycles, and do not undergo hormonal change based on their age, diet and environment. Artificial intelligence could learn from experience and mature intellectually, but not mature emotionally like a child becoming an adult. This is a vital difference that shouldn’t be underestimated. Program an AI to have the emotional maturity of a 5-year-old and it will never grow up. Children (especially boys) cannot really understand the reason for their parents’ anxiety toward them until they grow up and have children of their own, because they lack the maturity and sex hormones associated with parenthood.

We could always run software emulating changes in AI maturity over time, but those changes would not be the result of experiences and interactions with the environment. It may not be useful to create robots that mature like us, but the argument debated here is whether machines could ever feel exactly like us or not. This argument is not purely rhetorical. Some transhumanists wish to be able one day to upload their mind onto a computer and transfer their consciousness (which may not be possible for a number of reasons). Assuming that it becomes possible, what if a child or teenager decides to upload his or her mind and lead a new robotic existence? One obvious problem is that this person would never fulfill his or her potential for emotional maturity.

The loss of our biological body would also deprive us of our capacity to experience feelings and emotions bound to our physiology. We may be able to keep those already stored in our memory, but we may never dream, enjoy food, or fall in love again.

Even though many human emotions are beyond the range of machines due to their non-biological nature, some emotions could very well be felt by an artificial intelligence. These include, among others:

The following emotions and feelings could not be wholly or faithfully experienced by an AI, even with a sensing robotic body, beyond mere implanted simulation.

In addition, machine emotions would run up against the following issues that would prevent them from feeling and experiencing the world truly like humans.

It is not completely impossible to bypass these obstacles, but that would require creating a humanoid machine that not only possesses human-like intellectual faculties, but also has an artificial body that can eat and digest, with a digestive system connected to the central microprocessor in the same way as our vagus nerve is connected to our brain. That robot would also need a gender and a capacity to have sex and feel attracted to other humanoid robots or humans based on a predefined programming that serves as an alternative to a biological genome to create a sense of ‘sexual chemistry’ when matched with an individual with a compatible “genome”. It would necessitate artificial hormones to regulate its hunger, thirst, sexual appetite, homeostasis, and so on.

Although we lack the technology and in-depth knowledge of the human body to consider such an ambitious project any time soon, it could eventually become possible. One could wonder whether such a magnificent machine could still be called a machine, or simply an artificially made living being. I personally don’t think it should be called a machine at that point.

———

This article was originally published on Life 2.0.

I recently saw the film Transcendence with a close friend. If you can get beyond Johnny Depp’s siliconised mugging of Marlon Brando and Rebecca Hall’s waddling through corridors of quantum computers, Transcendence provides much to think about. Even though Christopher Nolan of Inception fame was involved in the film’s production, the pyrotechnics are relatively subdued – at least by today’s standards. While this fact alone seems to have disappointed some viewers, it nevertheless enables you to focus on the dialogue and plot. The film is never boring, even though nothing about it is particularly brilliant. However, the film stays with you, and that’s a good sign. Mark Kermode at the Guardian was one of the few reviewers who did the film justice.

The main character, played by Depp, is ‘Will Caster’ (aka Ray Kurzweil, but perhaps also an allusion to Hans Castorp in Thomas Mann’s The Magic Mountain). Caster is an artificial intelligence researcher based at Berkeley who, with his wife Evelyn Caster (played by Hall), is trying to devise an algorithm capable of integrating all of earth’s knowledge to solve all of its problems. (Caster calls this ‘transcendence’ but admits in the film that he means ‘singularity’.) They are part of a network of researchers doing similar things. Although British actors like Hall and the key colleague Paul Bettany (sporting a strange Euro-English accent) are main players in this film, the film itself appears to transpire entirely within the borders of the United States. This is a bit curious, since a running assumption of the film is that if you suspect a malevolent consciousness uploaded to the internet, then you should shut the whole thing down. But in this film at least, ‘the whole thing’ is limited to American cyberspace.

Before turning to two more general issues concerning the film, which I believe may have led both critics and viewers to leave unsatisfied, let me draw attention to a couple of nice touches. First, the leader of the ‘Revolutionary Independence from Technology’ (RIFT), whose actions propel the film’s plot, explains that she used to be an advanced AI researcher who defected upon witnessing the endless screams of a Rhesus monkey while its entire brain was being digitally uploaded. Once I suspended my disbelief in the occurrence of such an event, I appreciated it as a clever plot device for showing how one might quickly convert from being radically pro- to anti-AI, perhaps presaging future real-world targets for animal rights activists. Second, I liked the way in which quantum computing was highlighted and represented in the film. Again, what we see is entirely speculative, yet it highlights the promise that one day it may be possible to read nature as pure information that can be assembled according to need to produce what one wants, thereby rendering our nanotechnology capacities virtually limitless. 3D printing may be seen as a toy version of this dream.

Now on to the two more general issues, which viewers might find as faults, but I think are better treated as what the Greeks called aporias (i.e. open questions):

(1) I think this film is best understood as taking place in an alternative future projected from when, say, Ray Kurzweil first proposed ‘the age of spiritual machines’ (i.e. 1999). This is not the future as projected in, say, Spielberg’s Minority Report, in which the world has become so ‘Jobs-ified’ that everything is touch screen-based. In fact, the one moment where a screen is very openly touched proves inconclusive (i.e. when, just after the upload, Evelyn impulsively responds to Will being on the other side of the interface). This is still a world very much governed by keyboards (hence the symbolic opening shot where a keyboard is used as a doorstop in the cyber-meltdown world). Even the World Wide Web doesn’t seem to have the prominence one might expect in a film where computer screens are featured so heavily. Why is this the case? Perhaps because the script had been kicking around for a while (which is true). This may also explain why Evelyn’s pep talk to funders includes a line about Einstein saying something ‘nearly fifty years ago’. (Einstein died in 1955.) Or, for that matter, why the FBI agent (played by Irish actor Cillian Murphy) looks like something out of a 1970s TV detective series, the on-site military commander looks like George C. Scott and the great quantum computing mecca is located in a town that looks frozen in the 1950s. Perhaps we are seeing here the dawn of ‘steampunk’ for the late 20th century.

(2) The film contains heavy Christian motifs, mainly surrounding Paul Bettany’s character, Max Waters, who turns out to be the only survivor of the core research team involved in uploading consciousness. He wears a cross around his neck, which pops up at several points in the film. Moreover, once Max is abducted by RIFT, he learns that his writings querying whether digital uploading enhances or obliterates humanity have been unwittingly inspirational. Max and Will can be contrasted in terms of where they stand in relation to the classic Faustian bargain: Max refuses what Will accepts (quite explicitly, in response to the person who turns out to be his assassin). At stake is whether our biblically privileged status as creatures entitles us to take the next step to outright deification, which in this case means merging with the source of all knowledge on the internet. To underscore the biblical dimension of the dilemma, toward the end of the film, Max confronts Evelyn (Eve?) with the realization that she was the one who nudged Will toward this crisis. Yet, the film’s overall verdict on his Faustian fall is decidedly mixed. Once uploaded, Will does no permanent damage, despite the viewer’s expectations. On the contrary, like Jesus, he manages to cure the ill, and even when battling with the amassed powers of the US government and RIFT, he ends up not killing anyone. However, the viewer is led to think that Will 2.0 may have overstepped the line when he revealed his ability to monitor Evelyn’s thoughts. So the real transgression appears to lie in the violation of privacy. (The Snowdenistas would be pleased!) But the film leaves the future quite open, as what the viewer sees in the opening and final scenes looks more like the result of an extended blackout (and hints are given that some places have already begun to restore their ICT infrastructure) than anything resembling irreversible damage to life as we know it.
One can read this either as a warning shot of greater damage ahead if we go down the ‘transcendence’ route, or as a suggestion that such a route might be worth pursuing if we manage to sort out the ‘people issues’. Given that Max ends the film by eulogising Will and Evelyn’s attempts to benefit humanity, I read the film as cautiously optimistic about the prospects for ‘transcendence’, where the film’s plot is taken as offering a simulated trial run.

My own final judgement is that this film would be very good for classroom use to raise the entire range of issues surrounding what I have called ‘Humanity 2.0’.

From CLUBOF.INFO

All religions have points of agreement concerning human toil and its relationship to the divine. This essay considers some of the Biblical and Hellenic parables of human origin, specifically the origins of human knowledge and instrumentality.

Here I want to present how knowledge and instrumentality are reported to originate with an act of mischief, specifically the theft of a divine artifact. My argument is that, although the possession of knowledge may be seen as a sin to be atoned for, the kind of atonement originally promoted may have simply been for us to apply our knowledge constructively in our lives. The concept of atoning for original sin (whether it is the Biblical or Hellenic sin) can then be justified with secular arguments. Everyone can agree that we retain the capacity for knowledge, and this means our atonement for the reported theft of such knowledge would simply rest with the use of the very same tool we reportedly stole.

The story of the titan Prometheus, from ancient Greek mythology, has been interpreted and reinterpreted many times. A great many writers and organizations have laid claim to the symbolism of Prometheus, including in modern times. [1] I would argue that too many writers have diluted and over-explored the meaning of the parable by comparing everything to it, although this is not the focus of my essay. Greek mythology is notably weak on the subject of “good and evil” because it predates the Judeo-Christian propagation of their dualism, and this means most of the characters in Greek mythology can be defended or condemned without violating Hellenic theology. Prometheus as a mythic figure could be condemned from a Christian standpoint, because he seems strikingly similar to other scriptural characters engaged in a revolt against the divine. Yet the spirit of Prometheus and his theft has also been endorsed by people and organizations, such as the transhumanists who see him as an expression of the noblest human aspirations. [2]

The widely repeated version of the Prometheus story holds that Prometheus was a titan, a primordial deity who literally stole a sample of fire from Olympus and handed it down to humans. Prometheus was subsequently punished by the gods, who nailed him to a mountain and trapped him in a time-loop so that an eagle repeatedly ate his liver before it was regenerated to be eaten yet again. However, contrary to popular belief, the Prometheus parable is not mainly about the theft of fire but about the creation of the first man. According to Apollodorus’ Library dating from the First or Second Century AD:

“After he had fashioned men from water and earth, Prometheus also gave them fire, which he had hidden in a fennel stalk in secret from Zeus. But when Zeus learned of it, he ordered Hephaistos to nail his body to Mount Caucasos (a mountain that lies in Scythia). So Prometheus was nailed to it and held fast there for a good many years; and each day, an eagle swooped down to feed on the lobes of his liver, which grew again by night. Such was the punishment suffered by Prometheus for having stolen the fire, until Heracles later released him, as we shall show in our account of Heracles.” [3]

Immediately, you may be eager to identify the differences between this account of humanity’s creation and the Abrahamic accounts. For example, man is created by the thief, Zeus punishes the thief rather than man (it may seem), and the punishment of the thief is not portrayed as good, because ultimately the hero Heracles is destined to set Prometheus free again. However, the similarities are striking. Mankind is believed, in this parable, to be a source of trouble for the gods because mankind’s unique power derives from the violation and theft of divine power. We also encounter the apparent responsibility of women for the release of evil, found in the parable of Pandora, noted in the Library as being described by Hesiod as a “beautiful evil.” [4] Pandora (meaning women) was inflicted on men as the punishment for their possession of fire, which directly connects the tale of Pandora with the tale of Prometheus. We may speculate that Hesiod’s Pandora story contributed to misogyny in the way some have argued that the Genesis account justifies misogyny. [5] However, such misogyny would defy the notion that Pandora, unlike men, was created by the gods [6] and was not punished by them…

The whole article has been reprinted at CLUBOF.INFO

From CLUBOF.INFO

The Human Race to the Future (2014 Edition) is a publication of the scientific think tank the Lifeboat Foundation, first made available in 2013, covering a number of dilemmas fundamental to the human future and of great interest to all readers. Daniel Berleant’s approach to popularizing science is more entertaining than that of many other science writers, and this book contains many surprises and much useful knowledge.

Some of the science covered in The Human Race to the Future, such as future ice ages and predictions of where natural evolution will take us next, is not immediately relevant to our lives and politics, but it still makes for fascinating reading. The rest of the science in the book is closely linked to society’s immediate future, and deserves great consideration by commentators, activists and policymakers because it is only going to get more important as the world moves forward.

The book makes many warnings and calls for caution, but also makes an optimistic forecast about how society might look in the future. For example, it is “economically possible” to have a society where all the basics are free and all work is essentially optional (a way for people to turn their hobbies into a way of earning more possessions) (p. 6–7).

A transhumanist possibility of interest in The Human Race to the Future is the change in how people communicate, including closing the gap between thought and action to create instruments (maybe even mechanical bodies) that respond to thought alone. The world may be projected to move away from keyboards and touchscreens towards mind-reading interfaces (p. 13–18). This would be necessary for people suffering from physical disabilities, and for soldiers in the arms race to improve response times in lethal situations.

To critique the above point made in the book, it is likely that drone operators and power-armor wearers in future armies would be very keen to link their brains directly to their hardware, and the emerging mind-reading technology would make it possible. However, there is reason to doubt the possibility of effective teamwork while relying on such interfaces. Verbal or visual interfaces are actually more attuned to people as a social animal, letting us hear or see our colleagues’ thoughts and review their actions as they happen, which allows for better teamwork. A soldier, for example, may be happy with his own improved reaction times when controlling equipment directly with his brain, but his fellow soldiers and officers may only be irritated by the lack of an intermediate phase to see his intent and rescind his actions before he completes them. Some helicopter and vehicle accidents are averted only by one crewman seeing another’s error, and correcting him in time. If vehicles were controlled by mind-reading, these errors would increasingly start to become fatal.

Reading and research is also an area that could develop in a radical new direction unlike anything before in the history of communication. The Human Race to the Future speculates that beyond articles as they exist now (e.g. Wikipedia articles) there could be custom-generated articles specific to the user’s research goal or browsing. One’s own query could shape the layout and content of each article, as it is generated. This way, reams of irrelevant information will not need to be waded through to answer a very specific query (p. 19–24).

Much like the view I have expressed in my own works, the book sees industrial civilization as being burdened above all by too much centralization, e.g. oil refineries. This endangers civilization, and threatens collapse if something should later go wrong (p. 32, 33). For example, an electromagnetic pulse (EMP) resulting from a solar storm could cause serious damage as a result of the centralization of electrical infrastructure. Digital sabotage could also threaten such infrastructure (p. 34, 35).

The solution to this problem is decentralization, as “where centralization creates vulnerability, decentralization alleviates it” (p. 37). Solar cells are one example of decentralized power production (p. 37–40), but there is also much promise in home fuel production using such things as ethanol and biogas (p. 40–42). Beyond fuel, there is also much benefit that could come from decentralized, highly localized food production, even “labor-free”, and “using robots” (p. 42–45). These possibilities deserve maximum attention for the sake of world welfare, considering the increasing UN concerns about getting adequate food and energy supplies to the growing global population. There should not need to be a food vs. fuel debate, as the only acceptable solution can be to engineer solutions to both problems. An additional option for increasing food production is artificial meat, which should aim to replace the reliance on livestock. Reliance on livestock has an “intrinsic wastefulness” that artificial meat does not have, so it makes sense for artificial meat to become the cheapest option in the long run (p. 62–65). Perhaps stranger and more profound is the option of genetically enhancing humans to make better use of food and other resources (p. 271–274).

On a related topic, sequencing our own genome may be able to have “major impacts, from medicine to self-knowledge” (p. 46–51). However, the book does not contain mention of synthetic biology and the potential impacts of J. Craig Venter’s work, as explained in such works as Life at the Speed of Light. This could certainly be something worth adding to the story, if future editions of the book aim to include some additional detail.

At least related to synthetic biology is the book’s discussion of genetic engineering of plants to produce healthier or more abundant food. Alternatively, plants could be genetically programmed to extract metal compounds from the soil (p. 213–215). However, we must be aware that this could similarly lead to threats, such as “superweeds that overrun the world” similar to the flora in John Wyndham’s The Day of the Triffids (p. 197–219). Synthetic biology products could also accidentally expose civilization to microorganisms with unknown consequences, perhaps even as dangerous as alien contagions depicted in fiction. On the other hand, they could lead to potentially unlimited resources, with strange vats of bacteria capable of manufacturing oil from simple chemical feedstocks. Indeed, “genetic engineering could be used to create organic prairies that are useful to humans” (p. 265), literally redesigning and upgrading our own environment to give us more resources.

The book advocates that politics should focus on long-term thinking, e.g. to deal with global warming, and should involve “synergistic cooperation” rather than “narrow national self-interest” (p. 66–75). This is a very important point, and may coincide with the complex prediction that nation states in their present form are flawed and too slow-moving. Nation-states may be increasingly incapable of meeting the challenges of an interconnected world in which national narratives produce less and less legitimate security thinking and transnational identities become more important.

Close to issues of security, The Human Race to the Future considers nuclear proliferation, and argues that the reasons for nuclear proliferation need to be investigated in more depth for the sake of simply reducing incentives. To avoid further research, due to thinking that it has already been sufficiently completed, is “downright dangerous” (p. 89–94). Such a call is certainly necessary at a time when there is still hostility against developing countries with nuclear programs, and this hostility is simply inflammatory and making the world more dangerous. To a large extent, nuclear proliferation is inevitable in a world where countries are permitted to bomb one another because of little more than suspicions and fears.

Another area covered in this book that is worth celebrating is the AI singularity, which is described here as meaning the point at which a computer is sophisticated enough to design a more powerful computer than itself. While it could mean unlimited engineering and innovation without the need for human imagination, there are also great risks. For example, a “corporbot” or “robosoldier” might be determined to promote the interests of an organization or defeat enemies, respectively. These, as repeatedly warned through science fiction, could become runaway entities that no longer listen to human orders (p. 83–88, 122–127).

A more distant possibility explored in Berleant’s book is the colonization of other planets in the solar system (p. 97–121, 169–174). There is the well-taken point that technological pioneers should already be trying to settle remote and inhospitable locations on Earth, to perfect the technology and society of self-sustaining settlements (Antarctica?) (p. 106). Disaster scenarios considered in the book that may necessitate us moving off-world in the long term include a hydrogen sulfide poisoning apocalypse (p. 142–146) and a giant asteroid impact (p. 231–236).

The Human Race to the Future is a realistic and practical guide to the dilemmas fundamental to the human future. Of particular interest to general readers, policymakers and activists should be the issues that concern the near future, such as genetic engineering aimed at conservation of resources and the achievement of abundance.

Private space exploration is gaining a lot of attention in the media today. It is expected to be the next big thing after social media, technology, and probably biofuels. Can we take this further? With DARPA sponsoring the formation of the 100 Year Starship Study (100YSS) in 2011, can we do interstellar propulsion in our lifetimes?

The Xodus One Foundation thinks this is feasible. To that end the Foundation has started the KickStarter project Ground Zero of Interstellar Propulsion to fund and accelerate this research. This project ends Fri, May 9 2014 7:39 AM MDT.

The community of interstellar propulsion researchers can be categorized into three groups: those who believe it cannot be done (Nay Sayers Group – NSG), those who believe that it requires some advanced form of conventional rockets (Advanced Rocket Group – ARG), and those who believe that it needs new physics (New Physics Group – NPG).

The Foundation belongs to the third group, the New Physics Group. The discovery in 2007 of the new massless formula for gravitational acceleration, g = τc^2, where τ is the change in time dilation over a specific height divided by that height, led to the inference that there is a new physics for interstellar propulsion that is waiting to be discovered.
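
As a rough numerical illustration (not taken from the Foundation's own material), the relation g = τc^2 can be compared with the standard weak-field approximation, in which a clock raised by a height h runs faster by a fraction of roughly gh/c^2, so that the change per metre is about g/c^2. The function names below are hypothetical, and 9.81 m/s^2 is an assumed value for gravity at the Earth's surface.

```python
# Minimal sketch, assuming the weak-field approximation tau ≈ g / c^2.
# Function names are illustrative only; they do not come from the article.

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)


def tau_from_g(g: float) -> float:
    """Fractional change in time dilation per metre of height for acceleration g."""
    return g / C**2


def g_from_tau(tau: float) -> float:
    """Gravitational acceleration implied by g = tau * c^2."""
    return tau * C**2


g_earth = 9.81  # m/s^2, assumed surface value
tau_earth = tau_from_g(g_earth)

print(f"tau near Earth's surface: {tau_earth:.3e} per metre")
print(f"g recovered from tau:     {g_from_tau(tau_earth):.2f} m/s^2")
```

The point of the sketch is only to show the scale involved: the time-dilation gradient near the Earth's surface is on the order of 10^-16 per metre, which is why the effect is negligible in everyday life.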

What would this physics look like if nothing can travel faster than light? Founder & Chairman, Benjamin T Solomon, of the Xodus One Foundation believes that the answer lies in our understanding of photon probability. Can we discover enough physics to figure out how to control photon probability?

To facilitate this discovery one can participate in the Ground Zero of Interstellar Propulsion. If Solomon is right …