A team of researchers from the Harbin Institute of Technology and their partners at the First Affiliated Hospital of Harbin Medical University, both in China, has developed a tiny robot that can ferry cancer drugs through the blood-brain barrier (BBB) without setting off an immune reaction. In their paper published in the journal Science Robotics, the group describes the robot and the tests they conducted with mice. Junsun Hwang and Hongsoo Choi, with the Daegu Gyeongbuk Institute of Science and Technology in Korea, have published a Focus piece on the Chinese team's work in the same issue of the journal.

For many years, medical scientists have sought ways to deliver drugs to the brain to treat conditions such as brain cancer. Because the brain is protected by the skull, injecting drugs directly into it is extremely difficult. Researchers have also been stymied by the BBB, a filtering mechanism in the capillaries that supply blood to the brain and spinal cord, which blocks most foreign substances from entering. Simply injecting drugs into the bloodstream is therefore not an option. In this new effort, the researchers used a type of immune cell that naturally passes through the BBB to carry drugs into the brain.

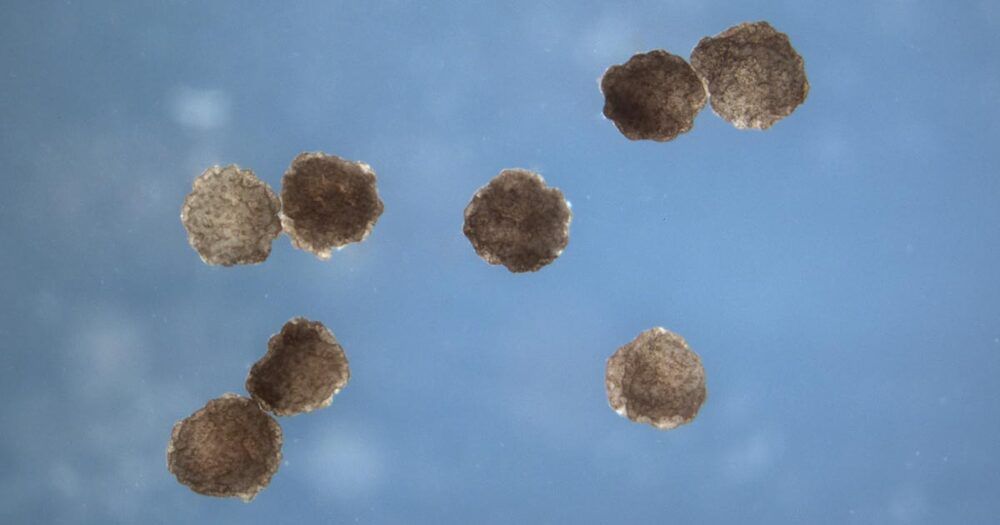

To build their tiny robots, the researchers exposed groups of white blood cells called neutrophils to drug-loaded magnetic nanogel particles coated with fragments of E. coli material. The neutrophils engulfed the particles, mistaking them for E. coli bacteria, and the resulting loaded cells served as the microrobots. The microrobots were then injected into the bloodstream of a test mouse with a cancerous tumor, and the team applied a magnetic field to steer them toward the brain. Because the immune system identified the robots as ordinary neutrophils, it did not attack them, and they passed through the BBB into the brain and the tumor. Once there, the robots released their cancer-fighting drugs.