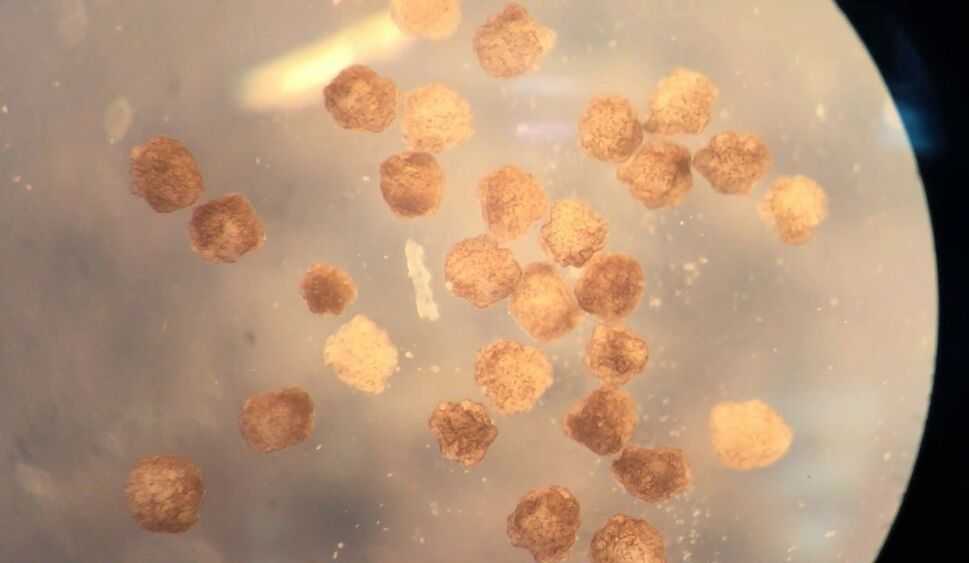

TAE Technologies, the California, USA-based fusion energy technology company, has announced that its proprietary beam-driven field-reversed configuration (FRC) plasma generator has produced stable plasma at over 50 million degrees Celsius. The milestone has helped the company raise USD280 million in additional funding.

Norman, TAE’s USD150 million National Laboratory-scale device, is named after the company’s founder, the late Norman Rostoker. It was unveiled in May 2017 and reached first plasma in June of that year. The device achieved the latest milestone as part of a “well-choreographed sequence of campaigns” consisting of over 25,000 fully integrated fusion reactor core experiments. These experiments were optimised with the most advanced computing processes available, including machine learning from an ongoing collaboration with Google (which produced the Optometrist Algorithm) and processing power from the US Department of Energy’s INCITE programme that leverages exascale-level computing.

Plasma must be hot enough to enable collisions forceful enough to cause fusion, and it must sustain itself long enough for the power to be harnessed at will. These are known as the ‘hot enough’ and ‘long enough’ milestones. TAE said it had proved the ‘long enough’ component in 2015, after more than 100,000 experiments. A year later, the company began building Norman, its fifth-generation device, to further test plasma temperature increases in pursuit of ‘hot enough’.