
Like other militaries, the Israel Defense Forces (IDF) for years looked like a single organization from the outside, but its services were balkanized, each using different networks and data services, an IDF digital leader said in an interview.

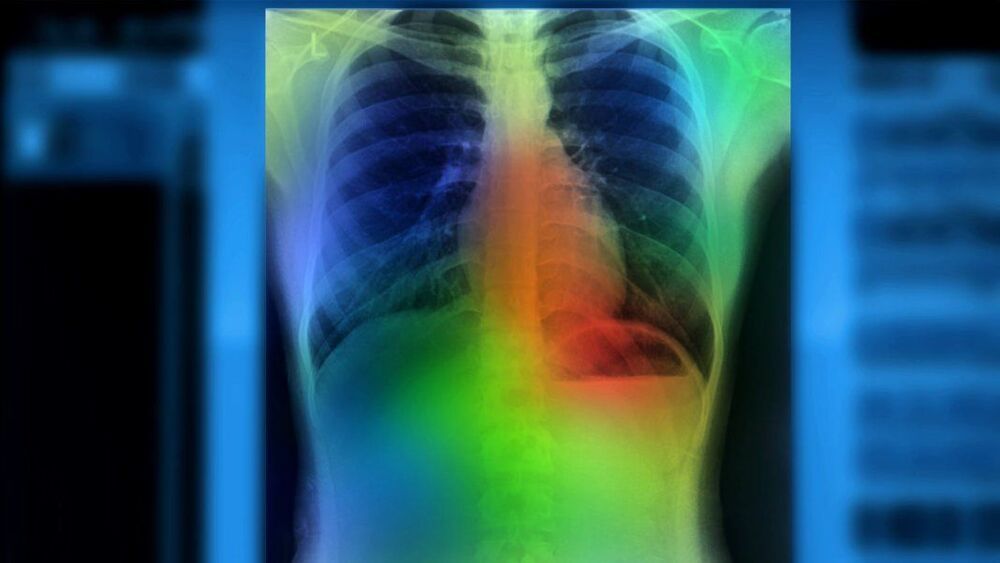

A transformative artificial intelligence (AI) tool called AlphaFold, developed by DeepMind, Google’s sister company in London, has predicted the structure of nearly the entire human proteome (the full complement of proteins expressed by an organism). The tool has also predicted almost complete proteomes for a range of other organisms, from mice and maize (corn) to the malaria parasite.

The more than 350,000 protein structures, which are available through a public database, vary in their accuracy. But researchers say the resource, which is set to grow to 130 million structures by the end of the year, has the potential to revolutionize the life sciences.

“Killer robots” may seem far-fetched, but as @AlexGatopoulos explains, autonomous machines and other military applications of artificial intelligence are a growing reality of modern warfare.


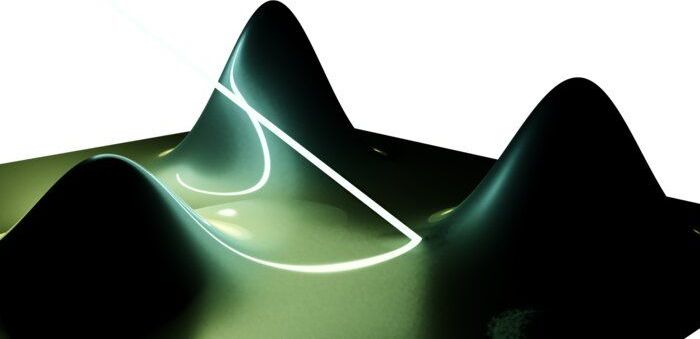

Experimental facilities around the globe are facing a challenge: their instruments are becoming increasingly powerful, steadily increasing the volume and complexity of the scientific data they collect. At the same time, these tools demand new, advanced algorithms to take advantage of their capabilities and to let ever-more intricate scientific questions be asked, and answered. For example, the ALS-U project to upgrade the Advanced Light Source facility at Lawrence Berkeley National Laboratory (Berkeley Lab) will produce soft X-ray light 100 times brighter and will feature superfast detectors, leading to a vast increase in data-collection rates.

To make full use of modern instruments and facilities, researchers need new ways to reduce the amount of data required for scientific discovery and to cope with data-acquisition rates that humans can no longer keep pace with. A promising route lies in an emerging field known as autonomous discovery, in which algorithms learn from comparatively little input data and decide for themselves which steps to take next, allowing multi-dimensional parameter spaces to be explored more quickly and efficiently, with minimal human intervention.

“More and more experimental fields are taking advantage of this new optimal and autonomous data acquisition because, when it comes down to it, it’s always about approximating some function, given noisy data,” said Marcus Noack, a research scientist in the Center for Advanced Mathematics for Energy Research Applications (CAMERA) at Berkeley Lab and lead author on a new paper on Gaussian processes for autonomous data acquisition published July 28 in Nature Reviews Physics. The paper is the culmination of a multi-year, multinational effort led by CAMERA to introduce innovative autonomous discovery techniques across a broad scientific community.
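Noack’s point that autonomous acquisition is “always about approximating some function, given noisy data” can be made concrete with a small example. The following is a minimal, hypothetical sketch, not the method from the Nature Reviews Physics paper: it fits a zero-mean Gaussian process with an assumed squared-exponential kernel, length scale and noise level, then chooses each next measurement where the posterior variance is largest (pure uncertainty sampling, one of the simplest acquisition rules). The `measure` function stands in for a real instrument; it and all other names here are illustrative assumptions.

```python
import math
import random

# Hypothetical hyperparameters, chosen for illustration only.
LENGTH_SCALE = 0.2
NOISE = 1e-2


def rbf(a, b):
    """Squared-exponential (RBF) kernel between two scalar inputs."""
    return math.exp(-0.5 * ((a - b) / LENGTH_SCALE) ** 2)


def cholesky(A):
    """Return lower-triangular L with A = L L^T (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def solve_upper_t(L, y):
    """Back substitution: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(X, Y, x_star):
    """Posterior mean and variance of a zero-mean GP at x_star, given noisy data."""
    K = [[rbf(a, b) + (NOISE if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, Y))  # alpha = K^{-1} Y
    k_star = [rbf(x_star, a) for a in X]
    mean = sum(k * w for k, w in zip(k_star, alpha))
    v = solve_lower(L, k_star)  # v = L^{-1} k_star
    var = rbf(x_star, x_star) - sum(vi * vi for vi in v)
    return mean, max(var, 0.0)


def autonomous_scan(measure, candidates, n_steps, start):
    """Uncertainty sampling: always measure next where the GP is least certain."""
    X, Y = [start], [measure(start)]
    for _ in range(n_steps):
        remaining = [c for c in candidates if c not in X]  # skip repeat positions
        if not remaining:
            break
        variances = [gp_posterior(X, Y, c)[1] for c in remaining]
        x_next = remaining[variances.index(max(variances))]
        X.append(x_next)
        Y.append(measure(x_next))
    return X, Y


# Usage: a hypothetical noisy "instrument" scanned over a 1-D parameter grid.
random.seed(0)


def measure(x):
    return math.sin(6 * x) + random.gauss(0, 0.05)


grid = [i / 50 for i in range(51)]
X, Y = autonomous_scan(measure, grid, n_steps=8, start=0.5)
print("measured positions:", X)
```

With fixed hyperparameters the posterior variance depends only on where measurements were taken, not on their values, so this rule first jumps to the edges of the range and then fills in the largest gaps. Practical systems typically use richer acquisition functions that also weigh the measured values.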

Wiliot, the IoT startup behind a new kind of ultra-thin, lightweight processor that runs on ambient power yet possesses all the power of a “computer,” has picked up a huge round of growth funding on the back of strong interest in its technology and a strategy aimed squarely at scale.

The company has raised $200 million in a Series C that it will put toward its next steps as a business. In the coming months, it will move to a SaaS model for running and selling its software; Wiliot likes to say that SaaS here stands not for “software as a service” but for “sensing as a service,” since it uses its AI to read and translate different signals from the object the chip is attached to. This will be combined with a shift to a licensing model for its chip hardware, so that the chips can be produced by multiple third parties. Wiliot says it already has several agreements in place for the chip licensing. The plan is for this, in turn, to lead to a new range of sizes and form factors for the chips down the line.

SoftBank’s Vision Fund 2 led the financing, with participation from previous backers including 83North, Amazon Web Services, Inc. (AWS), Avery Dennison, Grove Ventures, M Ventures (the corporate VC of Merck KGaA), Maersk Growth, Norwest Venture Partners, NTT DOCOMO Ventures, Qualcomm Ventures LLC, Samsung Venture Investment Corp., Vintage Investment Partners and Verizon Ventures, a pretty illustrious list that speaks to the opportunities ahead.


Cassie, a bipedal robot that’s all legs, has successfully run five kilometers on a single charge without a tether. The machine serves as the basis for Agility Robotics’ delivery robot Digit, as TechCrunch notes, though you may also remember it for “blindly” navigating a set of stairs: Oregon State University engineers had trained Cassie in a simulator to go up and down a flight of stairs without the use of cameras or LIDAR. Now the same team has trained Cassie to run using a deep reinforcement learning algorithm.

According to the team, teaching itself with this technique gave Cassie the ability to stay upright without a tether by shifting its balance while running. The robot had to learn to make countless subtle adjustments to accomplish the feat. Yesh Godse, an undergraduate in the OSU Dynamic Robotics Laboratory, explained: “Deep reinforcement learning is a powerful method in AI that opens up skills like running, skipping and walking up and down stairs.”

The team first tested Cassie by having it run five kilometers, which it finished in 43 minutes and 49 seconds. Cassie’s run across the OSU campus took 53:03; the longer time included six and a half minutes spent dealing with technical issues. The robot fell once when a computer overheated and again after it executed a turn too quickly. Still, Jeremy Dao, another member of the lab’s team, said they were able to “reach the limits of the hardware and show what it can do.” The team’s work could expand the understanding of legged locomotion and help make bipedal robots more common in the future.

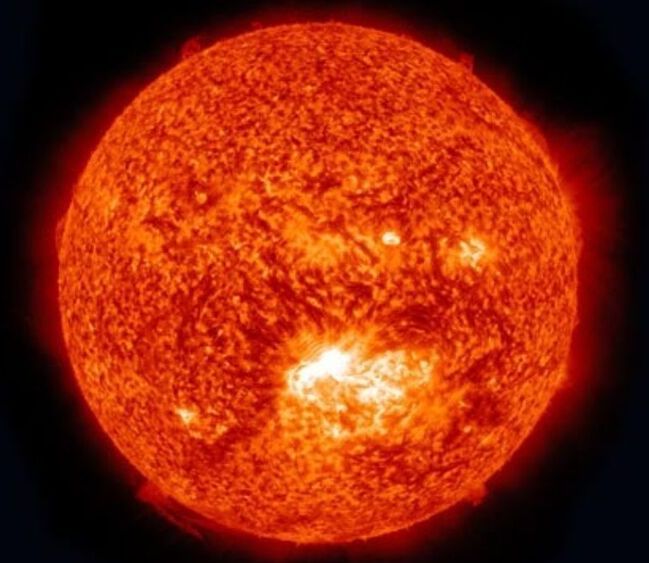

Researchers at the National Aeronautics and Space Administration (NASA) recently announced that the agency is using artificial intelligence to calibrate images of the Sun.

NASA launched its Solar Dynamics Observatory (SDO) back in early 2010 to conduct research and capture high-definition images of the Sun.

NASA uses the Atmospheric Imaging Assembly (AIA) aboard SDO to capture images of the Sun across various wavelengths of ultraviolet light every 12 seconds. The new AI-powered technique helps scientists calibrate these images precisely and quickly, so that they yield accurate, usable data.

A trio of researchers, Zhi Wang, Chaoge Liu and Xiang Cui, has found that it is possible to hide malware code inside AI neural networks. They have posted a paper describing their experiments with injecting code into neural networks on the arXiv preprint server.

As computer technology grows ever more complex, so do criminals’ attempts to break into machines running it for their own purposes, such as destroying data or encrypting it and demanding payment from users for its return. In the new study, the team found a new way to infect certain kinds of computer systems running artificial intelligence applications.

Neural networks process data in a way loosely inspired by the human brain. But such networks, the research trio found, are vulnerable to infiltration by foreign code.