The overturned ruling bucks the trend we’ve previously seen in the US and UK.

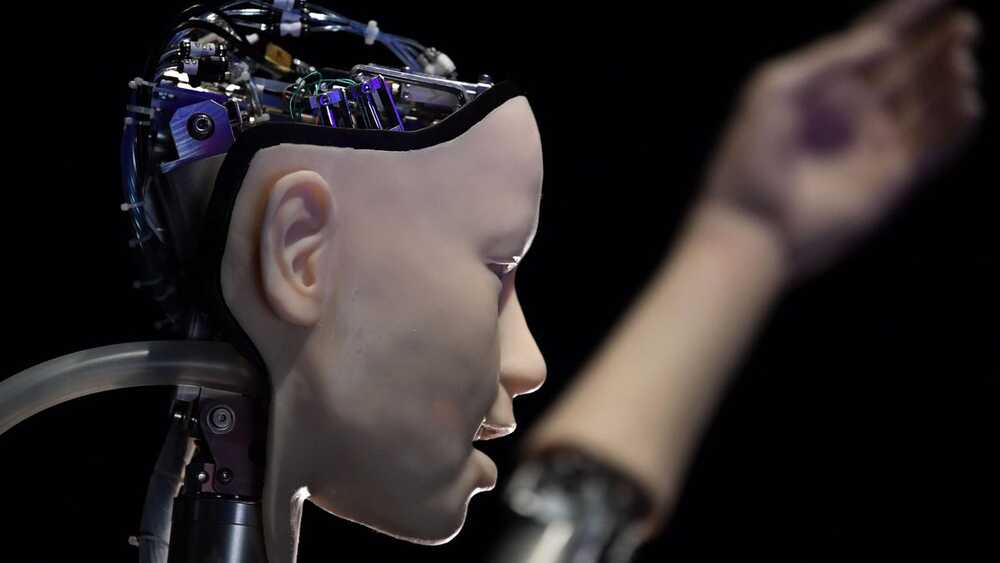

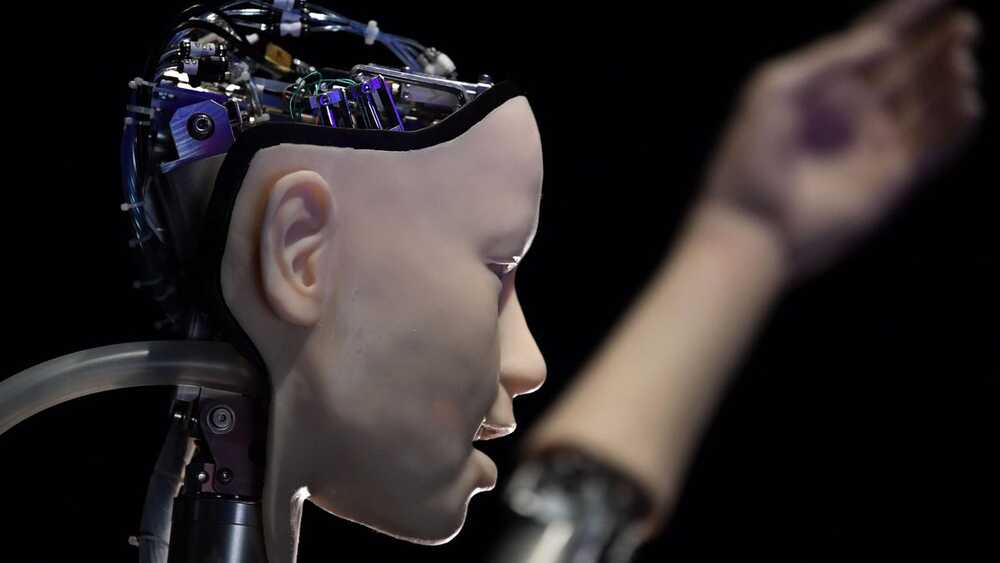

Granted, it’s a little different for robots, since they don’t have lungs or a heart. But they do have a “brain” (software), “muscles” (hardware), and “fuel” (a battery), and these all had to work together for Cassie to be able to run.

The brunt of the work fell to the brain—in this case, a machine learning algorithm developed by students at Oregon State University’s Dynamic Robotics Laboratory. Specifically, they used deep reinforcement learning, a method that mimics the way humans learn from experience by using a trial-and-error process guided by feedback and rewards. Over many repetitions, the algorithm uses this process to learn how to accomplish a set task. In this case, since it was trying to learn to run, it may have tried moving the robot’s legs varying distances or at distinct angles while keeping it upright.
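To make the trial-and-error idea concrete, here is a minimal sketch in Python. It is not the Oregon State team's algorithm and involves no deep network: it is a toy epsilon-greedy learner that picks among a handful of candidate stride angles and keeps a running average of the reward each one earns. The function names, the candidate angles, and the reward function (which assumes, purely for illustration, that 30 degrees is the best stride) are all hypothetical.

```python
import random


def learn_by_trial_and_error(actions, reward_fn, episodes=2000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy learner: try actions, observe rewards, favour what worked."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}  # running average reward per action
    count = {a: 0 for a in actions}    # how often each action has been tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.choice(actions)
        else:
            # Exploit: otherwise pick the action with the best estimate so far.
            action = max(actions, key=lambda a: value[a])
        reward = reward_fn(action, rng)
        count[action] += 1
        # Incremental update of the running average for the chosen action.
        value[action] += (reward - value[action]) / count[action]
    best = max(actions, key=lambda a: value[a])
    return best, value


def toy_stride_reward(angle_deg, rng):
    """Hypothetical feedback signal: best at 30 degrees, with a little noise."""
    return -abs(angle_deg - 30) / 10.0 + rng.gauss(0, 0.1)


best_angle, estimates = learn_by_trial_and_error([10, 20, 30, 40, 50], toy_stride_reward)
print("preferred stride angle:", best_angle)
```

A real locomotion controller would replace the lookup table with a neural network and the five fixed angles with continuous joint commands, but the loop of acting, receiving a reward, and updating estimates is the same basic pattern.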

Once Cassie got a good gait down, completing the 5K was as much a matter of battery life as running prowess. The robot covered the whole distance (a course circling around the university campus) on a single battery charge in just over 53 minutes, but that did include six and a half minutes of troubleshooting; the computer had to be reset after it overheated, as well as after Cassie fell during a high-speed turn. But hey, an overheated computer getting reset isn’t so different from a human runner pausing to douse their head and face with a cup of water to cool off, or chug some water to rehydrate.
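As a rough worked example of what those numbers imply, the sketch below computes Cassie's average speed with and without the troubleshooting time. It assumes a total of exactly 53 minutes (the text says "just over 53") and a course of exactly 5 km, so the results are approximations: roughly 5.7 km/h overall and about 6.5 km/h while actually moving.

```python
DISTANCE_KM = 5.0        # assumed exact 5K course length
TOTAL_MIN = 53.0         # "just over 53 minutes", rounded down (assumption)
TROUBLESHOOT_MIN = 6.5   # time spent on resets, per the article


def speed_kmh(distance_km, minutes):
    """Average speed in km/h for a distance covered in the given minutes."""
    return distance_km / (minutes / 60.0)


moving_min = TOTAL_MIN - TROUBLESHOOT_MIN
overall = speed_kmh(DISTANCE_KM, TOTAL_MIN)
moving = speed_kmh(DISTANCE_KM, moving_min)
print(f"{overall:.2f} km/h overall, {moving:.2f} km/h while moving")
```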

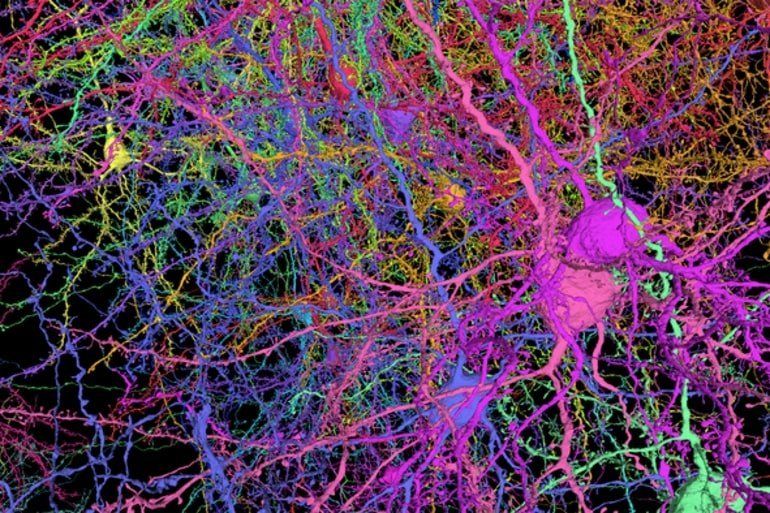

Summary: Researchers have compiled a new, highly detailed 3D brain map that captures the shapes and activity of neurons in the visual neocortex of mice. The map is freely available for neuroscience researchers and artificial intelligence specialists to utilize.

Source: Allen Institute

Researchers from the University of Reading, in the UK, are using drones to give clouds an electrical charge, which could help increase rainfall in water-stressed regions.

At this point I think the US government is going to get stuck paying to develop human-level robotic hands.

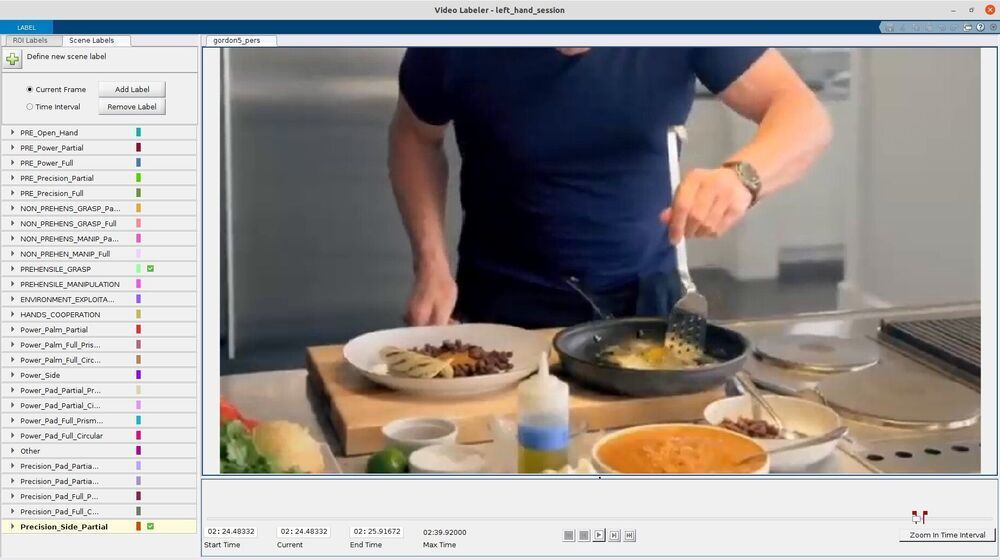

Over the past few decades, roboticists and computer scientists have developed a variety of data-based techniques for teaching robots how to complete different tasks. To achieve satisfactory results, however, these techniques should be trained on reliable and large datasets, preferably labeled with information related to the task they are learning to complete.

For instance, when trying to teach robots to complete tasks that involve the manipulation of objects, these techniques could be trained on videos of humans manipulating objects, which should ideally include information about the types of grasps they are using. This allows the robots to easily identify the strategies they should employ to grasp or manipulate specific objects.

Researchers at the University of Pisa, Istituto Italiano di Tecnologia, Alpen-Adria-Universität Klagenfurt and TU Delft recently developed a new taxonomy to label videos of humans manipulating objects. This grasp classification method, introduced in a paper published in IEEE Robotics and Automation Letters, accounts for movements prior to the grasping of objects, for bi-manual grasps and for non-prehensile strategies.

Researchers at the University of Sydney and quantum control startup Q-CTRL today announced a way to identify sources of error in quantum computers through machine learning, providing hardware developers the ability to pinpoint performance degradation with unprecedented accuracy and accelerate paths to useful quantum computers.

A joint scientific paper detailing the research, titled “Quantum Oscillator Noise Spectroscopy via Displaced Cat States,” has been published in Physical Review Letters, the world’s premier physical science research journal and flagship publication of the American Physical Society (APS Physics).

Focused on reducing errors caused by environmental “noise” (the Achilles’ heel of quantum computing), the University of Sydney team developed a technique to detect the tiniest deviations from the precise conditions needed to execute quantum algorithms using trapped ion and superconducting quantum computing hardware. These are the core technologies used by world-leading industrial quantum computing efforts at IBM, Google, Honeywell, IonQ, and others.

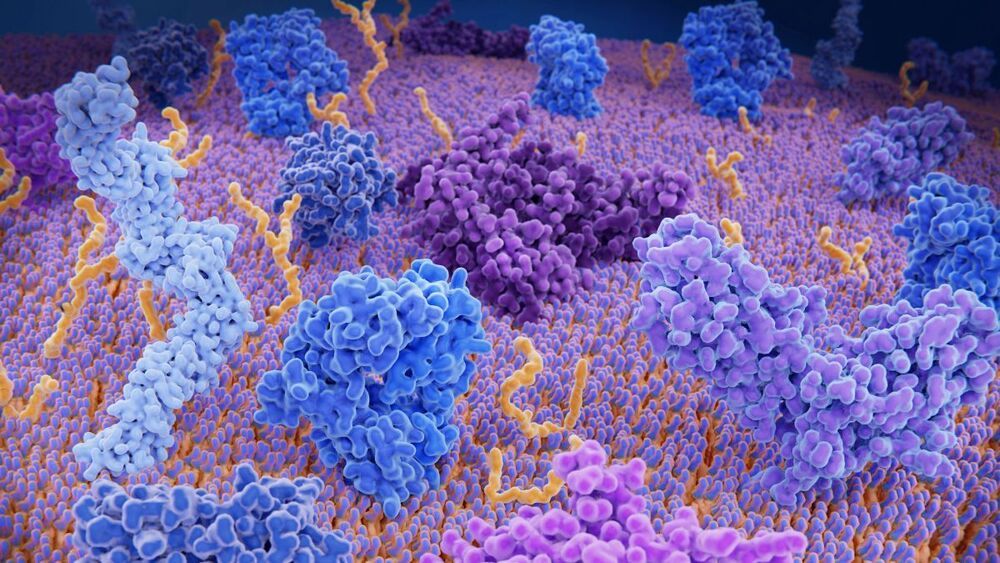

The predicted shapes still need to be confirmed in the lab, Ellis told Technology Review. If the results hold up, they will rapidly push forward the study of the proteome, or the proteins in a given organism. DeepMind researchers published their open-source code and laid out the method in two peer-reviewed papers published in Nature last week.

And in 20 other animals often studied by science, too.

CORVALLIS, Ore. – A two-legged robot invented at Oregon State University completed a 5K in just over 53 minutes. Cassie the robot, created by OSU spinout company Agility Robotics, made history with the successful trot. “Cassie, the first bipedal robot to use machine learning to control a running gait on outdoor terrain, completed the 5K on Oregon State’s campus untethered and on a single battery charge,” according to OSU. But it didn’t go off without a hitch.

The AlphaFold 2 paper and code have finally been released. This post aims to inspire new generations of Machine Learning (ML) engineers to focus on foundational biological problems.

This post is a collection of core concepts to finally grasp AlphaFold2-like stuff. Our goal is to make this blog post as self-contained as possible in terms of biology. Thus, in this article, you will learn about:

Only a few years ago, this might have sounded crazy, but it’s here now – the first head-to-head, high-speed race without the actual racing drivers. Autonomous vehicles will soon be competing against each other at the Indy Autonomous Challenge, an event that will probably be remembered for years to come.