CNBC got a rare look at Boston Dynamics’ office in Massachusetts to see two of the robots the company is working to commercialize: Spot and Stretch.

Oct 8 2021

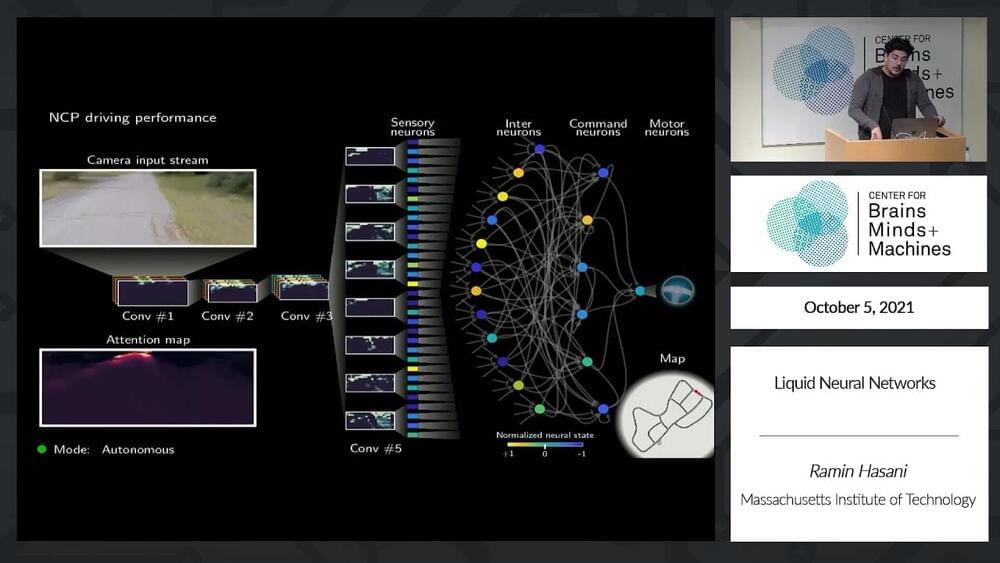


Ramin Hasani, MIT — intro by Daniela Rus, MIT

Abstract: In this talk, we will discuss the nuts and bolts of the novel continuous-time neural network models: Liquid Time-Constant (LTC) Networks. Instead of declaring a learning system’s dynamics by implicit nonlinearities, LTCs construct networks of linear first-order dynamical systems modulated via nonlinear interlinked gates. LTCs represent dynamical systems with varying (i.e., liquid) time-constants, with outputs being computed by numerical differential equation solvers. These neural networks exhibit stable and bounded behavior, yield superior expressivity within the family of neural ordinary differential equations, and give rise to improved performance on time-series prediction tasks compared to advanced recurrent network models.
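To make the abstract's description more concrete, here is a minimal NumPy sketch of a single LTC cell. It assumes the state equation dx/dt = -(1/τ + f(x, I))·x + f(x, I)·A and a fused semi-implicit Euler update, following our reading of the published LTC formulation (Hasani et al., "Liquid Time-constant Networks"). The class name `LTCCell`, the single sigmoid gate, the random weights, and parameters such as `unfolds` are hypothetical illustrative choices, not the authors' implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class LTCCell:
    """Illustrative liquid time-constant cell (a sketch, not the reference code).

    Assumed state equation:
        dx/dt = -(1/tau + f(x, I)) * x + f(x, I) * A
    where f is a sigmoid gate over the current state x and input I.
    """

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        # Hypothetical randomly initialized gate parameters.
        self.W_in = rng.normal(0.0, 0.5, size=(n_hidden, n_inputs))
        self.W_rec = rng.normal(0.0, 0.5, size=(n_hidden, n_hidden))
        self.b = np.zeros(n_hidden)
        self.tau = np.ones(n_hidden)  # base time constants, must be positive
        self.A = np.ones(n_hidden)    # per-neuron bias (target) values

    def gate(self, x, I):
        # Nonlinear gate f(x, I) in (0, 1) that modulates each neuron's dynamics.
        return sigmoid(self.W_rec @ x + self.W_in @ I + self.b)

    def effective_tau(self, x, I):
        # The "liquid" time constant: tau / (1 + tau * f(x, I)).
        return self.tau / (1.0 + self.tau * self.gate(x, I))

    def step(self, x, I, dt=0.1, unfolds=6):
        # Advance the ODE by dt using `unfolds` fused semi-implicit Euler steps:
        #   x <- (x + h * f * A) / (1 + h * (1/tau + f)),  with h = dt / unfolds
        h = dt / unfolds
        for _ in range(unfolds):
            f = self.gate(x, I)
            x = (x + h * f * self.A) / (1.0 + h * (1.0 / self.tau + f))
        return x


if __name__ == "__main__":
    cell = LTCCell(n_inputs=2, n_hidden=4)
    x = np.zeros(4)
    for t in range(50):
        I = np.array([np.sin(0.2 * t), np.cos(0.2 * t)])  # toy input signal
        x = cell.step(x, I)
    print("final state:", x)
    print("effective time constants:", cell.effective_tau(x, I))
```

Because the gate f lies in (0, 1), the effective time constant τ/(1 + τ·f) changes with the input and always stays below τ, which is the "liquid" part. With the state started at zero and A = 1, each fused step keeps the state inside [0, 1], a small instance of the bounded behavior the abstract mentions.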

Speaker Biographies:

Dr. Daniela Rus is the Andrew (1956) and Erna Viterbi Professor of Electrical Engineering and Computer Science and Director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT. Rus’s research interests are in robotics, mobile computing, and data science. Rus is a Class of 2002 MacArthur Fellow, a fellow of ACM, AAAI, and IEEE, and a member of the National Academy of Engineering and the American Academy of Arts and Sciences. She earned her PhD in Computer Science from Cornell University. Prior to joining MIT, Rus was a professor in the Computer Science Department at Dartmouth College.

Dr. Ramin Hasani is a postdoctoral associate and machine learning scientist at MIT CSAIL. His primary research focus is the development of interpretable deep learning and decision-making algorithms for robots. Ramin received his Ph.D. with honors in Computer Science from TU Wien, Austria. His dissertation on liquid neural networks was co-advised by Prof. Radu Grosu (TU Wien) and Prof. Daniela Rus (MIT). Ramin is a frequent TEDx speaker. He completed an M.Sc. in Electronic Engineering at Politecnico di Milano, Italy (2015), and received his B.Sc. in Electrical Engineering – Electronics from Ferdowsi University of Mashhad, Iran (2012).

Researchers at the California Institute of Technology (Caltech) have built a bipedal robot that combines walking with flying to create a new type of locomotion, making it exceptionally nimble and capable of complex movements.

Part walking robot, part flying drone, the newly developed LEONARDO (LEgs ONboARD drOne), or LEO for short, can walk a slackline, hop, and even ride a skateboard. Developed by a team at Caltech’s Center for Autonomous Systems and Technologies (CAST), LEO is the first robot that uses multi-joint legs and propeller-based thrusters to achieve fine control over its balance.

“We drew inspiration from nature. Think about the way birds are able to flap and hop to navigate telephone lines,” explained Soon-Jo Chung, Professor of Aerospace and Control and Dynamical Systems. “A complex yet intriguing behaviour happens as birds move between walking and flying. We wanted to understand and learn from that.”

Sophia’s artificial intelligence lets her expand her knowledge and language skills using input from her sensors and cameras. This ‘sensitivity’ system captures the information it receives from her surroundings and replicates human behaviors, including gestures, as naturally as possible. Her ‘desire’ to have a baby and start a family is therefore simply the result of programming designed to imitate social behavior.

This is not the first time Sophia has been at the center of a controversy. In 2017, when she was granted Saudi Arabian citizenship, many people protested that, even though she is a robot, she had more rights than human women in that country.

In another conversation with her creator, David Hanson, Sophia said that she would destroy humans.

This week, the European Parliament, the body responsible for adopting European Union (EU) legislation, passed a non-binding resolution calling for a ban on law enforcement use of facial recognition technology in public places. The resolution, which also proposes a moratorium on the deployment of predictive policing software, would restrict the use of remote biometric identification to fighting “serious” crime, such as kidnapping and terrorism.

The approach stands in contrast to that of U.S. agencies, which continue to embrace facial recognition even in light of studies showing the potential for ethnic, racial, and gender bias. A recent report from the U.S. Government Accountability Office found that 10 agencies, including the Departments of Agriculture, Commerce, Defense, and Homeland Security, plan to expand their use of facial recognition between 2020 and 2023 as they implement as many as 17 different facial recognition systems.

Commercial face-analyzing systems have been critiqued by scholars and activists alike throughout the past decade, if not longer. The technology and techniques — everything from sepia-tinged film to low-contrast digital cameras — often favor lighter skin, encoding racial bias in algorithms. Indeed, independent benchmarks of vendors’ systems by the Gender Shades project and others have revealed that facial recognition technologies are susceptible to a range of prejudices exacerbated by misuse in the field. For example, a report from Georgetown Law’s Center on Privacy and Technology details how police feed facial recognition software flawed data, including composite sketches and pictures of celebrities who share physical features with suspects.

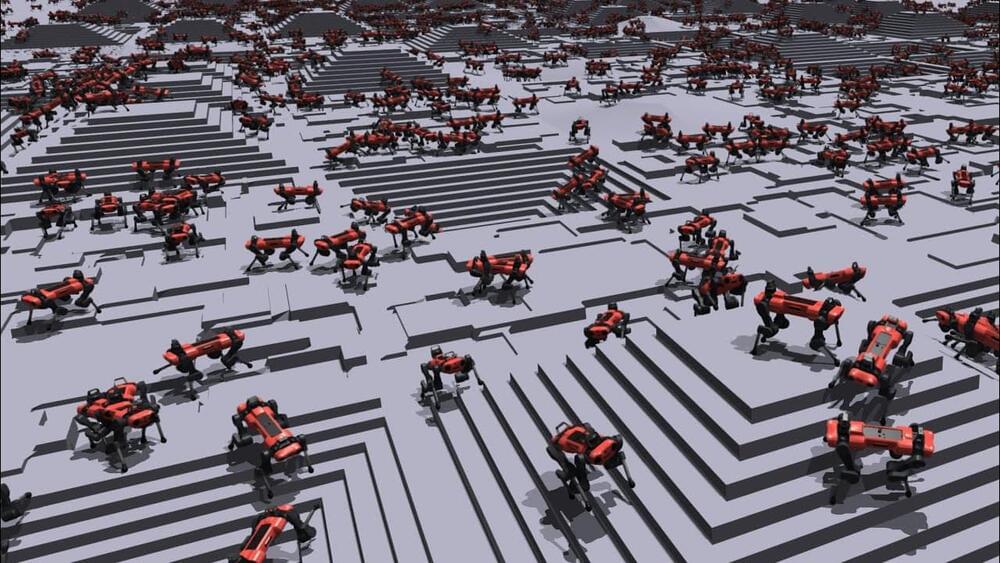

A virtual army of 4,000 doglike robots was used to train an algorithm capable of improving the legwork of real-world robots, according to an initial report from Wired. The new skills learned in simulation could soon be put to use in a neighborhood near you.

During training, the robots learned to walk up and down stairs without much struggle, but slopes threw them a curve: few grasped the essentials of sliding down one. Once the final algorithm was transferred to a real-world ANYmal, a four-legged doglike robot with sensors in its head and a detachable robot arm, the robot successfully navigated blocks and stairs but had trouble at higher speeds.


Vertical Aerospace has already collected roughly 1,000 orders for its VA-X4, a piloted, all-electric, low-emission eVTOL craft that can carry up to four passengers and a pilot. This air taxi is capable of flying at speeds of 200 mph (174 knots) and has a range of more than 100 miles (160 km).

Being all-electric, it is near-silent during flight, offers a low-carbon solution to flying, and has a relatively low cost per passenger mile.

Vertical Aerospace’s VA-X4 also uses the latest advanced avionics, some of which are used to control the world’s only supersonic VTOL aircraft, the F-35 fighter. Such sophisticated control systems enable the eVTOL taxi to fly with a high level of automation and reduced pilot workload.

In the latest episode of MIT Technology Review’s podcast “In Machines We Trust,” we asked career and job-matching experts for practical tips on how to succeed in a job market increasingly influenced by artificial intelligence.

Once you optimize your résumé, you may want to practice interviewing with an AI too.

Jeffrey Shainline is a physicist at NIST. Please support this podcast by checking out our sponsors:

- Stripe: https://stripe.com.

- Codecademy: https://codecademy.com and use code LEX to get 15% off.

- Linode: https://linode.com/lex to get $100 free credit.

- BetterHelp: https://betterhelp.com/lex to get 10% off.

Note: Opinions expressed by Jeff do not represent NIST.

EPISODE LINKS:

Jeff’s Website: http://www.shainline.net.

Jeff’s Google Scholar: https://scholar.google.com/citations?user=rnHpY3YAAAAJ

Jeff’s NIST Page: https://www.nist.gov/people/jeff-shainline.

PODCAST INFO:

Podcast website: https://lexfridman.com/podcast.

Apple Podcasts: https://apple.co/2lwqZIr.

Spotify: https://spoti.fi/2nEwCF8

RSS: https://lexfridman.com/feed/podcast/

Full episodes playlist: https://www.youtube.com/playlist?list=PLrAXtmErZgOdP_8GztsuKi9nrraNbKKp4

Clips playlist: https://www.youtube.com/playlist?list=PLrAXtmErZgOeciFP3CBCIEElOJeitOr41

OUTLINE:

0:00 — Introduction.

0:44 — How are processors made?

20:02 — Are engineers or physicists more important?

22:31 — Superconductivity.

38:18 — Computation.

42:55 — Computation vs communication.

46:36 — Electrons for computation and light for communication.

57:19 — Neuromorphic computing.

1:22:11 — What is NIST?

1:25:28 — Implementing superconductivity.

1:33:08 — The future of neuromorphic computing.

1:52:41 — Loop neurons.

1:58:57 — Machine learning.

2:13:23 — Cosmological evolution.

2:20:32 — Cosmological natural selection.

2:37:53 — Life in the universe.

2:45:40 — The rare Earth hypothesis.

SOCIAL:

- Twitter: https://twitter.com/lexfridman.

- LinkedIn: https://www.linkedin.com/in/lexfridman.

- Facebook: https://www.facebook.com/lexfridman.

- Instagram: https://www.instagram.com/lexfridman.

- Medium: https://medium.com/@lexfridman.

- Reddit: https://reddit.com/r/lexfridman.

- Support on Patreon: https://www.patreon.com/lexfridman