DNA contains the genetic information that influences everything from eye color to susceptibility to illnesses and disorders. Genes, of which the human genome contains roughly 20,000, carry out a wide range of vital functions in our cells. Yet together they make up less than 2% of the genome. The remaining base pairs are referred to as “non-coding.” They contain less well-understood instructions about when and where in the body genes should be expressed.

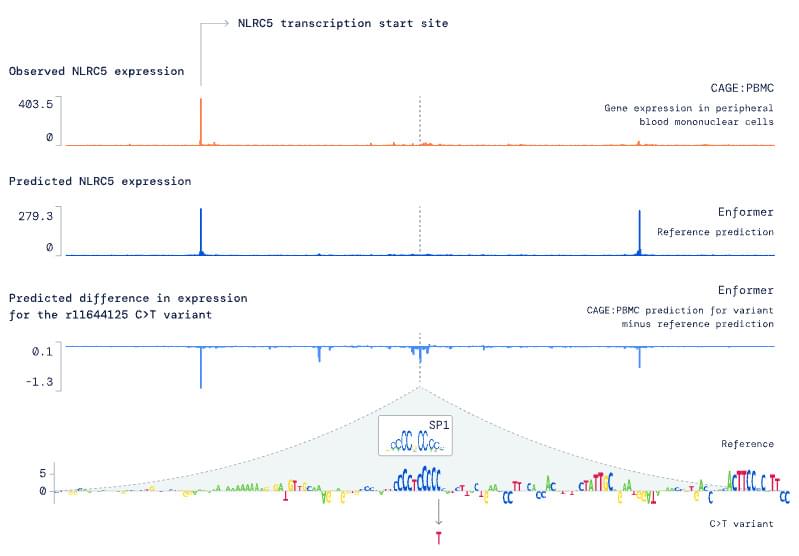

DeepMind, in collaboration with their Alphabet colleagues at Calico, introduces Enformer, a neural network architecture that accurately predicts gene expression from DNA sequences.
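A sequence model cannot read the letters A, C, G and T directly; the DNA first has to be turned into numbers. A common convention for convolutional sequence models is one-hot encoding, where each base becomes a row with a single 1 in one of four channels. The sketch below assumes that convention and an A, C, G, T channel order. It is an illustration, not Enformer's actual preprocessing code, and `one_hot_encode` is a hypothetical helper name.

```python
# Channel order A, C, G, T is an illustrative assumption.
BASES = "ACGT"
BASE_INDEX = {base: i for i, base in enumerate(BASES)}


def one_hot_encode(seq: str) -> list[list[float]]:
    """Encode a DNA string as a len(seq) x 4 matrix.

    Each row holds a single 1.0 in the column for its base; ambiguous
    bases such as 'N' are encoded as all zeros.
    """
    matrix = []
    for base in seq.upper():
        row = [0.0] * len(BASES)
        idx = BASE_INDEX.get(base)
        if idx is not None:
            row[idx] = 1.0
        matrix.append(row)
    return matrix


for row in one_hot_encode("ACGTN"):
    print(row)
```

Encoding an unknown base as all zeros lets the model treat it as "no information" instead of guessing a nucleotide.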

Earlier models of gene expression used convolutional neural networks as their key building blocks. However, their accuracy and usefulness have been limited by difficulty in modeling how distal enhancers, regulatory DNA elements that can lie far from the genes they control, influence gene expression. The new method builds on Basenji2, a model that can predict regulatory activity from DNA sequences of up to 40,000 base pairs.
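One way to see why distal enhancers are hard for a purely convolutional model is to compute its receptive field: the span of input base pairs that can influence a single output. Anything outside that span cannot affect the prediction at all. The sketch below applies the standard receptive-field recurrence to a stack of 1-D convolution and pooling layers. The layer configuration (`build_toy_cnn`) is entirely hypothetical and is not Basenji2's real architecture. The `element_in_view` check additionally assumes the gene sits at the centre of the receptive field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One 1-D layer; pooling is modelled as kernel_size == stride."""
    kernel_size: int
    dilation: int = 1
    stride: int = 1


def receptive_field(layers: list[Layer]) -> int:
    """Number of input base pairs that can influence a single output unit."""
    rf = 1    # one output unit starts by seeing one input position
    jump = 1  # spacing, in input bp, between neighbouring units at this depth
    for layer in layers:
        rf += (layer.kernel_size - 1) * layer.dilation * jump
        jump *= layer.stride
    return rf


def build_toy_cnn(n_dilated: int) -> list[Layer]:
    """Hypothetical model: a conv stem, 7 conv+pool blocks, then dilated convs."""
    layers = [Layer(kernel_size=15)]
    for _ in range(7):
        layers += [Layer(kernel_size=5), Layer(kernel_size=2, stride=2)]
    # Dilation doubles at each layer: 1, 2, 4, ...
    layers += [Layer(kernel_size=3, dilation=2 ** i) for i in range(n_dilated)]
    return layers


def element_in_view(layers: list[Layer], distance_bp: int) -> bool:
    """Assumes the gene sits at the centre of the receptive field."""
    return distance_bp <= (receptive_field(layers) - 1) // 2


if __name__ == "__main__":
    cnn = build_toy_cnn(n_dilated=7)
    print(receptive_field(cnn))          # 33162
    print(element_in_view(cnn, 10_000))  # True
    print(element_in_view(cnn, 50_000))  # False
```

Because each dilated layer doubles its dilation, the receptive field grows roughly exponentially with depth. It is still a hard cutoff, though: in this toy model an enhancer 50,000 bp from the gene has no path to influence the output, no matter how strong its real biological effect.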