Introduction

Moore’s Law is commonly quoted as saying that the number of transistors per square inch will double approximately every 18 months. This article shows how a range of new technologies is giving us a Virtual Moore’s Law, which holds that computer performance will at least double every 18 months for the foreseeable future.

This Virtual Moore’s Law is propelling us towards the Singularity, where the invention of artificial superintelligence will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization.

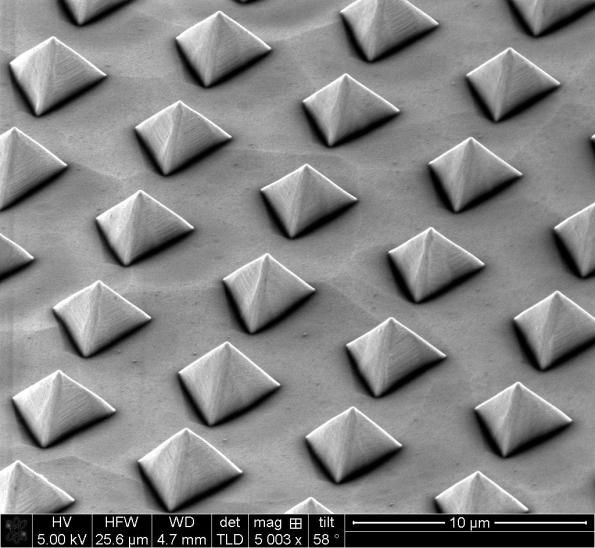

Going Vertical

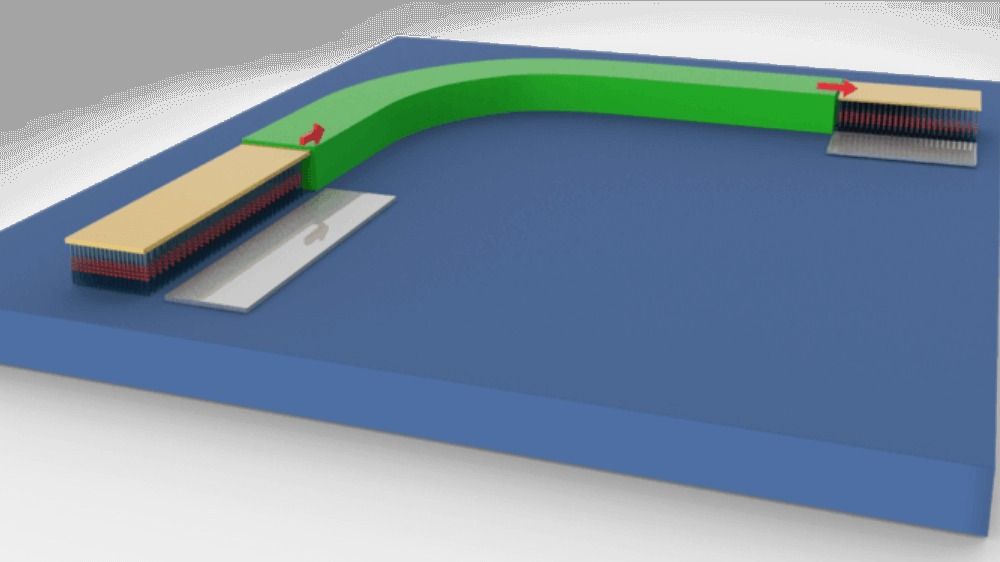

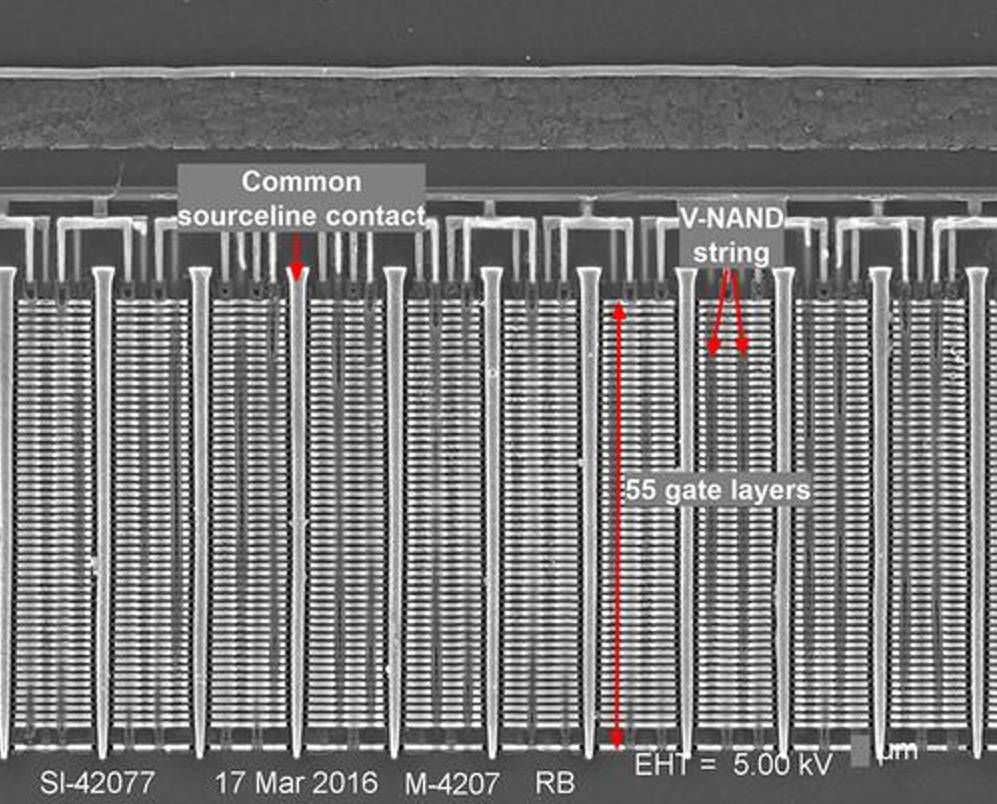

In the first of my “proof” articles two years ago, I described how it has become harder to miniaturize transistors, causing computing to go vertical instead. At that time, Samsung was mass-producing 24-layer 3D NAND chips and had announced 32-layer chips. As I write this, Samsung is mass-producing 48-layer 3D NAND chips, with 64-layer chips rumored to appear within a month or so. Even more importantly, by the end of 2017 the majority of NAND chips produced by all companies are expected to be 3D. Samsung and its competitors are currently working 24/7 to convert their 2D factories to 3D factories, a dramatic change in how NAND flash chips are made.

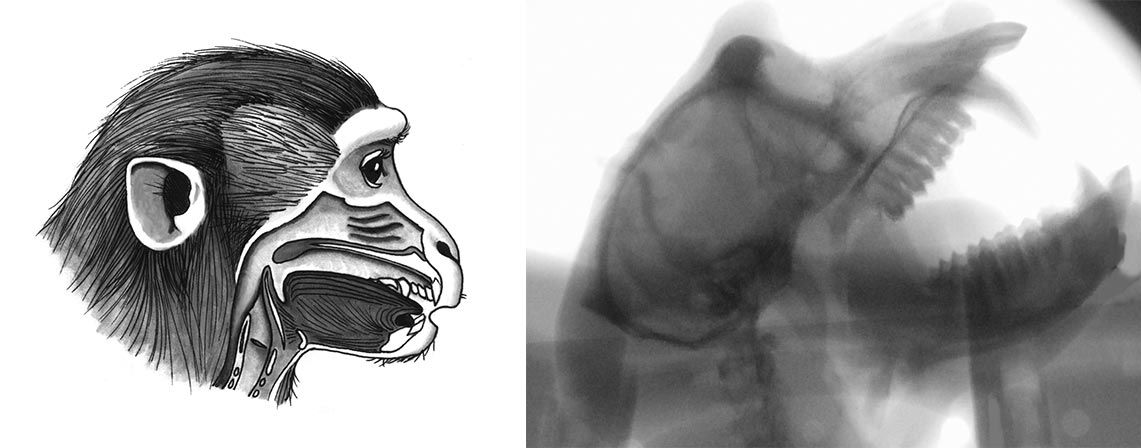

Cross section of 48-layer 3D NAND chip

Going Massively Parallel

Moore’s Law only describes the number of transistors per square inch; it doesn’t directly mean that a chip will run any faster. Unfortunately, since 2006 the performance of Intel’s CPUs has improved much more slowly than before, averaging about 10% a year. The cause is that the average Intel CPU has only 2 to 4 cores, and it has become difficult to speed up each individual core.
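To put that 10% figure in perspective, here is a rough sketch that compares it with the 18-month doubling pace. It assumes steady compound growth in both cases, which is a simplification, and the function names are my own for illustration.

```python
import math

def annual_growth_from_doubling(months_to_double):
    """Annual growth rate (as a fraction) implied by a fixed doubling time."""
    return 2 ** (12 / months_to_double) - 1

def doubling_time_years(annual_growth):
    """Years needed to double at a fixed annual growth rate (as a fraction)."""
    return math.log(2) / math.log(1 + annual_growth)

# Doubling every 18 months, expressed as a yearly percentage gain.
moore_rate = annual_growth_from_doubling(18)

# How long a steady 10% yearly gain takes to double performance.
cpu_doubling = doubling_time_years(0.10)

print(f"Doubling every 18 months is about {moore_rate:.1%} per year")
print(f"10% per year doubles performance in about {cpu_doubling:.1f} years")
```

Under these assumptions, a chip line improving 10% a year needs a little over seven years to double, while the 18-month pace works out to nearly 59% a year.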

Nvidia has been promoting a different architecture, which uses thousands of cores per chip. In 2016, this idea was a huge success: the company soared in value, with Nvidia’s market capitalization reaching about one third of Intel’s. (This is impressive, as Intel is a very profitable company with profits exceeding $15 billion in 2015.)

Titan X Pascal is 566% faster at AI than last year’s model!

Exactly what did Nvidia achieve? Their new Titan X runs AI instructions 566% faster than the old Titan X card, which was released only a year earlier. Nvidia also got a half-size version of its Drive PX 2 put in all Tesla cars, and this chip is about 4000% faster than the chip it replaced. Finally, Nvidia is working on a successor to the Drive PX 2 called Xavier, which is rumored to come out in about a year and to be at least 400% more energy efficient than the current chip.

These figures of 566%, 4000%, and 400% are much bigger than Intel’s 10% and are causing a fundamental change in how computing is done. It is worth noting that Nvidia’s main competitor, AMD, has also been blowing past Intel’s 10% annual performance gains, so the many-core idea has been proven by multiple companies. In fact, even the graphics portion of Intel’s own chips has been blowing past this 10% performance gain per year.
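Percentages like these are easy to misread, so here is a small sketch that converts them into plain multipliers. It assumes “N% faster” means the new chip does 1 + N/100 times the work of the old one in the same time (or per watt, for the efficiency figure); the helper name and labels are mine.

```python
def speedup_factor(percent_faster):
    """Convert 'N% faster' into an 'X times as fast' multiplier."""
    return 1 + percent_faster / 100

# Figures quoted in this article; the labels are only for printing.
claims = {
    "Titan X Pascal vs. previous Titan X (AI)": 566,
    "Half-size Drive PX 2 vs. the chip it replaced": 4000,
    "Xavier vs. Drive PX 2 (energy efficiency, rumored)": 400,
    "Typical Intel CPU, year over year": 10,
}

for label, percent in claims.items():
    print(f"{label}: {percent}% = {speedup_factor(percent):.2f}x")
```

Read this way, 566% faster is a little under 7 times as fast, while 10% faster is only 1.1 times as fast.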

Thousands of programs have been designed to take advantage of this parallel computing performance. For example, the program BlazingDB runs over 100 times as fast as MySQL, which was designed to run only on CPUs. As the performance gap widens between a standard CPU with a handful of cores and a GPU with thousands of cores, more and more programs are being written to take advantage of GPUs. The growing market for massively parallel chips also means that Nvidia can now afford to spend lots of money on making its chips better. For example, its latest generation of chips, called Pascal, cost over $2 billion to develop. (All this money goes into making a better chip design. Nvidia doesn’t actually build chips; it currently uses TSMC and Samsung for that.)

Lightning-Fast Data

For a long time, data for programs was stored on slow hard drives. Then it moved to SATA SSDs, which got faster each year until they finally hit the bandwidth limit of the SATA standard. Now data is moving to PCIe SSDs, which currently have 6 times the bandwidth of SATA drives, with even faster PCIe drives planned. (A PCIe drive that used 16 lanes like a graphics card would have 4 times the bandwidth of current PCIe drives.) Both Intel’s upcoming Optane 3D XPoint SSDs and Samsung’s Z-NAND SSDs are examples of such faster PCIe drives, and a handful of enterprise SSDs that use 16 lanes already exist.
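The “6 times” and “4 times” figures can be checked with some back-of-the-envelope arithmetic. This sketch assumes that current PCIe drives are PCIe 3.0 with 4 lanes and that the SATA drives are SATA III; the article does not spell out either, so treat those as my assumptions. It also computes theoretical interface limits, not real-world drive speeds.

```python
# Line rates and encoding overheads from the interface standards.
SATA3_GBIT_PER_S = 6.0        # SATA III line rate
SATA3_ENCODING = 8 / 10       # 8b/10b encoding: 8 data bits per 10 sent
PCIE3_GT_PER_S = 8.0          # PCIe 3.0 line rate per lane
PCIE3_ENCODING = 128 / 130    # 128b/130b encoding

def sata3_bandwidth_gb_s():
    """Theoretical SATA III payload bandwidth in gigabytes per second."""
    return SATA3_GBIT_PER_S * SATA3_ENCODING / 8

def pcie3_bandwidth_gb_s(lanes):
    """Theoretical PCIe 3.0 payload bandwidth in GB/s for a lane count."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING / 8 * lanes

sata = sata3_bandwidth_gb_s()
x4 = pcie3_bandwidth_gb_s(4)     # assumed lane count of a typical PCIe SSD
x16 = pcie3_bandwidth_gb_s(16)   # lane count of a graphics card slot

print(f"SATA III:     {sata:.2f} GB/s")
print(f"PCIe 3.0 x4:  {x4:.2f} GB/s ({x4 / sata:.1f}x SATA)")
print(f"PCIe 3.0 x16: {x16:.2f} GB/s ({x16 / x4:.0f}x the x4 drive)")
```

With those assumptions, a 4-lane drive has a bit more than 6 times the bandwidth of SATA, and a 16-lane drive has 4 times the bandwidth of a 4-lane one.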

Even faster than all these drives is storing everything in memory, which is becoming more and more common. When Watson won at Jeopardy in 2011, it kept everything in its 16 terabytes of RAM instead of reading from its drives during the competition. Today Samsung sells 2.5D memory modules that hold 128GB each.

Intel’s Xeons with the highest memory capacity can handle 3 terabytes of memory per chip, and motherboards are being sold that can hold 3 terabytes of Samsung’s 2.5D memory. Here, 2.5D means that four layers of chips are “soldered” on top of each other. (Cheaper non-Xeon systems now hold as much as 128GB of 2D memory, which is pretty good for a home computer.)

Computers Programming Computers

Nvidia’s CEO Jen-Hsun Huang said, “AI is going to increase in capability faster than Moore’s Law. I believe it’s a kind of a hyper Moore’s Law phenomenon because it has the benefit of continuous learning. It has the benefit of large-scale networked continuous learning. Today, we roll out a new software package, fix bugs, update it once a year. That rhythm is going to change. Software will learn from experience much more quickly. Once one smart piece of software on one device learns something, then you can over-the-air (OTA) it across the board. All of a sudden, everything gets smarter.”

Computers Designing Chips

Since the mid-1970s, programs have been used to design chips, as chips have become too complicated for any team of humans to handle. (Nvidia’s Tesla P100 GPUs have 150 billion transistors when you include the memory “soldered” to the top of them!)

A quantum leap in chip design may happen in the near future, as Nvidia recently built a supercomputer for internal research, mainly out of Nvidia Tesla P100 GPUs. This supercomputer was ranked 28th among all computers in the world. What will this computer be used for?

Nvidia said, “We’re also training neural networks to understand chipset design and very-large-scale-integration, so our engineers can work more quickly and efficiently. Yes, we’re using GPUs to help us design GPUs.”

This is a very interesting area to watch: today’s chips are so complicated that they are likely very inefficient, and massive speedups may be available if we can find a better way to optimize them. As an example of the gains possible, Nvidia got about a 50% performance increase between its Kepler and Maxwell generations even though both microarchitectures use the same 28nm technology.

Conclusion

The new Virtual Moore’s Law is already having a massive effect. Jen-Hsun said, “By collaborating with AI developers, we continued to improve our GPU designs, system architecture, compilers, and algorithms, and sped up training deep neural networks by 50x in just three years — a much faster pace than Moore’s Law.”
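As a rough check on “a much faster pace than Moore’s Law,” this sketch compares a 50x gain in three years with what 18-month doubling would give over the same period. It assumes smooth exponential growth in both cases, and the function names are mine.

```python
import math

def growth_factor(years, doubling_months=18):
    """Total growth after `years` if performance doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)

def implied_doubling_months(factor, years):
    """Doubling time in months implied by growing `factor`-fold in `years`."""
    return years * 12 * math.log(2) / math.log(factor)

moore_three_years = growth_factor(3)
deep_learning_doubling = implied_doubling_months(50, 3)

print(f"18-month doubling over 3 years: {moore_three_years:.0f}x")
print(f"50x over 3 years means doubling every {deep_learning_doubling:.1f} months")
```

On these assumptions, 18-month doubling gives 4x in three years, while 50x in three years corresponds to doubling roughly every six months.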

With chips going vertical, chip architectures going massively parallel, lightning-fast data, computers programming computers, and computers designing chips, the Singularity is closer than you think!

Technical Note for Geeks

Here is what I actually meant by “soldered”:

Conventional chip packages interconnect die stacks using wire bonding, whereas in TSV packages, the chip dies are ground down to a few dozen micrometers, pierced with hundreds of fine holes and vertically connected by electrodes passing through the holes, allowing for a significant boost in signal transmission. TSV stands for through-silicon via.