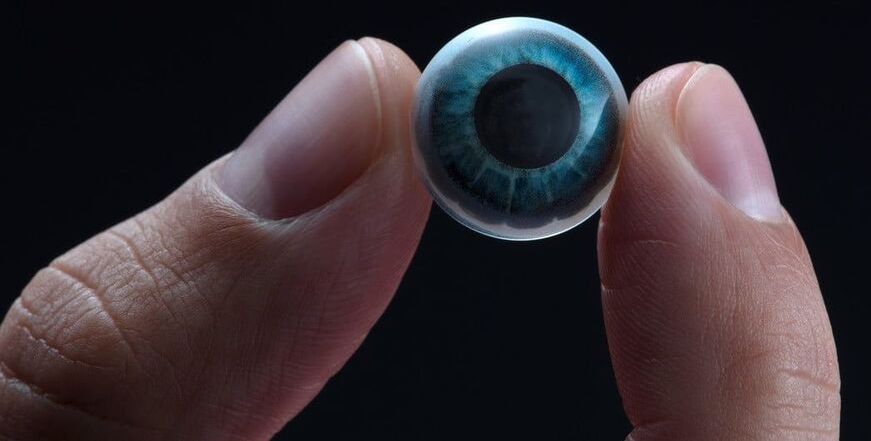

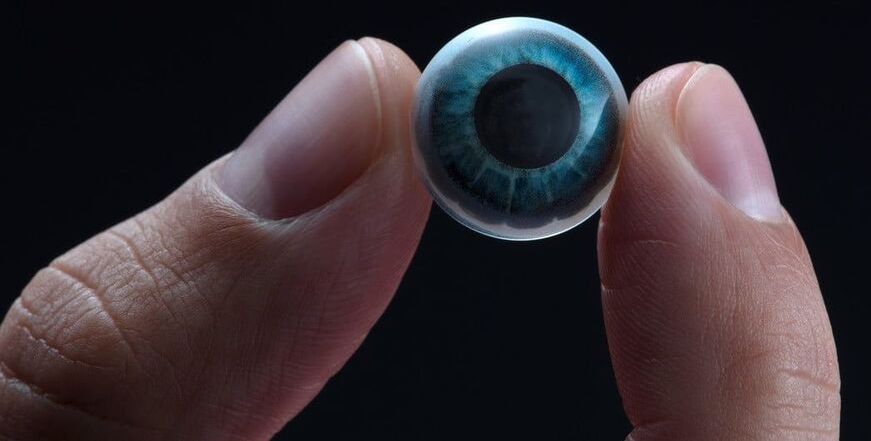

Smart contact lenses are finally becoming a reality. And the future of this intriguing technology is nothing like what you might expect.

Today, at a special AR/VR-focused event held inside its virtual reality community platform Altspace, Microsoft showcased a new product that aims to give its AR HoloLens platform and its VR Windows Mixed Reality platform a shared space for meetings.

The app is called Microsoft Mesh, and it gives users a cross-AR/VR meeting space to interact with other users and 3D content, handling all of the technically hard parts of sharing spatial multi-player experiences over the web. Like Microsoft’s other AR/VR apps, the sell seems to be less in the software itself than in enabling developers to tap into one more specialization of Azure and build their own software on top of its capabilities. The company announced that AltspaceVR will now be Mesh-enabled.

In the company’s presentation, they swung for the fences in showcasing potential use cases, bringing in James Cameron, the co-founder of Cirque du Soleil and Pokémon Go developer Niantic.

Today, the most common use cases are much more mundane, including smartphone-based games and apps like Pokémon Go or Apple’s Ruler app, which use the phone’s screen and camera rather than relying on glasses or another set of screens sitting on your face. The few companies that are actively producing AR glasses are mostly focused on work scenarios, like manufacturing and medicine.

Industry watchers and participants think that Apple has a good chance to validate and revolutionize augmented reality like it did with smartphones.

We can immediately supersede the Mojo Vision approach to retinal projection with an interim projection system using metalenses. The Mojo Lens approach is to try to put everything, including the television screen, projection method and energy source, onto one contact lens. With recent breakthroughs in scaling up the size of metalenses, a contact metalens can instead be combined with a small pair of glasses. This is emphatically not the Google Glass approach, which did not use modern metalenses. The system would work as follows:

1) Thin TV cameras are mounted on both sides of a pair of wearable glasses.

2) The images from these cameras are projected via projection metalenses in a narrow beam to the center of the pupils.

3) A contact lens with a tiny metalens mounted in the center, directly over the pupil, spreads this incoming beam outwards, through the pupil, onto the full width of the curved retina.

The end result would be a 360-degree, full-panorama image. This image can be a high-resolution, real-time view of the wearer’s surroundings, a projection of a movie, or augmented reality superimposed on the normal field of vision. It can inherently be full-color 3D. Of course, such a system will be complemented with earphones. Modern hearing aids are already so small they can barely be seen, and have batteries that last a week. A pair of earphones will also allow full 3D sound and will be the audible complement of augmented vision.

Cameras in cell phones using traditional lenses are already very thin, and even they could be used for an experimental system of this type, but the metalens cameras will make this drastically thinner. The projection lens system must work in combination with the lens over the pupil. This also means that when the glasses are removed, the contact lens must also be removed, or the vision will be distorted.

The end result will be a pair of glasses, not quite as thin as an ordinary pair of glasses, but still very thin and comfortable. Instead of trying to mount the power source in the contact lens, like Mojo Vision is trying to do, a small battery would be mounted in the glasses. Mojo Vision is probably going to have to do something similar for the power source: put the battery in a small pair of glasses that projects the energy onto its contact lens.

This also solves the problem of the exit pupil limitations from binoculars. The entire light of the objective metalenses is projected through the pupil, since it is using a narrow beam projection method. The special lens mounted on the contact lens will be tailored to work with this system. The included photograph is of underwater, single-separated goggles, which gives an idea of what this might look like.

Harvard’s Capasso Group has scaled up the achromatic metalens to 2mm in diameter. That may not sound like much, but it is plenty for virtual reality contact lenses. The human pupil is 7mm at widest. These guys are going to beat Mojo Lens to the finish line for smart contact lenses.
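As a rough back-of-the-envelope check on those two figures, the sketch below compares the areas of a 2 mm metalens and a 7 mm pupil. It treats both as flat circles, which is a simplifying assumption for illustration and not an optical model; the function and variable names are made up for this example.

```python
import math


def disc_area_mm2(diameter_mm: float) -> float:
    """Area of a circular aperture in square millimetres."""
    return math.pi * (diameter_mm / 2) ** 2


# Both diameters are the figures quoted in the text above.
METALENS_DIAMETER_MM = 2.0
MAX_PUPIL_DIAMETER_MM = 7.0

metalens_area = disc_area_mm2(METALENS_DIAMETER_MM)
pupil_area = disc_area_mm2(MAX_PUPIL_DIAMETER_MM)

# Share of a fully dilated pupil that the metalens would sit in front of.
covered_fraction = metalens_area / pupil_area

print(f"metalens area: {metalens_area:.2f} mm^2")
print(f"pupil area:    {pupil_area:.2f} mm^2")
print(f"fraction of widest pupil covered: {covered_fraction:.1%}")
```

Under these assumptions the metalens covers about 8% of a fully dilated pupil (4/49 of its area), so it fits over the pupil with plenty of room to spare.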


Here’s how it worked: red buoys placed along the river walk indicated the locations of the digital artworks. Visitors had to install an app on their phones called Acute Art. Pointing their phones at the area around the buoys, they’d see the digital sculptures appear.

The artwork didn’t follow any particular theme, but rather consisted of everything from a giant, furry spider to a wriggling octopus to a levitating spiritual leader. Artists included Norwegian Bjarne Melgaard, Chinese Cao Fei, Argentine Tomas Saraceno, German Alicja Kwade, American KAWS, and several others.

“I want to use augmented reality to shape emotional connections with humans,” Fei told AnOther. “Augmented reality can re-enact what has happened in the past and provide an alternative to reality that is open-ended.”

The process of systems integration (SI) functionally links together infrastructure, computing systems, and applications. SI can allow for economies of scale, streamlined manufacturing, and better efficiency and innovation through combined research and development.

New to the systems integration toolbox is the emergence of transformative technologies and, especially, the growing capability to integrate functions due to exponential advances in computing, data analytics, and material science. These new capabilities are already having a significant impact on creating our future destinies.

The systems integration process has served us well and will continue to do so. But it needs augmenting. We are on the cusp of scientific discovery that often combines the physical with the digital: the Techno-Fusion, or merging, of technologies. Like Techno-Fusion in music, Techno-Fusion in technologies is a trend that experiments with and transcends traditional ways of integration. Among many, there are five grouping areas that I consider good examples to highlight the changing paradigm. They are: Smart Cities and the Internet of Things (IoT); Artificial Intelligence (AI), Machine Learning (ML), Quantum and Super Computing, and Robotics; Augmented Reality (AR) and Virtual Reality (VR) Technologies; Health, Medicine, and Life Sciences Technologies; and Advanced Imaging Science.

AR cloud technology enables the unification of the physical and digital world to create immersive experiences. This technology uses a common interface to deliver persistent, collaborative and contextual digital content overlaid onto people, objects and locations. This provides users with information and services directly tied to every aspect of their physical surroundings.

The AR cloud will use a common interface to overlay digital content onto people, objects, and locations in a persistent, interactive way.