Japan is using Augmented Reality to teach children about the dangers of flash floods 🌊 https://bit.ly/3kYclTI


Forward-looking: The service department can seem like the slowest part of a dealership, especially when it’s your car getting worked on. But Mercedes-Benz is infusing its dealerships with AR technology to speed up the diagnosis and repair of tricky and complex issues with its Virtual Remote Support, powered by Microsoft’s HoloLens 2 and Dynamics 365 Remote Assist. I visited one of Mercedes’ showcases last week to check out the implementation and get some hands-on time with the HoloLens 2.

For customers who bring in a hard-to-solve problem, Mercedes-Benz would commonly call in a "flying doctor": an expert from HQ who would fly in and get hands-on with the issue. That could take days to arrange, not to mention the costs and environmental impact of flying these technical specialists in from all over the country. All the while, the customer is left without their luxury automobile.

While many shoppers go with a Mercedes for the styling and performance, it’s the service experience that impacts their future purchases. Long waits could see someone changing brands in the future.


UNDRR


The Status of Science and Technology report is an important step in monitoring progress on implementing the Sendai Framework. It is also an attempt to capture some of the progress made across geographies, stakeholders, and disciplines in applying science and technology to risk reduction in Asia-Pacific.

RENO, Nev. (KOLO) — Over the last 15 months, some of the world’s most advanced spinal care has taken place in Northern Nevada.

In July 2020, Spine Nevada’s Dr. James Lynch became the first surgeon in the country to use Augmedics Xvision in a community hospital. He is now the first to reach 100 cases using the cutting-edge technology, which essentially lets surgeons look through a patient’s skin using a preloaded CT scan and a virtual headset.

“A long fusion that would’ve taken us an hour before can be done in about 15 minutes,” said Dr. Lynch. “The proof is in the pudding. The last 100 patients, most of them have done very well and benefited from this technology.”

The University of Bristol is part of an international consortium of 13 universities that collaborated with Facebook AI to advance egocentric perception. As a result of this initiative, we have built the world’s largest egocentric dataset using off-the-shelf, head-mounted cameras.

Progress in the fields of artificial intelligence (AI) and augmented reality (AR) requires learning from the same data humans process to perceive the world. Our eyes allow us to explore places, understand people, manipulate objects and enjoy activities—from the mundane act of opening a door to the exciting interaction of a game of football with friends.

Egocentric 4D Live Perception (Ego4D) is a massive-scale dataset that compiles 3,025 hours of footage from the wearable cameras of 855 participants in nine countries: UK, India, Japan, Singapore, KSA, Colombia, Rwanda, Italy, and the US. The data captures a wide range of activities from the ‘egocentric’ perspective, that is, from the viewpoint of the person carrying out the activity. The University of Bristol is the only UK representative in this diverse and international effort, collecting 270 hours from 82 participants who captured footage of their chosen activities of daily living, such as practicing a musical instrument, gardening, grooming their pet, or assembling furniture.

“In the not-too-distant future you could be wearing smart AR glasses that guide you through a recipe or how to fix your bike—they could even remind you where you left your keys,” said Principal Investigator at the University of Bristol and Professor of Computer Vision, Dima Damen.

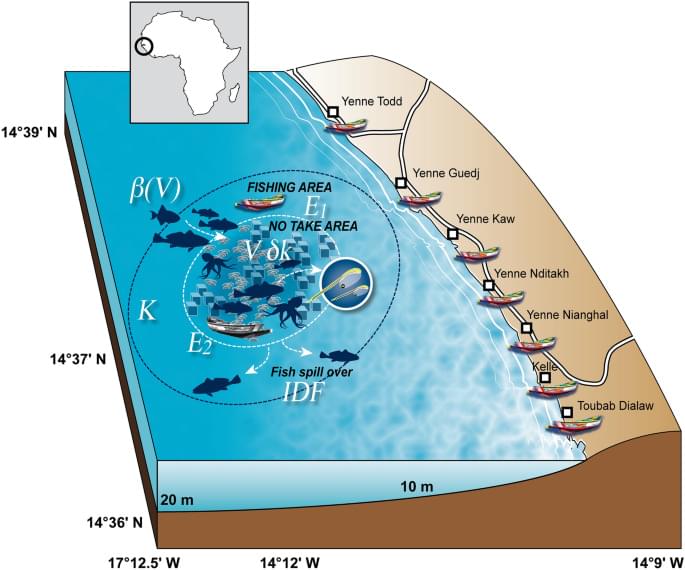

Coastal artisanal fisheries, particularly those in developing countries, are facing a global crisis of overexploitation1. Artificial reefs (ARs), or human-made reefs2, have been widely advocated by governmental and non-governmental conservation and management organizations as a way to address these issues. Industries seeking to avoid the costs of removing or conventionally disposing of used materials, particularly oil and gas, are often major advocates for deploying ARs. Yet major questions remain about the success of such efforts where governance is weak and international investment in AR development projects is poorly sustained. There is frequently confusion over whether ARs should be fishing sites, and the precise goals of constructing them are often unclear, making it difficult to evaluate their success3. Over the last 40 years, both failed and successful AR implementation programs have been reported4,5. The main point of the present work is to underline the importance of governance and to address the social and management factors behind AR “success”.

To improve fishery yields, it has been recommended that ARs be no-take areas (e.g.,2). Yet most ARs were historically delineated as fishing sites4 and were rarely implemented at large scale as no-take zones, even in countries with centuries of experience in constructing ARs, such as Japan. In Japan, fishery authorities and local fishers use ARs to promote sustainable catches and to establish nursery grounds for target species6. However, fishery authorities and local fishery cooperatives in Japan have extensive management authority over ARs. For example, fishing around ARs is usually limited to hook-and-line techniques, and net fishing is rarely permitted in areas where the risk of entanglement in ARs is high. Furthermore, fishing gear and fishing seasons around ARs in Japan are often restricted during spawning. These practices are recognized as effective at maintaining good fishing performance and marine conservation in Japan and elsewhere they have been implemented7.

Attempts to transpose ARs to developing countries have, however, frequently ended in failure8, particularly when project funding comes to an end9. It is therefore important to provide recommendations that improve the sustainability of AR deployments and help them meet their biodiversity conservation and fisheries management goals. This is especially important in developing countries, which are often characterized by poor governance. For fisheries scientists and marine ecologists, the effectiveness of ARs is primarily quantified by surveying fish populations on ARs. In particular, it remains under debate whether ARs facilitate the “production” of new fish or only attract fish from the surrounding area10,11,12. Few studies have documented how ARs are managed or the impacts of such management8,13, even though the key importance of protecting no-take ARs from illegal fishing has been repeatedly highlighted2.

Mathematical models that set the AR volume to maximize catches suggest that attraction and production effects can modulate the response. However, the effect of ARs on fisheries mostly depends on governance options and efficiency14. Existing models show that fishing exclusively on ARs consistently lowers equilibrium catches. In contrast, fishing in the areas surrounding ARs can raise or lower catches, depending on the magnitude of the AR attraction effect14. Whether ARs are managed as no-take areas influences these outcomes. On unmanaged ARs, for instance, the risk of overexploitation increases, because fish become more accessible to fishing fleets. When fishing is banned on ARs, however, the fish biomass concentrated near the AR rises. This produces a “spill-over” effect that increases equilibrium catches in adjacent fishing areas15.

If you follow the latest trends in the tech industry, you probably know that there’s been a fair amount of debate about what the next big thing is going to be. Odds-on favorite for many has been augmented reality (AR) glasses, while others point to fully autonomous cars, and a few are clinging to the potential of 5G. With the surprise debut of Amazon’s Astro a few weeks back, personal robotic devices and digital companions have also thrown their hat into the ring.

However, while there has been little agreement on exactly what the next thing is, there seems to be little disagreement that whatever it turns out to be, it will be somehow powered, enabled, or enhanced by artificial intelligence (AI). Indeed, the fact that AI and machine learning (ML) are our future seems to be a foregone conclusion.

Yet, if we do an honest assessment of where some of these technologies actually stand on a functionality basis versus initial expectations, it’s fair to argue that the results have been disappointing on many levels. In fact, if we extend that thought process out to what AI/ML were supposed to do for us overall, then we start to come to a similarly disappointing conclusion.

Imagine a place where you could stay young forever, name a city after yourself, or even become president. Sounds like a dream? Even if such dreams can't come true in the real world, they can in the virtual world of a metaverse. Some believe the metaverse is the future of the internet, a place where people would not only browse but also enter the digital world themselves, in the form of avatars.

The advent of AR, blockchain, and VR devices in the last few years has sparked development of the metaverse. Moreover, the rapid advance of gaming technology, which now offers immersive gameplay experiences, gives us a glimpse of what the metaverse could look like. It also suggests that we are closer than ever to experiencing a virtual world of our own.

But first, Facebook is going to have to bridge the territory of privacy — not just for those who might have photos taken of them, but for the wearers of these microphone and camera-equipped glasses. VR headsets are one thing (and they come off your face after a session). Glasses you wear around every day are the start of Facebook’s much larger ambition to be an always-connected maker of wearables, and that’s a lot harder for most people to get comfortable with.

Walking down my quiet suburban street, I’m looking up at the sky. Recording the sky. Around my ears, I hear ABBA’s new song, I Still Have Faith In You. It’s a melancholic end to the summer. I’m taking my new Ray-Ban smart glasses for a walk.

The Ray-Ban Stories feel like a conservative start. They lack some features that similar products already have. The glasses act as earbud-free headphones, but they don’t have 3D spatial audio like the Bose Frames and Apple’s AirPods Pro do. The stereo cameras on either side of the lenses don’t work with AR effects, either. Facebook offers a few sort-of-AR tricks in a brand-new companion phone app called View, which pairs with the glasses. However, these are mostly ways of using depth data for a few quick social effects.

Traditional networks cannot keep up with the demands of modern computing, such as cutting-edge computation and bandwidth-hungry services like video analytics and cybersecurity. In recent years, network research has shifted its focus toward two concepts that could overcome the limitations of traditional networking: software-defined networks (SDN) and network function virtualization (NFV). SDN is an approach to network architecture in which software applications control the network. NFV, by contrast, moves functions such as firewalls and encryption onto virtual servers. SDN and NFV can help enterprises operate more efficiently and reduce costs. Combining the two could therefore be more powerful than using either one alone.

In a recent study published in IEEE Transactions on Cloud Computing, researchers from Korea now propose such a combined SDN/NFV network architecture that seeks to introduce additional computational functions to existing network functions. “We expect our SDN/NFV-based infrastructure to be considered for the future 6G network. Once 6G is commercialized, the resource management technique of the network and computing core can be applied to AR/VR or holographic services,” says Prof. Jeongho Kwak of Daegu Gyeongbuk Institute of Science and Technology (DGIST), Korea, who was an integral part of the study.

The new network architecture aims to create a holistic framework that can fine-tune processing resources that use different (heterogeneous) processors for different tasks and optimize networking. The unified framework will support dynamic service chaining, which allows a single network connection to be used for many connected services like firewalls and intrusion protection; and code offloading, which involves shifting intensive computational tasks to a resource-rich remote server.
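The two mechanisms named above can be illustrated with a toy Python sketch. It is a generic illustration under stated assumptions, not the DGIST architecture. The function names (`firewall`, `intrusion_detection`, `run_chain`, `should_offload`), the blocked port, the payload signature, and the simple latency-only offloading rule are all hypothetical.

- **Service chaining.** A packet passes through an ordered list of virtual network functions, and any function in the list may drop it.
- **Code offloading.** A task moves to a remote server only if the upload time plus the remote compute time beats the local compute time.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical packet model: a plain dict; a network function returns None to drop it.
Packet = dict
NetworkFunction = Callable[[Packet], Optional[Packet]]


def firewall(pkt: Packet) -> Optional[Packet]:
    # Hypothetical rule: drop traffic destined for port 23 (telnet).
    return None if pkt.get("dst_port") == 23 else pkt


def intrusion_detection(pkt: Packet) -> Optional[Packet]:
    # Hypothetical signature check on the raw payload bytes.
    return None if b"attack" in pkt.get("payload", b"") else pkt


def run_chain(pkt: Packet, chain: List[NetworkFunction]) -> Optional[Packet]:
    """Pass a packet through each virtual network function in order."""
    current: Optional[Packet] = pkt
    for nf in chain:
        current = nf(current)
        if current is None:  # dropped by this function; stop the chain
            return None
    return current


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs
    input_bits: float  # data that must be uploaded if the task is offloaded


def should_offload(task: Task, local_hz: float, remote_hz: float, uplink_bps: float) -> bool:
    """Offload only if upload time plus remote compute time beats local compute time."""
    local_time = task.cycles / local_hz
    remote_time = task.input_bits / uplink_bps + task.cycles / remote_hz
    return remote_time < local_time


if __name__ == "__main__":
    chain = [firewall, intrusion_detection]
    print(run_chain({"dst_port": 443, "payload": b"hello"}, chain))  # passes both functions
    print(run_chain({"dst_port": 23, "payload": b"hello"}, chain))   # dropped by the firewall
    heavy = Task(cycles=5e9, input_bits=8e6)
    print(should_offload(heavy, local_hz=1e9, remote_hz=1e10, uplink_bps=1e8))
```

For the heavy task in the usage example, local execution takes 5 s, while offloading takes 0.08 s to upload plus 0.5 s to compute, so it offloads. A tiny task with a large input would stay local. A real framework would also weigh energy, queueing, and server load, which this sketch ignores.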