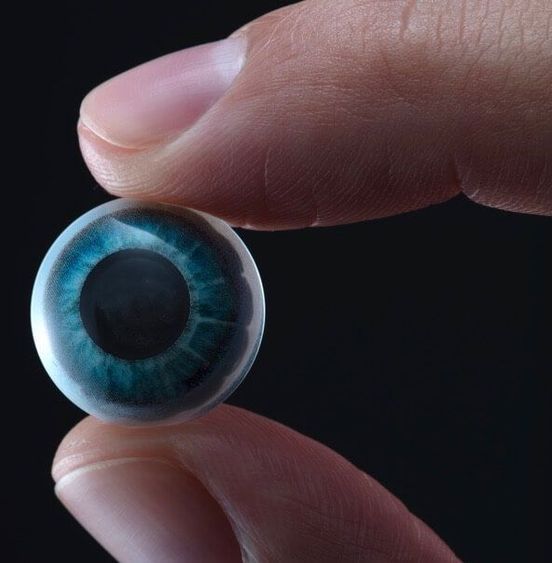

Researchers have fashioned ultrathin silicon nanoantennas that trap and redirect light, for applications in quantum computing, LIDAR and even the detection of viruses.

Light is notoriously fast. Its speed is crucial for rapid information exchange, but as light zips through materials, its chances of interacting with and exciting atoms and molecules can become very small. If scientists can put the brakes on light particles, or photons, it would open the door to a host of new technology applications.

Now, in a paper published on August 17, 2020, in Nature Nanotechnology, Stanford scientists demonstrate a new approach to slowing light significantly, much as an echo chamber holds onto sound, and directing it at will. Researchers in the lab of Jennifer Dionne, associate professor of materials science and engineering at Stanford, structured ultrathin silicon chips into nanoscale bars to resonantly trap light and then release or redirect it later. These “high-quality-factor” or “high-Q” resonators could lead to novel ways of manipulating and using light, including new applications for quantum computing, virtual reality and augmented reality; light-based WiFi; and even the detection of viruses like SARS-CoV-2.