Australia’s increased investment in quantum technology.

The coming scientific revolution will make today’s supercomputers seem sluggish.

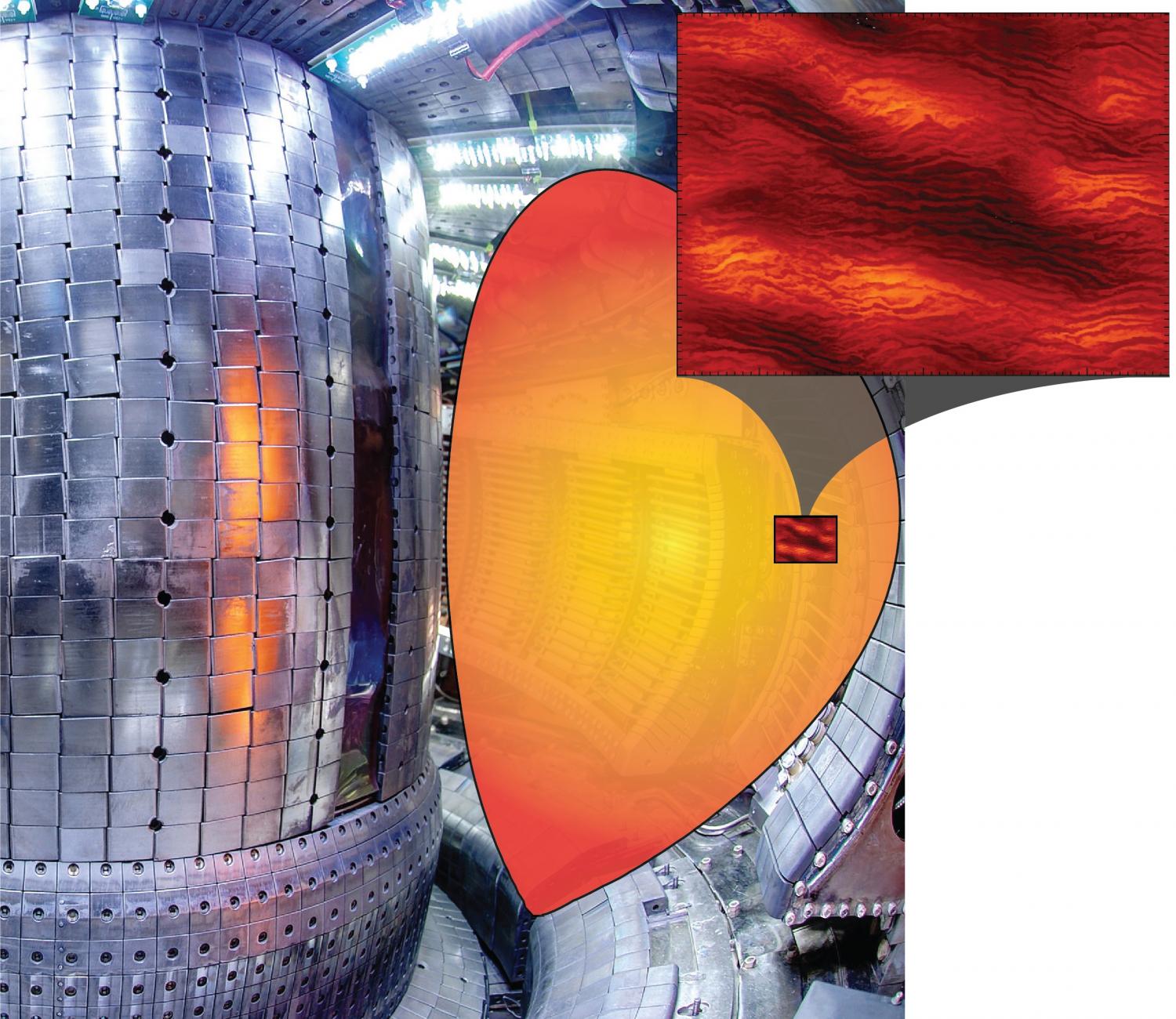

Solving the plasma turbulence mystery.

Cutting-edge simulations run at Lawrence Berkeley National Laboratory’s National Energy Research Scientific Computing Center (NERSC) over a two-year period are helping physicists better understand what influences the behavior of the plasma turbulence that is driven by the intense heating necessary to create fusion energy. This research has yielded exciting answers to long-standing questions about plasma heat loss that have previously stymied efforts to predict the performance of fusion reactors and could help pave the way for this alternative energy source.

The key to making fusion work is to maintain a temperature and density high enough for the hydrogen nuclei in the reactor to overcome their mutual electrostatic repulsion and fuse to form helium. One side effect of the intense heating, however, is turbulence, which can increase the rate of plasma heat loss and significantly limit the resulting energy output. Researchers have therefore been working to pinpoint what causes the turbulence and how to control or possibly eliminate it.

Because fusion reactors are extremely complex and expensive to design and build, supercomputers have been used for more than 40 years to simulate reactor conditions and guide better designs. NERSC, a Department of Energy Office of Science User Facility, has supported fusion research since 1974.

Canadian scientists have apparently opened the door to the world of biological supercomputers: this week they unveiled a prototype of a potentially revolutionary unit that is as small as a book, energy-efficient, mathematically powerful and, importantly, does not overheat.

In what at first sounds like a storyline ripped from a sci-fi thriller, a multinational research team spread across two continents and four countries has, after ten years of work, created a model of a supercomputer that runs on the same substance living things use as an energy source.

Humans and virtually all living things rely on adenosine triphosphate (ATP) to provide the energy our cells need to perform daily functions. The biological computer created by the team led by Professor Dan Nicolau, Chair of the Department of Bioengineering at McGill, also relies on ATP for power.

The biological computer processes information quickly and accurately using parallel networks, much as today’s massive electronic supercomputers do. It is also a lot smaller, uses less energy, and functions using proteins present in all living cells.

I must admit that when people learn you work in quantum computing and/or networking, they have no idea how to classify you, because you’re working on next-generation “disruptive” technology that most of the mainstream has not been exposed to.

Peter Wittek and I met more than a decade ago while he was an exchange student in Singapore. I consider him one of the most interesting people I’ve met and an inspiration to us all.

He is currently a research scientist working on quantum machine learning, an emerging field halfway between data science and quantum information processing. Peter also has a long history in machine learning on supercomputers and large-scale simulations of quantum systems. A former digital nomad, Peter has been to over a hundred countries; he is now based in Barcelona, where, outside work hours, he focuses on dancing salsa, running long distances, and advising startups.

Researchers have taken on the problem of shrinking a supercomputer the size of a basketball court to the size of a book. Their answer is “biocomputers”: incredibly powerful machines capable of performing many calculations with a fraction of the energy.

According to study coordinator Heiner Linke, who heads nanoscience at Lund University in Sweden, “a biocomputer requires less than one percent of the energy an electronic transistor needs to carry out one calculation step.”

A biocomputer is useful because ordinary computers struggle with combinatorial problems, such as those in cryptography or other tasks that require a multitude of possible solutions to be considered before settling on the optimal one. The number of candidates grows exponentially with the size of the problem, and a conventional computer must work through them largely one after another. Biocomputers already exist, but the new research from Lund tackles the key problems of scalability and energy efficiency.
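To make the idea of a combinatorial problem concrete, here is a hedged sketch of a brute-force search over every subset of a small set of integers. Subset sum is chosen purely as an assumed illustration (the article does not name a specific problem), and the function names and example values are hypothetical. Each extra element doubles the number of candidate subsets, which is why exploring them in parallel is attractive.

```python
from itertools import combinations


def reachable_sums(values):
    """Enumerate all 2**n subsets of `values` and return the set of their sums.

    A conventional computer visits the subsets one after another; a parallel
    network would, in principle, explore them simultaneously.
    """
    sums = set()
    for size in range(len(values) + 1):
        for subset in combinations(values, size):
            sums.add(sum(subset))
    return sums


def subset_sum(values, target):
    """Return the first subset of `values` (smallest size first) summing to `target`, or None."""
    for size in range(len(values) + 1):
        for subset in combinations(values, size):
            if sum(subset) == target:
                return subset
    return None


if __name__ == "__main__":
    values = [2, 5, 9]  # hypothetical example set
    print(sorted(reachable_sums(values)))  # [0, 2, 5, 7, 9, 11, 14, 16]
    print(subset_sum(values, 14))          # (5, 9)
    print(2 ** len(values), "candidate subsets in the worst case")
```

With only three numbers there are 8 subsets to check; with 60 numbers there would be more than 10^18, which is where brute force on a sequential machine becomes impractical.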

Adenosine triphosphate (ATP), the substance that provides energy to all the cells in our bodies, may also be able to power the next generation of supercomputers, opening the door to biological supercomputers about the size of a book. That is what an international team of researchers led by Prof. Nicolau, Chair of the Department of Bioengineering at McGill, believes. Earlier this week they published an article in the Proceedings of the National Academy of Sciences (PNAS) describing a model biological computer they have built that processes information very quickly and accurately using parallel networks, in the same way that massive electronic supercomputers do.

The difference is that their model biological supercomputer is far smaller than current supercomputers, uses much less energy, and relies on proteins present in all living cells to function.

Doodling on the back of an envelope

“We’ve managed to create a very complex network in a very small area,” says Dan Nicolau Sr. with a laugh. He began working on the idea with his son, Dan Jr., more than a decade ago and was joined some seven years ago by colleagues from Germany, Sweden and the Netherlands. “This started as a back-of-an-envelope idea, after too much rum, I think, with drawings of what looked like small worms exploring mazes.”

A fun story:

Advances in artificial intelligence have raised the question of a supercomputer running for office.

Someday this could happen, and AI could likewise find a place in the US Congress, the Supreme Court, the UN, NATO, the IAEA, the WTO, the World Bank, and so on.

IBM’s AI researchers seem to favour recreational drug use, free university education and free healthcare.

Love the whole beautiful picture of a big data quantum computing cloud. We’re definitely going to need it for all our data and performance demands once you layer in the future of AI (including robotics), wearables, and our ongoing convergence toward the singularity with nanobots and other BMI (brain-machine interface) technologies. Why, we could easily exceed $4.6 billion by 2021.

From gene mapping to space exploration, humanity continues to generate ever-larger data sets, far more information than people can actually process, manage, or understand.

Machine learning systems can help researchers deal with this ever-growing flood of information. Some of the most powerful of these analytical tools are based on a strange branch of geometry called topology, which deals with properties that stay the same even when something is bent and stretched every which way.

Such topological systems are especially useful for analyzing the connections in complex networks, such as the internal wiring of the brain, the U.S. power grid, or the global interconnections of the Internet. But even with the most powerful modern supercomputers, such problems remain daunting and impractical to solve. Now, a new approach that would use quantum computers to streamline these problems has been developed by researchers at MIT, the University of Waterloo, and the University of Southern California…
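The topological quantities typically computed in such analyses are Betti numbers, which count connected components, loops, and higher-dimensional holes (this framing is an assumption; the article does not name them). A minimal classical sketch for the simplest case, a network treated as a graph, shows the idea: b0 is the number of connected components, and b1 = E - V + b0 is the number of independent loops, both unchanged however the network is bent or stretched. The function name is hypothetical. Higher Betti numbers require building simplicial complexes whose size grows combinatorially, and that blow-up is what makes the full problem hard for classical machines.

```python
def betti_numbers_of_graph(num_vertices, edges):
    """Return (b0, b1) for an undirected graph with vertices 0..num_vertices-1.

    b0 = number of connected components (found with union-find)
    b1 = number of independent cycles = E - V + b0
    """
    parent = list(range(num_vertices))

    def find(v):
        # Path halving keeps the union-find trees shallow.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    # Merge the components joined by each edge.
    for u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u != root_v:
            parent[root_u] = root_v

    b0 = len({find(v) for v in range(num_vertices)})
    b1 = len(edges) - num_vertices + b0
    return b0, b1


if __name__ == "__main__":
    # A four-node ring plus one isolated node: two pieces, one loop.
    ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
    print(betti_numbers_of_graph(5, ring))  # (2, 1)
```

Union-find is used here because it counts components in near-linear time; a real topological data analysis pipeline would go further and compute homology of a simplicial complex built from the data.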