Interesting insight on using aluminum nitride to create qubits.

http:///articles/could-aluminum-nitride-be-engineered-to-produce-quantum-bits

Newswise — Quantum computers have the potential to break common cryptography techniques, search huge datasets and simulate quantum systems in a fraction of the time it would take today’s computers. But before this can happen, engineers need to be able to harness the properties of quantum bits or qubits.

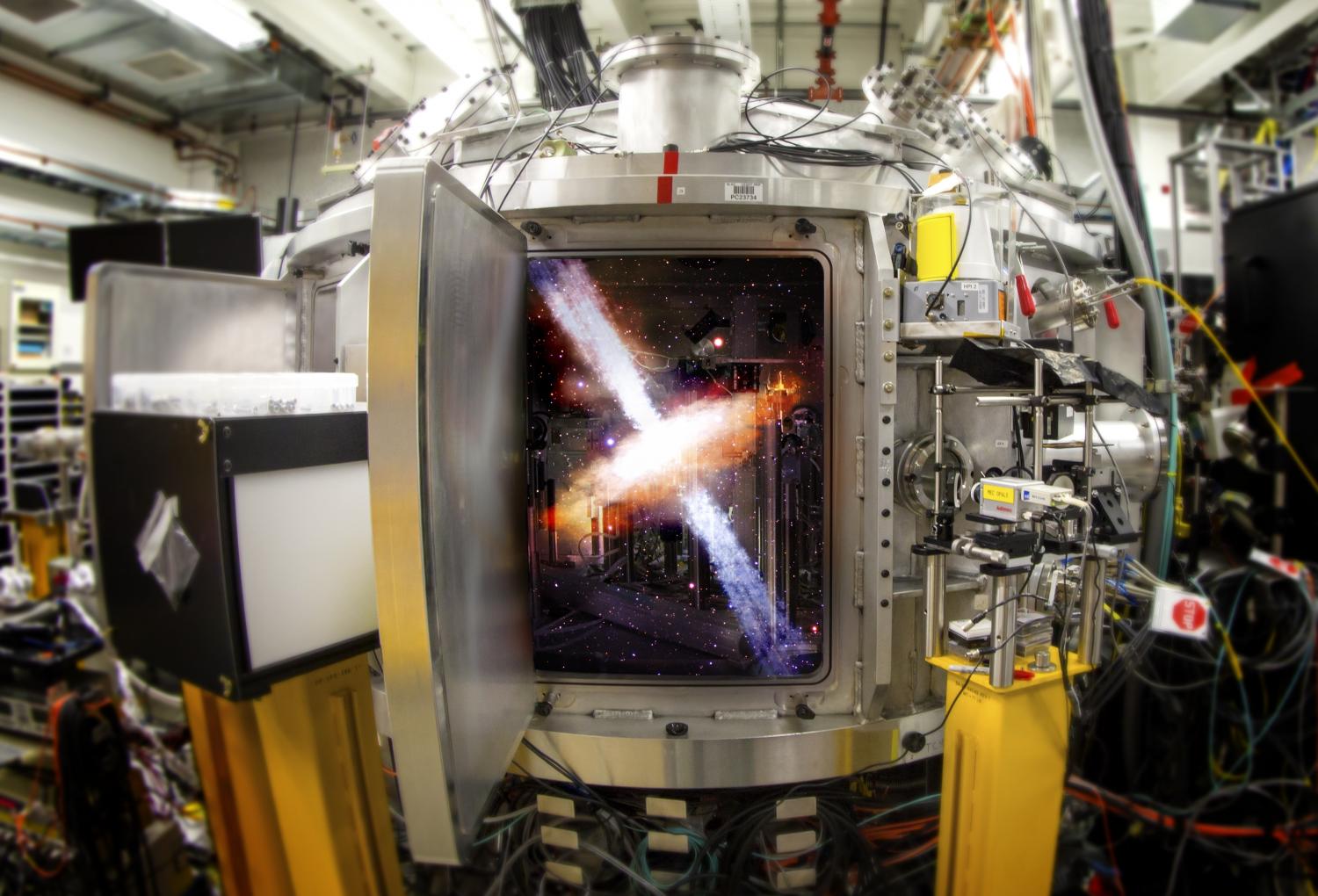

Currently, one of the leading methods for creating qubits in materials involves exploiting structural atomic defects in diamond. But several researchers at the University of Chicago and Argonne National Laboratory believe that if an analogous defect could be engineered into a less expensive material, the cost of manufacturing quantum technologies could be significantly reduced. Using supercomputers at the National Energy Research Scientific Computing Center (NERSC), which is located at Lawrence Berkeley National Laboratory (Berkeley Lab), these researchers have identified a possible candidate in aluminum nitride. Their findings were published in Nature Scientific Reports.

“Silicon semiconductors are reaching their physical limits—it’ll probably happen within the next five to 10 years—but if we can implement qubits into semiconductors, we will be able to move beyond silicon,” says Hosung Seo, University of Chicago Postdoctoral Researcher and a first author of the paper.