Humans can find objects in their surroundings and detect some of their properties simply by touching them. While this skill is particularly valuable for blind individuals, it also helps people without visual impairments complete simple tasks, such as locating and grabbing an object inside a bag or pocket.

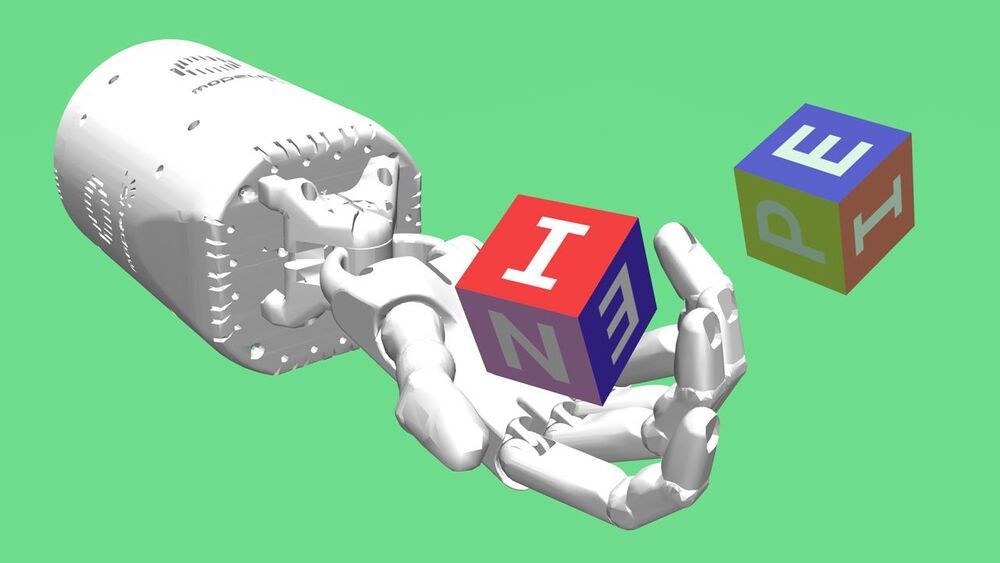

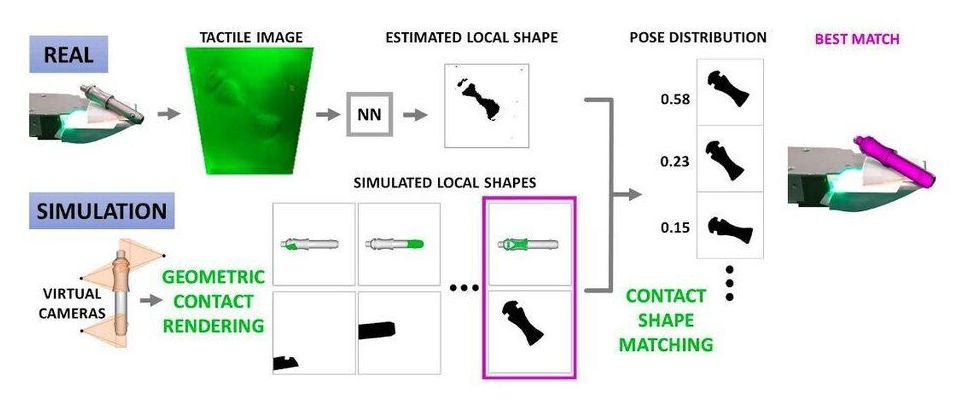

Researchers at the Massachusetts Institute of Technology (MIT) have recently carried out a study aimed at replicating this human capability in robots, allowing them to determine where objects are located simply by touching them. Their paper, pre-published on arXiv, highlights the advantages of developing robots that can interact with their surrounding environment through touch rather than through vision and audio processing alone.

“The goal of our work was to demonstrate that with high-resolution tactile sensing it is possible to accurately localize known objects even from the first contact,” Maria Bauza, one of the researchers who carried out the study, told TechXplore. “Our approach makes an important leap compared to previous works on tactile localization, as we do not rely on any other external sensing modality (like vision) or previously collected tactile data related to the manipulated objects. Instead, our technique, which was trained directly in simulation, can localize known objects from the first touch, which is paramount in real robotic applications where real data collection is expensive or simply unfeasible.”