Terrain-relative navigation helped Perseverance land – and Ingenuity fly – autonomously on Mars. Now it’s time to test a similar system while exploring another frontier.

Got milk?

Hoping to capitalise on a surge in demand for home deliveries, a Singapore technology company has deployed a pair of robots to bring residents their groceries in one part of the city-state.

The robots, developed by OTSAW Digital and both named “Camello”, have been offered to 700 households in a one-year trial.

Users can book delivery slots for their milk and eggs, and an app notifies them when the robot is about to reach a pick-up point — usually the lobby of an apartment building.

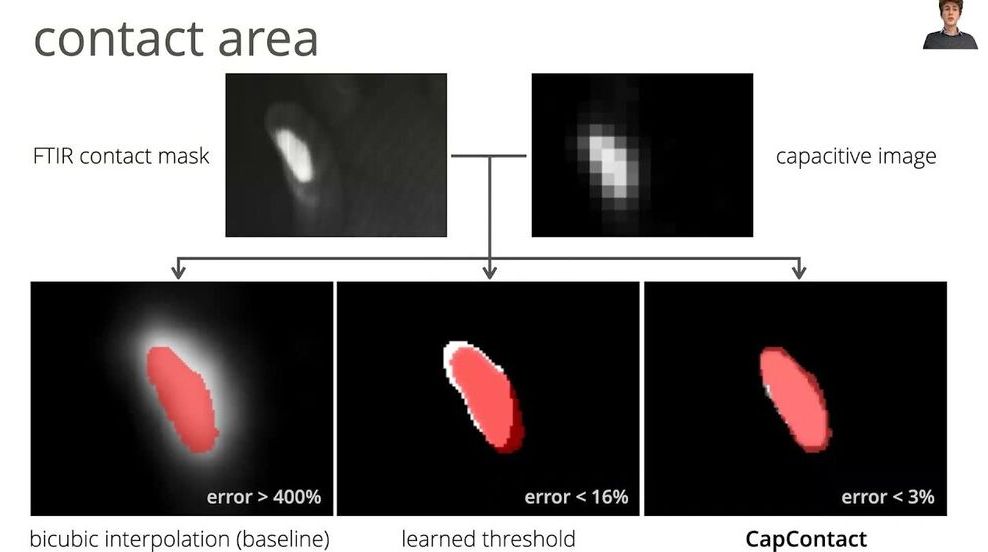

ETH computer scientists have developed a new AI solution that enables touchscreens to sense with eight times higher resolution than current devices. Thanks to AI, their solution can infer much more precisely where fingers touch the screen.

Quickly typing a message on a smartphone sometimes results in hitting the wrong letters on the small keyboard or on other input buttons in an app. The touch sensors that detect finger input on the touch screen have not changed much since they were first released in mobile phones in the mid-2000s.

In contrast, the screens of smartphones and tablets now provide unprecedented visual quality, which becomes more evident with each new generation of devices: higher color fidelity, higher resolution, crisper contrast. A latest-generation iPhone, for example, has a display resolution of 2532×1170 pixels. But the touch sensor it integrates can only detect input with a resolution of around 32×15 pixels, almost 80 times lower than the display resolution along each axis. “And here we are, wondering why we make so many typing errors on the small keyboard? We think that we should be able to select objects with pixel accuracy through touch, but that’s certainly not the case,” says Christian Holz, ETH computer science professor from the Sensing, Interaction & Perception Lab (SIPLAB), in an interview in the ETH Computer Science Department’s “Spotlights” series.
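The arithmetic behind the "almost 80 times" figure can be checked with a minimal Python sketch. The display and touch-sensor resolutions are the ones quoted above; the variable names are illustrative only. The factor holds along each axis of the screen. Counted as total display pixels per touch-sensing point, the gap is far larger.

```python
# Resolutions quoted in the article (long side x short side).
display = (2532, 1170)  # display pixels
touch = (32, 15)        # approximate touch-sensor grid

# Ratio along each axis: this is where "almost 80 times" comes from.
ratio_long = display[0] / touch[0]
ratio_short = display[1] / touch[1]

# Ratio of total display pixels to total touch-sensing points.
ratio_total = (display[0] * display[1]) / (touch[0] * touch[1])

print(f"long axis:  {ratio_long:.1f}x")
print(f"short axis: {ratio_short:.1f}x")
print(f"total:      {ratio_total:.0f}x")
```

Both per-axis ratios come out just under 80, which matches the article's wording. The total ratio runs into the thousands.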

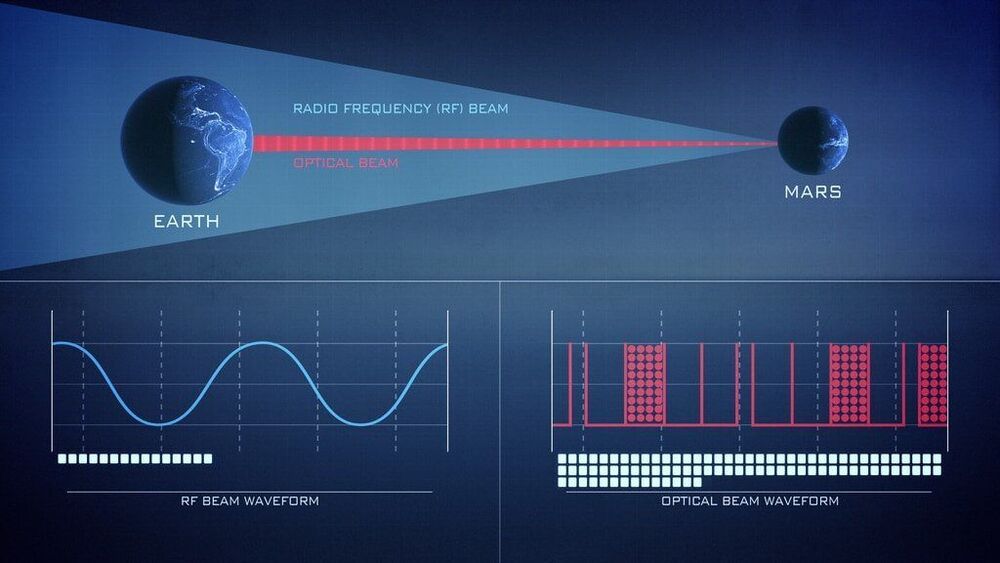

Launching this summer, NASA’s Laser Communications Relay Demonstration (LCRD) will showcase the dynamic powers of laser communications technologies. With NASA’s ever-increasing human and robotic presence in space, missions can benefit from a new way of “talking” with Earth.

Since the beginning of spaceflight in the 1950s, NASA missions have leveraged radio frequency communications to send data to and from space. Laser communications, also known as optical communications, will further empower missions with unprecedented data capabilities.

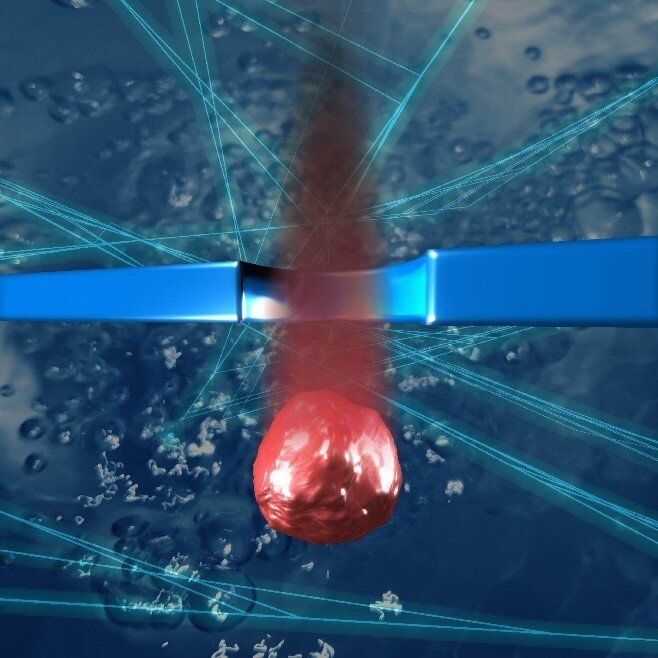

Scientists from the Institute of Scientific and Industrial Research at Osaka University have used machine-learning methods to enhance the signal-to-noise ratio in data collected when tiny spheres are passed through microscopic nanopores cut into silicon substrates. This work may lead to much more sensitive data collection when sequencing DNA or detecting small concentrations of pathogens.

Miniaturization has opened the possibility for a wide range of diagnostic tools, such as point-of-care detection of diseases, to be performed quickly and with very small samples. For example, unknown particles can be analyzed by passing them through nanopores and recording tiny changes in the electrical current. However, the intensity of these signals can be very low, and is often buried under random noise. New techniques for extracting the useful information are clearly needed.

Now, scientists from Osaka University have used deep learning to “denoise” nanopore data. Most machine learning methods need to be trained with many “clean” examples before they can interpret noisy datasets. However, using a technique called Noise2Noise, which was originally developed for enhancing images, the team was able to improve resolution of noisy runs even though no clean data was available. Deep neural networks, which act like layered neurons in the brain, were utilized to reduce the interference in the data.
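The idea behind training without clean data can be illustrated with a toy Python sketch. This is not the Osaka team's method: a one-parameter exponential smoother stands in for the deep neural network, and the trace shape, noise level, and all function names are assumptions made for illustration. The sketch tunes the smoother by comparing its output only with a second, independently noisy recording of the same signal, then checks the result against the clean trace, which is never used for tuning.

```python
import random

random.seed(0)

def make_clean_trace(n=2000, period=200, width=100, depth=2.0):
    """Hypothetical nanopore-like trace: flat baseline with rectangular dips."""
    return [-depth if (t % period) >= period - width else 0.0 for t in range(n)]

def add_noise(trace, sigma=1.0):
    """Return an independent noisy recording of the same underlying trace."""
    return [s + random.gauss(0.0, sigma) for s in trace]

def smooth(trace, alpha):
    """Exponential smoothing; alpha=1.0 returns the input unchanged."""
    out, prev = [], trace[0]
    for x in trace:
        prev = alpha * x + (1.0 - alpha) * prev
        out.append(prev)
    return out

def mse(a, b):
    """Mean squared error between two equal-length traces."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

clean = make_clean_trace()
noisy_a = add_noise(clean)  # denoiser input
noisy_b = add_noise(clean)  # training target: also noisy, never clean

# "Training": pick alpha by comparing the output with the second noisy copy only.
alphas = [0.05, 0.1, 0.2, 0.3, 0.5, 0.7, 1.0]
best_alpha = min(alphas, key=lambda a: mse(smooth(noisy_a, a), noisy_b))

# Evaluation only: the clean trace checks the result, it was not used to fit it.
denoised = smooth(noisy_a, best_alpha)
mse_noisy = mse(noisy_a, clean)
mse_denoised = mse(denoised, clean)
print(f"alpha={best_alpha}, error before={mse_noisy:.3f}, after={mse_denoised:.3f}")
```

The sketch works because the noise in the second recording is zero-mean and independent of the first. The error measured against the noisy target therefore differs from the error against the clean trace by a roughly constant offset, so the same smoother setting wins in both cases.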

Researchers at Intel Labs have modded Grand Theft Auto V using a neural network and a dataset of photos of German cities. The results look unsettlingly photorealistic.

The experimental network will serve as a backbone for the China Environment for Network Innovations (CENI), a national research facility connecting the largest cities in China, and will be used to verify the performance and security of future network communications technology before commercial use.

Experimental network connects 40 leading universities to prepare for an AI-driven society five to 10 years down the track.

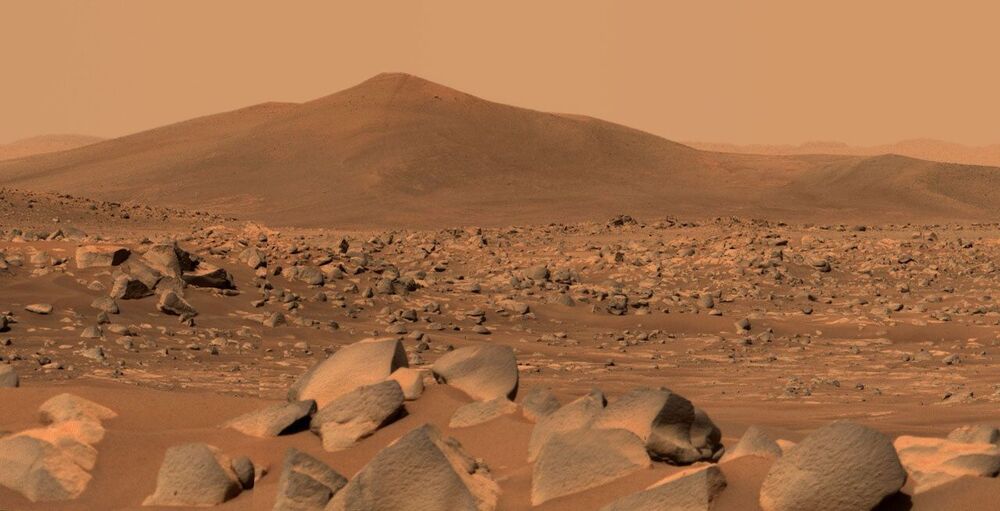

NASA’s newest Mars rover is beginning to study the floor of an ancient crater that once held a lake.

NASA’s Perseverance rover has been busy serving as a communications base station for the Ingenuity Mars Helicopter and documenting the rotorcraft’s historic flights. But the rover has also been busy focusing its science instruments on rocks that lie on the floor of Jezero Crater.

What insights they turn up will help scientists create a timeline of when an ancient lake formed there, when it dried, and when sediment began piling up in the delta that formed in the crater long ago. Understanding this timeline should help date rock samples – to be collected later in the mission – that might preserve a record of ancient microbes.

Researchers in Singapore have found a way of controlling a Venus flytrap using electric signals from a smartphone, an innovation they hope will have a range of uses from robotics to employing the plants as environmental sensors.

Luo Yifei, a researcher at Singapore’s Nanyang Technological University (NTU), showed in a demonstration how a signal from a smartphone app sent to tiny electrodes attached to the plant could make its trap close as it does when catching a fly.

“Plants are like humans, they generate electric signals, like the ECG (electrocardiogram) from our hearts,” said Luo, who works at NTU’s School of Materials Science and Engineering.