Despite years of hype, virtual reality headsets have yet to topple TV or computer screens as the go-to devices for video viewing.

One reason: VR can make users feel sick. Nausea and eye strain can result because VR creates an illusion of 3D viewing even though the user is in fact staring at a fixed-distance 2D display. The solution for better 3D visualization could lie in a 60-year-old technology remade for the digital world: holograms.

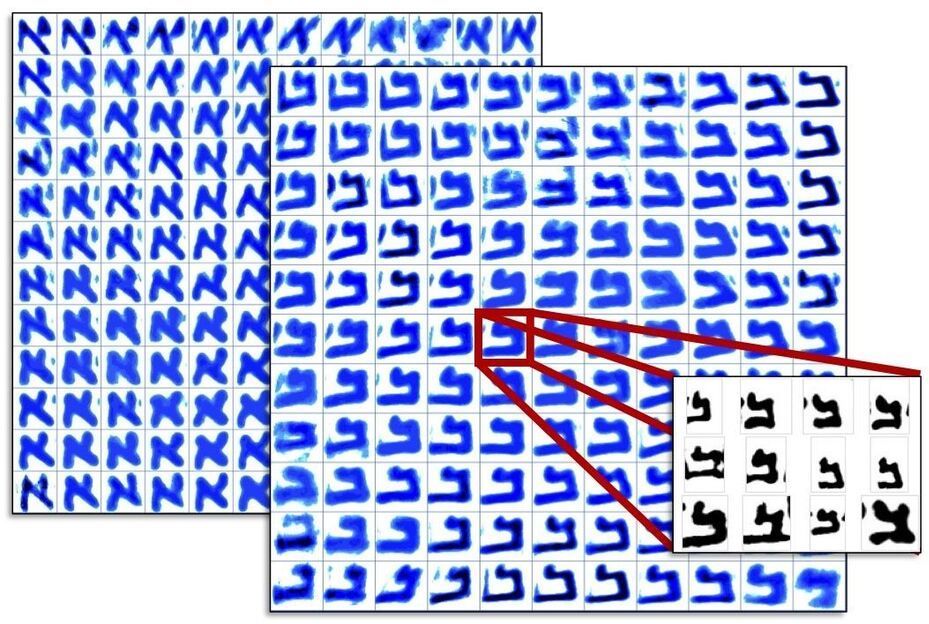

Holograms deliver an exceptional representation of the 3D world around us. Plus, they’re beautiful. (Go ahead, check out the holographic dove on your Visa card.) Holograms offer a shifting perspective based on the viewer’s position, and they allow the eye to adjust focal depth to alternately focus on foreground and background.