ETH computer scientists have developed a new AI solution that lets touchscreens sense input at eight times higher resolution than current devices. Using AI, their solution infers much more precisely where fingers touch the screen.

Typing a message quickly on a smartphone sometimes means hitting the wrong letters on the small keyboard or the wrong input buttons in an app. The touch sensors that detect finger input on the touchscreen have changed little since they were first introduced in mobile phones in the mid-2000s.

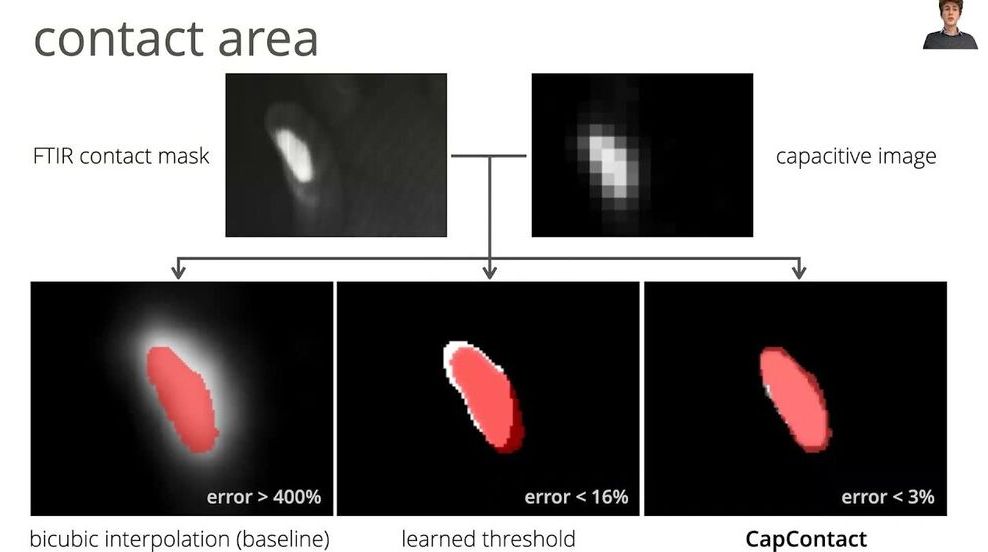

In contrast, the screens of smartphones and tablets now offer unprecedented visual quality, and each new generation of devices improves it further: higher color fidelity, higher resolution, crisper contrast. A latest-generation iPhone, for example, has a display resolution of 2532×1170 pixels. But its integrated touch sensor can only detect input at a resolution of around 32×15 pixels, almost 80 times lower than the display resolution along each axis. “And here we are, wondering why we make so many typing errors on the small keyboard? We think that we should be able to select objects with pixel accuracy through touch, but that’s certainly not the case,” says Christian Holz, ETH computer science professor at the Sensing, Interaction & Perception Lab (SIPLAB), in an interview in the ETH Computer Science Department’s “Spotlights” series.
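To make the resolution gap concrete, the short sketch below recomputes it from the two figures quoted above, taking both resolutions in the order the article lists them. It is illustrative arithmetic only. The "almost 80 times" figure holds per axis (2532/32 and 1170/15). Counted in total pixels, the gap is far larger, because the two per-axis factors multiply.

```python
# Display and touch-sensor resolutions as quoted in the article,
# listed in the same order for both (long side x short side).
DISPLAY = (2532, 1170)
TOUCH = (32, 15)


def per_axis_ratio(display, touch):
    """Return how many display pixels fall on one touch-sensor cell along each axis."""
    return tuple(d / t for d, t in zip(display, touch))


def area_ratio(display, touch):
    """Return total display pixels divided by total touch-sensor cells."""
    return (display[0] * display[1]) / (touch[0] * touch[1])


if __name__ == "__main__":
    long_axis, short_axis = per_axis_ratio(DISPLAY, TOUCH)
    print(f"per axis: {long_axis:.1f}x by {short_axis:.1f}x")
    print(f"total: {area_ratio(DISPLAY, TOUCH):.0f}x")
```

Running it prints roughly 79.1x by 78.0x per axis, and about 6172x when comparing total pixel counts, so each touch-sensor cell covers a patch of several thousand display pixels.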