One of the most important breakthroughs, perhaps, may be AI that can understand humans. http://ow.ly/WLEBo

A new kind of security guard is on patrol in Silicon Valley: Crime-fighting robots that look like they’re straight out of a sci-fi movie.

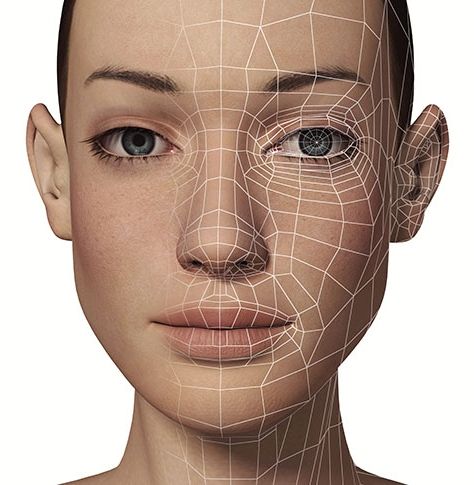

Analyzing expressions is an increasingly hot topic among tech companies.

It’s not clear what it plans to do with it yet, but Apple has gobbled up a startup whose technology can read facial expressions.

The tech giant has reportedly acquired Emotient, a San Diego-based company that uses artificial-intelligence technology to detect emotion from facial expressions, and Apple confirmed the deal to The Wall Street Journal. The company’s technology has primarily been used by advertisers, doctors, and retailers, though it’s not clear what Apple plans to do with it.

Johnny Matheny is the first person to attach a mind-controlled prosthetic limb directly to his skeleton. After losing his arm to cancer in 2008, Johnny signed up for a number of experimental surgeries to prepare himself to use a DARPA-funded prosthetic prototype. The Modular Prosthetic Limb, developed by the Johns Hopkins Applied Physics Laboratory, allows Johnny to regain almost complete range of motion through the Bluetooth-controlled arm. (Video by Drew Beebe, Brandon Lisy) (Source: Bloomberg)

“Apple Inc. has purchased Emotient Inc., a startup that uses artificial-intelligence technology to read people’s emotions by analyzing facial expressions.”

Yup, you read that headline right. A Chinese UAV company named Ehang just unveiled the world’s first autonomous flying taxi.

The plainly named 184 drone is essentially a giant quadcopter designed to carry a single passenger, and it needs no pilot. Inside the cockpit, there are absolutely zero controls. No joystick, no steering wheel, no buttons, switches, or control panels: just a seat and a small tablet stand.

To fly it, the user simply hops in the cockpit, fires up the accompanying mobile app, and chooses a destination. From that point onward, you’re just along for the ride. The drone takes care of all the piloting and navigation autonomously — so you supposedly don’t need a pilot’s license to use it.

When will autonomous cars actually be on our roads? It seems that the date is far closer than anticipated.

This futuristic autonomous car can communicate with its surroundings, and it charges as it drives…

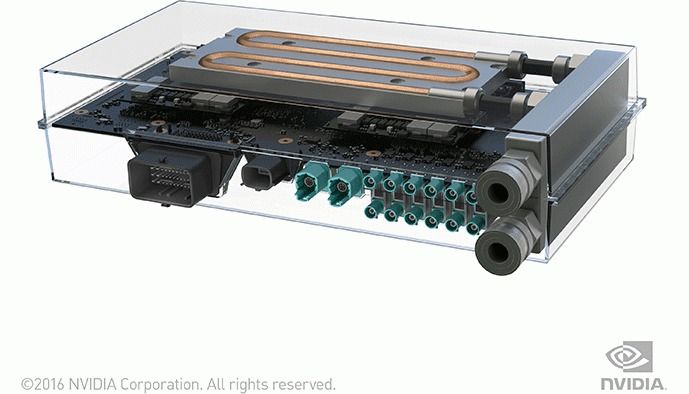

Nvidia took pretty much everyone by surprise when it announced it was getting into self-driving cars; it’s just not what you expect from a company that’s made its name off selling graphics cards for gamers.

At this year’s CES, it’s taking the focus on autonomous cars even further.

The company today announced the Nvidia Drive PX2. According to CEO Jen-Hsun Huang, it’s basically a supercomputer for your car. Hardware-wise, it’s made up of 12 CPU cores and four GPUs, all liquid-cooled. That amounts to about 8 teraflops of processing power, which is as powerful as six Titan X graphics cards and comparable to ‘about 150 MacBook Pros’ for self-driving applications.

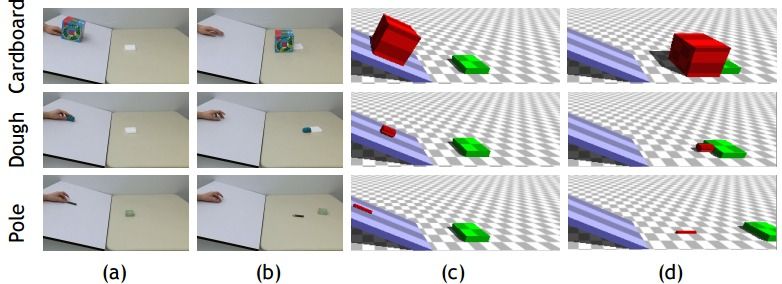

We humans take for granted our remarkable ability to predict things that happen around us. For example, consider Rube Goldberg machines: One of the reasons we enjoy them is that we can watch a chain reaction of objects fall, roll, slide and collide, and anticipate what happens next.

But how do we do it? How do we effortlessly absorb enough information from the world to be able to react to our surroundings in real-time? And, as a computer scientist might then wonder, is this something that we can teach machines?

That last question has recently been partially answered by researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL), who have developed a computational model that is just as accurate as humans at predicting how objects move.