Tesla announced software updates that will enhance cars’ self-driving abilities.

Runaway AI and robots, in particular, do not worry me at this point. When we have quantum-based AI and robots, meaning they can fully operate themselves, that’s when we will truly have to consider the real risks and ensure we have proper safeguards. The bigger issue with current AI and robots that are not built on a quantum platform or technology is hacking. Hacking by others is the immediate threat to AI and robots.

Interesting. This could change, and accelerate, our efforts around bot technology in humans, as well as in other areas of robotic technology.

(Photo: Windell Oskay | Flickr)

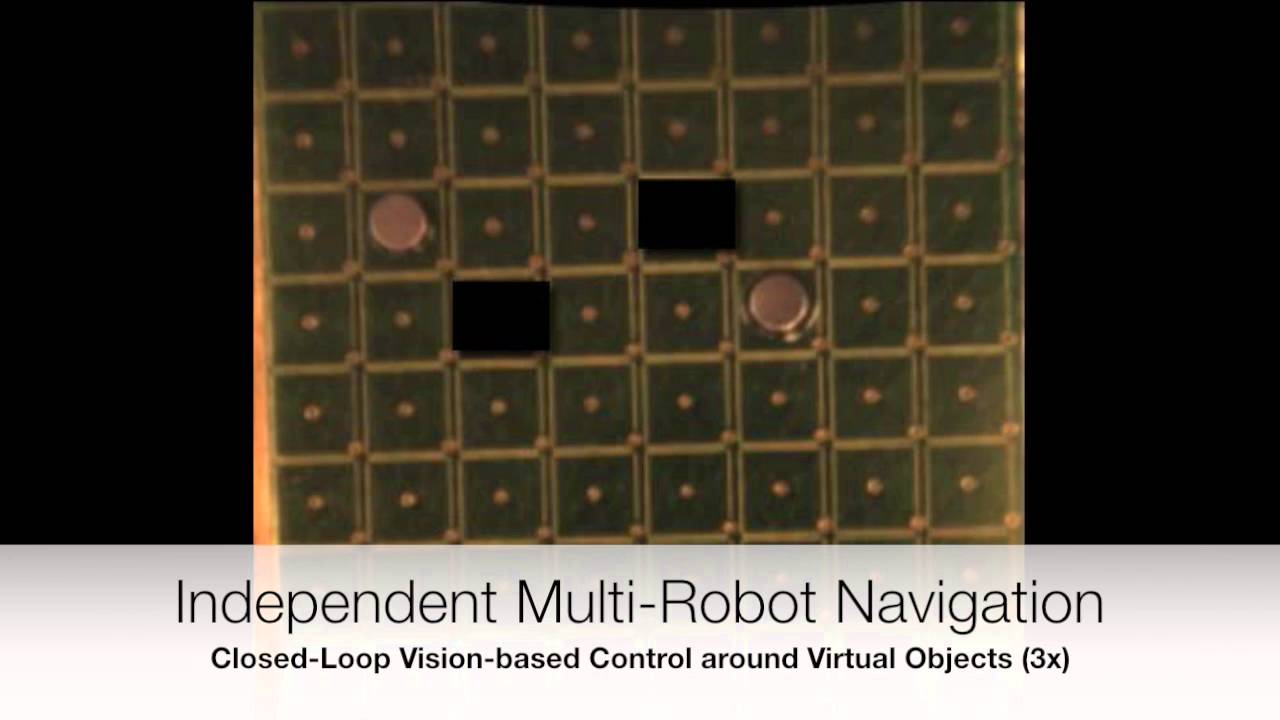

Like Jedi Knights, researchers at Purdue University are using the force — force fields, that is. The team of scientists has discovered a way to control tiny robots with the help of individual magnetic fields, which, in turn, might help us one day learn how to control entire groups of microbots and nanobots in areas like medicine or even manufacturing.

While the idea of controlling microbots might seem simple, it’s a deceptively complicated goal, especially when the bots are too small to carry even a tiny battery to power them. This is where the magnetic force fields come into play: they can provide enough energy to move the microbots about, “like using mini force fields.”

Watching this video, I quickly identified where the US government could truly cut its budget.

I legitimately have fun watching these robot arms sort batteries because they are just so damn good at their jobs. A conveyor belt pushes out an endless stream of batteries that desperately need sorting, and the robot arms somehow never fall behind. One robot arm grabs the batteries scattered all over the belt and assembles them into sets of 4, while the other arm snatches those sets and puts them aside. It’s great because the whole sorting system isn’t totally uniform, so the robot arms look like they’re frantically racing against the clock.

I could see the value of AI in helping with a whole host of addictions, compulsive disorders, and similar conditions. At its core, AI often looks for patterns and predicts outcomes: the next step to take, what you or I will want to do, or how we will react to something. So leveraging AI as a tool to find or develop new solutions for conditions like OCD or addiction makes real sense.

New York-based AiCure, which holds 12 patents for artificially intelligent software platforms that aim to improve patient outcomes by targeting medication adherence, announced the closing of a $12.25 million funding round Monday.

The company’s software was built with help from $7 million in competitive grants from four National Institutes of Health organizations, awarded to spur tech developments that would have a significant impact on drug research and therapy. The National Institute on Drug Abuse awarded AiCure $1 million in 2014 to help launch a major study into the efficacy of using the company’s platform to monitor and intervene with patients receiving medication as maintenance therapy for addiction.

Adherence to such therapies is associated with improved recovery, but patients often take improper doses or sell the drugs to others. To address this, AiCure’s platform connects patients, via their own devices, with artificial intelligence software that determines whether a medication is being taken as prescribed. The platform has been shown to be feasible for use across various patient populations, including elderly patients and participants in schizophrenia and HIV prevention trials, according to a news release.

As I have mentioned in some of my other reports and writings, infrastructure (power grids, transportation, social services, etc.) is a key area that we need to modernize, and we need to get funding in place soon given the changes that are coming. The hacking of Russia’s own power stations will be nothing compared to what becomes possible once the more sophisticated releases of the quantum internet and quantum platforms finally reach the mainstream. Last week someone asked me what keeps me up at night; I told them it is our infrastructure, because we have not been planning or modernizing it to handle the changes coming in the next 5 years, much less the next 7.

With cyberattacks gaining in sophistication and volume, we can expect to see a range of new targets in the year ahead.

Good report from the Brookings Institution on the longer-term IT transformation. It highlights the need for countries and industry to be prepared for the magnitude of the transformation on the horizon. I support the view that programs will indeed be needed to retool, educate, and support workers who will be displaced. There is also a larger threat: we must ensure that our critical infrastructure, such as power grids, banks, the military, and social programs, is modernized for the changes coming from AI and quantum technologies.

Kemal Dervis examines the impact of artificial intelligence on our economies and labor markets.

Makes sense.

Moth Orchid flower (credit: Imgur.com)

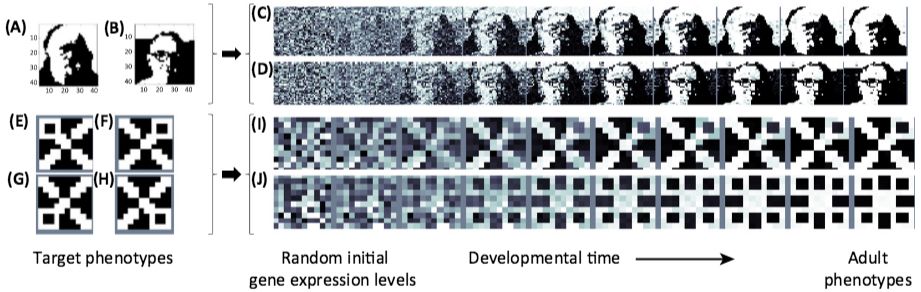

A computer scientist and biologist propose to unify the theory of evolution with learning theories to explain the “amazing, apparently intelligent designs that evolution produces.”

The scientists, University of Southampton School of Electronics and Computer Science professor Richard Watson and Eötvös Loránd University (Budapest) professor of biology Eörs Szathmáry, say they’ve found that it’s possible for evolution to exhibit some of the same intelligent behaviors as learning systems, including neural networks.

In the early days of the 1960s space race, NASA used satellites to map the geography of the moon. A better understanding of its geology, however, came when men actually walked on the moon, culminating with astronaut and geologist Harrison Schmitt exploring the moon’s surface during the Apollo 17 mission in 1972.

Image credit: Scientific American

In the modern era, Dr. Gregory Hickok is one neuroscientist who believes the field of neuroscience is pursuing comparable advances. While scientists have historically developed a geographic map of the brain’s functional systems, Hickok says computational neuroanatomy is digging deeper into the geology of the brain to provide an understanding of how the different regions interact computationally to give rise to complex behaviors.

“Computational neuroanatomy is kind of working towards that level of description from the brain map perspective. The typical function maps you see in textbooks are cartoon-like. We’re trying to take those mountain areas and, instead of relating them to labels for functions like language, we’re trying to map them on — and relate them to — stuff that the computational neuroscientists are doing.”

Hickok pointed to a number of advances that have already been made through computational neuroanatomy: mapping visual systems to determine how the visual cortex can code information and perform computations, as well as mapping neurally realistic approximations of circuits that actually mimic motor control, among others. In addition, researchers are building spiking network models, which simulate individual neurons. Scientists use thousands of these neurons in simulations to operate robots in a manner comparable to how the brain might perform the job.
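To make “simulating individual neurons” a little more concrete, here is a minimal sketch of one common textbook choice, the leaky integrate-and-fire (LIF) neuron. The article does not say which neuron model these researchers use, so the model and every parameter value (membrane time constant, resistance, threshold, reset voltage) are illustrative assumptions. Each neuron here is independent; an actual spiking network would also wire neurons together through synapses.

```python
def simulate_lif(input_currents, n_steps=1000, dt=1e-4, tau_m=0.02,
                 v_rest=-0.065, v_reset=-0.065, v_thresh=-0.050, r_m=1e7):
    """Simulate independent leaky integrate-and-fire neurons.

    input_currents: constant input current per neuron, in amperes.
    All parameter values are illustrative assumptions, not from the article.
    Returns the number of spikes each neuron fires over n_steps * dt seconds.
    """
    counts = []
    for i_in in input_currents:
        v = v_rest          # membrane potential in volts, starting at rest
        n_spikes = 0
        for _ in range(n_steps):
            # Forward-Euler step of tau_m * dv/dt = -(v - v_rest) + r_m * i_in
            v += dt * (-(v - v_rest) + r_m * i_in) / tau_m
            if v >= v_thresh:   # threshold crossed: count a spike, then reset
                n_spikes += 1
                v = v_reset
        counts.append(n_spikes)
    return counts

# 0.1 s of simulated time for four neurons with increasing input current
print(simulate_lif([0.0, 1e-9, 2e-9, 5e-9]))
```

With these assumed values, the first two neurons never fire because their steady-state voltage stays below the -50 mV threshold, while stronger input drives more frequent spiking.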

That research is driving more innovation in artificial intelligence, says Hickok. For example, brain-inspired models are being used to develop better AI systems for storing and retrieving information, as well as automated speech recognition systems. This sort of work can also be used to develop better cochlear implants or other kinds of neural prostheses, which are just starting to be explored.

“In terms of neural-prostheses that can take advantage of this stuff, if you look at patterns and activity in neurons or regions in cortex, you can decode information from those patterns of activity, (such as) motor plans or acoustic representation,” Hickok said. “So it’s possible now to implant an electrode array in the motor cortex of an individual who is locked in, so to speak, and they can control a robotic arm.”
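As one illustration of decoding a motor plan from a pattern of activity, here is a sketch of population-vector decoding, a classic textbook technique for estimating movement direction from motor-cortex firing rates. It is swapped in purely as an example; the article does not say which decoder these implants use. The cosine tuning curve, baseline and gain values, and the 16-neuron population are all assumptions.

```python
import math

def tuning_rates(direction, preferred, baseline=10.0, gain=8.0):
    """Assumed cosine tuning: each neuron fires most for its preferred
    direction (radians). Returns one firing rate per neuron."""
    return [baseline + gain * math.cos(direction - p) for p in preferred]

def population_vector(rates, preferred, baseline=10.0):
    """Weight each neuron's preferred-direction unit vector by its rate above
    baseline, sum the vectors, and return the angle of the result."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x)

# Hypothetical population with evenly spaced preferred directions
n_neurons = 16
preferred = [2 * math.pi * k / n_neurons for k in range(n_neurons)]
rates = tuning_rates(1.0, preferred)  # activity for a 1.0-radian reach
print(population_vector(rates, preferred))
```

With evenly spaced preferred directions and noise-free rates, the decoder recovers the intended direction; real recordings are noisy, which is why practical systems use more elaborate decoders.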

More specifically, Hickok is interested in applying computational neuroanatomy to speech and language functions. In some cases where patients have lost the ability to produce fluent speech, he says the cause is a disconnection between still-intact brain areas that are no longer “talking to each other.” Once we understand how these circuits are organized and what they’re doing computationally, Hickok believes we might one day be able to insert electrode arrays and reconnect those brain areas as a form of rehabilitation.

Looking at future applications in artificial intelligence, Hickok says he expects continued development in neural prostheses such as cochlear implants, artificial retinas, and artificial motor control circuits. One hurdle is that scientists are still working out how to simulate the way the brain does its computations; the “squishy” nature of brain matter seems to operate differently from the precision of digital computers.

Though multiple global brain projects are underway and progress is being made (Wired’s Katie Palmer gives a succinct overview), Hickok emphasizes that we’re still nowhere close to actually re-creating the human mind. “Presumably, this is what evolution has done over millions of years to configure systems that allow us to do lots of different things and that is going to (sic) take a really long time to figure out,” he said. “The number of neurons involved, 80 billion in the current estimate, trillions of connections, lots and lots of moving parts, different strategies for coding different kinds of computations… it’s just ridiculously complex and I don’t see that as something that’s easily going to give up its secrets within the next couple of generations.”

The Google billionaire believes companies need to start working together on developing artificial intelligence.