This is Disney’s wall-riding robot.

https://www.youtube.com/watch?v=e9P9_QM8cN8

Futurism presents its annual This Year in Science immersive experience! This year’s themes include robot intelligence, space exploration, drones, CRISPR (a breakthrough gene editing tool), plus a special ‘Futurist of the Year’ award.

BY: DANIEL KORN

The very mention of “nanobots” can bring up a certain future paranoia in people—undetectable robots under my skin? Thanks, but no thanks. Professor Ido Bachelet of Israel’s Bar-Ilan University confirms that while tiny robots being injected into a human body to fight disease might sound like science fiction, it is in fact very real.

Cancer treatment as we know it is problematic because it targets a large area. Chemo and radiation therapies are like setting off a bomb: they destroy cancerous cells, but in the process also damage the healthy ones surrounding them. This is why these therapies are sometimes as harmful as the cancer itself. Thus, the dilemma with curing cancer is not in finding treatments that can wipe out the cancerous cells, but in finding ones that can do so without creating a bevy of additional medical issues. As Bachelet himself notes in a TEDMED talk: “searching for a safer cancer drug is basically like searching for a gun that kills only bad people.”

[via: http://imgur.com/]

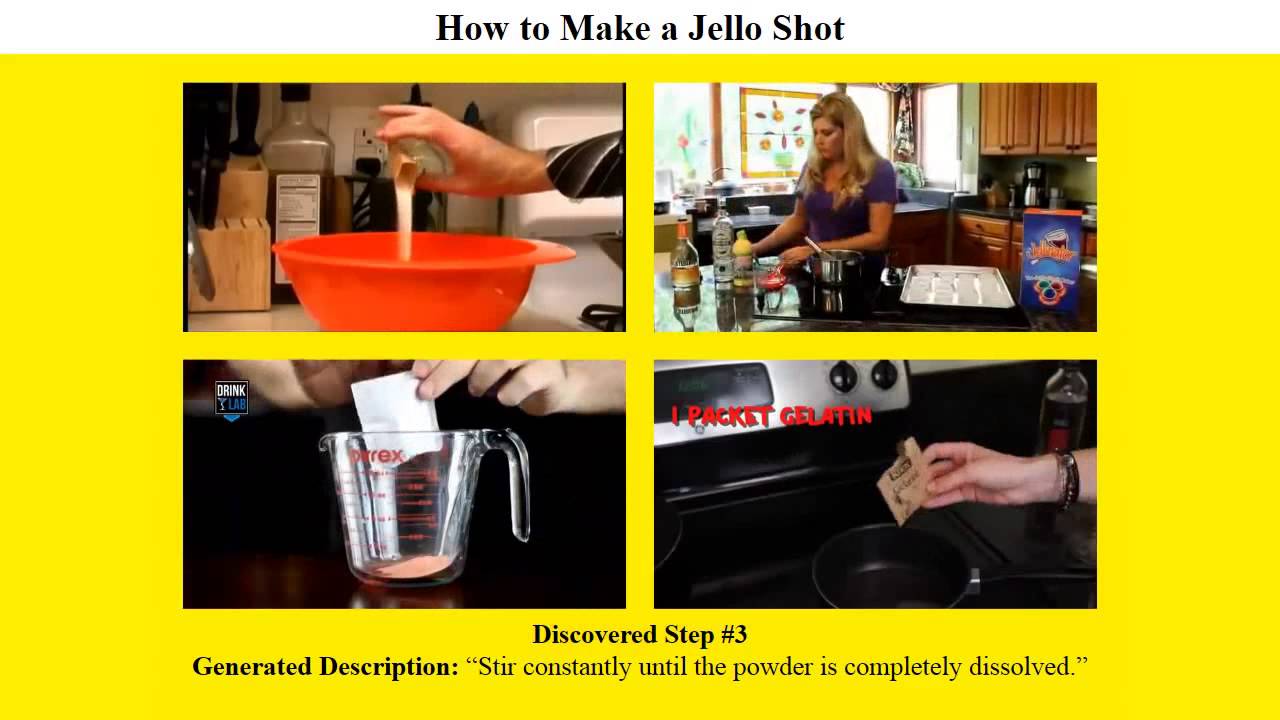

In the “RoboWatch” project at Cornell, researchers let robots search the Internet for online how-to videos to instruct themselves on how to complete certain tasks.

Cornell researchers are using instructional videos from the Internet to teach robots the step-by-step instructions required to perform certain tasks. This ability may become necessary in a future where robots are expected to take on menial labor, including mundane tasks such as cooking, cleaning, and other household chores.

Robots such as these could be beneficial in assisting the elderly and the disabled, though it remains to be seen when (and if) they will truly become available for use. Hopefully, these early tests will help answer those questions.

‘In a surprisingly polemic report, ITIF think-tank president Robert Atkinson misinterprets this growing altruistic focus of AI researchers as innovation-stifling “Luddite-induced paranoia.”’

The report released by the ITIF think tank suffers from many problems. It accuses Elon Musk of putting at risk research into the “cars that Google and TESLA are testing”, entirely missing the irony. IMHO, the nomination is not the product of research into what Nick Bostrom, Stephen Hawking, Bill Gates, & Elon Musk actually say.

Each year, for its annual Luddite award, the ITIF produces a list of 10 groups it thinks are holding back technological progress. This year, the list included researchers who support AI safety research and autonomous weapons bans, and it called out Elon Musk, Bill Gates and Stephen Hawking by name. The ITIF doesn’t seem to see the irony of calling Elon Musk a Luddite when he has just landed a rocket, launched auto-piloted electric cars and invested in a $1 billion AI startup. Read the response written by Stuart Russell and Max Tegmark:

Watch out, news anchors! Chatbot #Xiaoice was upgraded to a weather forecaster. #AI Shanghai Dragon TV.

Self-driving cars could mean a lot of free time for drivers.

We won’t have to drive soon, so what’re we going to do in cars? Nissan has an answer: http://voc.tv/1P6L9zh

From autonomous cars to see-through semi trucks, car technology advanced at blazing speed this year. http://voc.tv/1P6L9zh