Computational biology is the combined application of mathematics, statistics and computer science to problems in biology, such as those in genetics, evolution, cell biology and biochemistry [1].

Isaac Newton and other premodern physicists saw space and time as separate, absolute entities — the rigid backdrops against which we move. On the surface, this made the mathematics behind Newton’s 1687 laws of motion look simple. He defined the relationship between force, mass and acceleration, for example, as $latex \vec{F} = m \vec{a}$.

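In contrast, when Albert Einstein revealed that space and time are not absolute but relative, the math seemed to get harder. Force, in relativistic terms, is given by the equation $latex \vec{F} = \gamma(\vec{v})^{3} m_{0}\,\vec{a}_{\parallel} + \gamma(\vec{v})\, m_{0}\,\vec{a}_{\perp}$, where $latex m_{0}$ is the rest mass, $latex \gamma(\vec{v}) = 1/\sqrt{1 - v^{2}/c^{2}}$ is the Lorentz factor, and $latex \vec{a}_{\parallel}$ and $latex \vec{a}_{\perp}$ are the components of the acceleration parallel and perpendicular to the velocity.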

But in a deeper sense, in the ways that truly matter to our fundamental understanding of the universe, Einstein’s theory represented a major simplification of the underlying math.
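The relativistic force formula above can be evaluated numerically to show how it collapses to Newton's $latex \vec{F} = m \vec{a}$ at everyday speeds. The sketch below makes a few assumptions. It uses SI units and represents vectors as plain 3-tuples. The helper names `lorentz_factor` and `relativistic_force` are illustrative, not from any library. It splits the acceleration into the parts parallel and perpendicular to the velocity exactly as the equation does.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def dot(u, v):
    """Dot product of two equal-length vectors given as tuples."""
    return sum(ui * vi for ui, vi in zip(u, v))


def lorentz_factor(v):
    """gamma(v) = 1 / sqrt(1 - |v|^2 / c^2); only valid for |v| < c."""
    return 1.0 / math.sqrt(1.0 - dot(v, v) / C**2)


def relativistic_force(m0, v, a):
    """F = gamma^3 m0 a_par + gamma m0 a_perp, where a_par and a_perp are the
    components of the acceleration a parallel and perpendicular to velocity v."""
    g = lorentz_factor(v)
    v_sq = dot(v, v)
    if v_sq == 0.0:
        # At rest gamma = 1 and the split does not matter: F reduces to m0 * a.
        return tuple(m0 * ai for ai in a)
    scale = dot(a, v) / v_sq
    a_par = tuple(scale * vi for vi in v)                # projection of a onto v
    a_perp = tuple(ai - pi for ai, pi in zip(a, a_par))  # remainder of a
    return tuple(g**3 * m0 * p + g * m0 * q for p, q in zip(a_par, a_perp))


# Everyday speed (30 m/s): the result is numerically indistinguishable from m * a.
print(relativistic_force(2.0, (30.0, 0.0, 0.0), (1.0, 1.0, 0.0)))
# At 0.6 c, gamma = 1.25, so the parallel part is scaled by gamma^3 = 1.953125
# and the perpendicular part by gamma = 1.25 (printed values are approximately these).
print(relativistic_force(1.0, (0.6 * C, 0.0, 0.0), (1.0, 1.0, 0.0)))
```

The asymmetry between the two terms is the point. The same acceleration costs more force along the direction of motion than across it, which is why the relativistic expression looks more complicated than Newton's.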

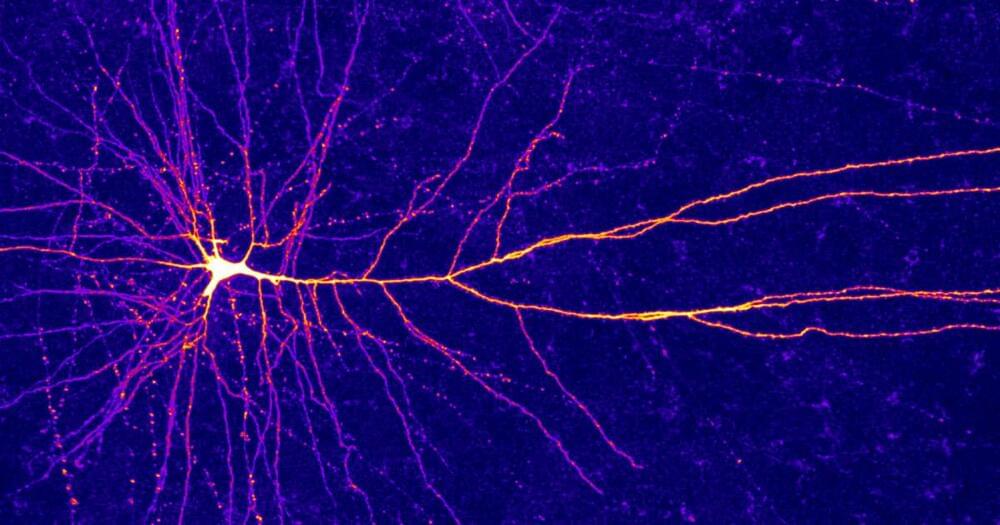

The information-processing capabilities of the brain are often reported to reside in the trillions of connections that wire its neurons together. But over the past few decades, mounting research has quietly shifted some of the attention to individual neurons, which seem to shoulder much more computational responsibility than once seemed imaginable.

The latest in a long line of evidence comes from scientists’ discovery of a new type of electrical signal in the upper layers of the human cortex. Laboratory and modeling studies have already shown that tiny compartments in the dendritic arms of cortical neurons can each perform complicated operations in mathematical logic. But now it seems that individual dendritic compartments can also perform a particular computation — “exclusive OR” — that mathematical theorists had previously categorized as unsolvable by single-neuron systems.
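The "unsolvable" label for exclusive OR applies to a single linear threshold unit. XOR is not linearly separable, so no choice of weights and threshold can make such a unit fire for exactly one active input. The minimal Python sketch below contrasts that with a unit whose response is non-monotonic: it fires for a moderate summed input but is suppressed when the input is too strong. Treating the new dendritic signal as having this window-shaped response is an assumption made for illustration, and the names `bump_unit`, `low` and `high` are hypothetical.

```python
from itertools import product


def threshold_unit(x1, x2, w1, w2, theta):
    """Classic 'dumb' neuron: fire (1) iff the weighted sum reaches theta."""
    return int(w1 * x1 + w2 * x2 >= theta)


def bump_unit(x1, x2, w=1.0, low=0.5, high=1.5):
    """Hypothetical non-monotonic compartment: fires only when the summed input
    falls inside the window [low, high), so too much input silences it."""
    s = w * x1 + w * x2
    return int(low <= s < high)


XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def computes_xor(unit):
    """True iff the two-input unit reproduces the XOR truth table."""
    return all(unit(a, b) == y for (a, b), y in XOR.items())


# The window-shaped unit computes XOR on its own.
print(computes_xor(bump_unit))  # True

# Brute-force check over a grid of weights and thresholds: no linear threshold
# unit computes XOR (guaranteed in general, since XOR is not linearly separable).
grid = [i / 2 for i in range(-6, 7)]  # -3.0 to 3.0 in steps of 0.5
found = any(
    computes_xor(lambda a, b: threshold_unit(a, b, w1, w2, t))
    for w1, w2, t in product(grid, repeat=3)
)
print(found)  # False
```

The grid search is only a sanity check. The general impossibility follows from geometry: no single straight line separates {(0,1), (1,0)} from {(0,0), (1,1)}.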

“I believe that we’re just scratching the surface of what these neurons are really doing,” said Albert Gidon, a postdoctoral fellow at Humboldt University of Berlin and the first author of the paper that presented these findings in Science earlier this month.

The discovery marks a growing need for studies of the nervous system to consider the implications of individual neurons as extensive information processors. “Brains may be far more complicated than we think,” said Konrad Kording, a computational neuroscientist at the University of Pennsylvania, who did not participate in the recent work. It may also prompt some computer scientists to reappraise strategies for artificial neural networks, which have traditionally been built based on a view of neurons as simple, unintelligent switches.

The Limitations of Dumb Neurons

In the 1940s and ’50s, a picture began to dominate neuroscience: that of the “dumb” neuron, a simple integrator, a point in a network that merely summed up its inputs. Branched extensions of the cell, called dendrites, would receive thousands of signals from neighboring neurons — some excitatory, some inhibitory. In the body of the neuron, all those signals would be weighted and tallied, and if the total exceeded some threshold, the neuron fired a series of electrical pulses (action potentials) that directed the stimulation of adjacent neurons.

At around the same time, researchers realized that a single neuron could also function as a logic gate, akin to those in digital circuits (although it still isn’t clear how much the brain really computes this way when processing information). A neuron was effectively an AND gate, for instance, if it fired only after receiving some sufficient number of inputs.
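The "dumb" integrator described above amounts to a weighted sum followed by a threshold. Seen that way, the AND gate is just a particular choice of weights and threshold. The sketch below is a minimal illustration in Python. The function names are hypothetical, and unit weights with a threshold equal to the number of inputs is one assumed way to get AND, not the only one.

```python
def threshold_neuron(inputs, weights, threshold):
    """Weigh and tally the inputs; fire (1) iff the total reaches the threshold.
    Positive weights play the role of excitatory inputs, negative of inhibitory."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(*inputs):
    """AND as a threshold neuron: unit weights and a threshold equal to the
    number of inputs, so it fires only when every input is active."""
    n = len(inputs)
    return threshold_neuron(inputs, [1.0] * n, float(n))


# Truth table for a two-input AND neuron.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b))

# An inhibitory input (negative weight) can veto firing: 1 + 1 - 1 = 1 < 1.5.
print(threshold_neuron([1, 1, 1], [1.0, 1.0, -1.0], 1.5))  # 0
```

Lowering the threshold below the number of inputs turns the same unit into an "at least k of n" detector. That matches the idea of firing after "some sufficient number of inputs".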

The dendritic arms of some human neurons can perform logic operations that once seemed to require whole neural networks.

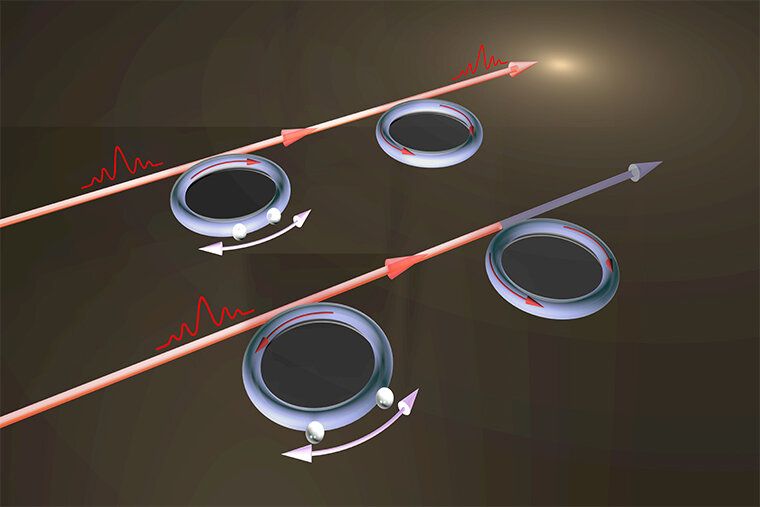

In the quantum realm, under some circumstances and with the right interference patterns, light can pass through opaque media.

This feature of light is more than a mathematical trick. Optical quantum memory, optical storage and other systems that depend on interactions of just a few photons at a time rely on the process, called electromagnetically induced transparency (EIT).

Because of its usefulness in existing and emerging quantum and optical technologies, researchers are interested in the ability to manipulate EIT without the introduction of an outside influence, such as additional photons that could perturb the already delicate system. Now, researchers at the McKelvey School of Engineering at Washington University in St. Louis have devised a fully contained optical resonator system that can be used to turn transparency on and off, allowing for a measure of control that has implications across a wide variety of applications.

Recent AI lecture by Stanford University.

What do web search, speech recognition, face recognition, machine translation, autonomous driving, and automatic scheduling have in common? These are all complex real-world problems, and the goal of artificial intelligence (AI) is to tackle these with rigorous mathematical tools.

In this course, you will learn the foundational principles that drive these applications and practice implementing some of these systems. Specific topics include machine learning, search, game playing, Markov decision processes, constraint satisfaction, graphical models, and logic. The main goal of the course is to equip you with the tools to tackle new AI problems you might encounter in life.

Instructors:

By Donna Lu

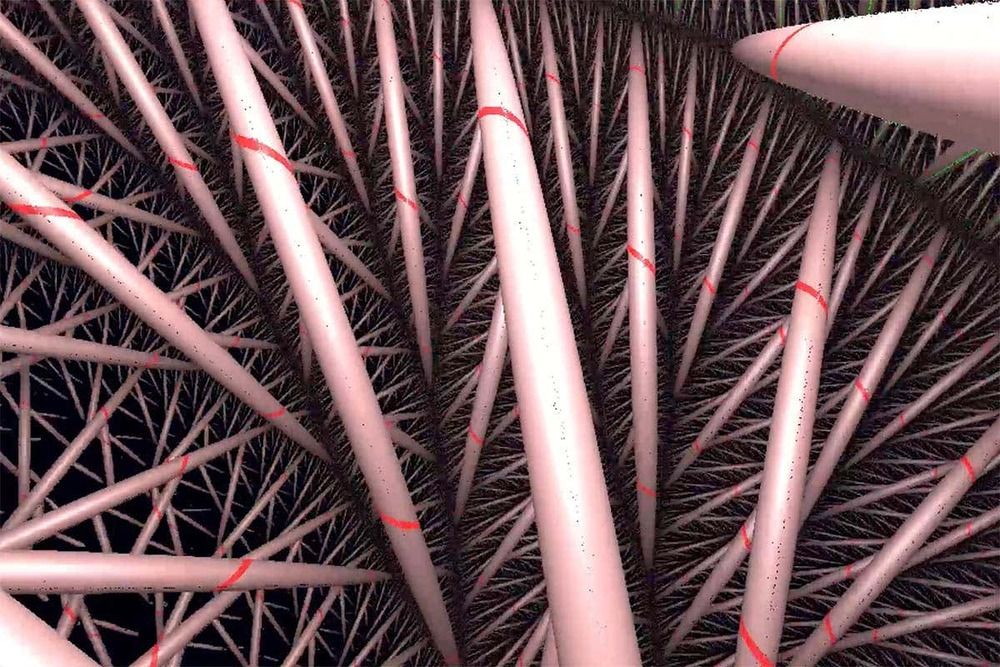

A trippy maths program that visualises the inside of strange 3D spaces could help us figure out the shape of the universe.

Henry Segerman at Oklahoma State University and his colleagues have been working to interactively map the inside of mathematical spaces known as 3-manifolds using a program called SnapPy.

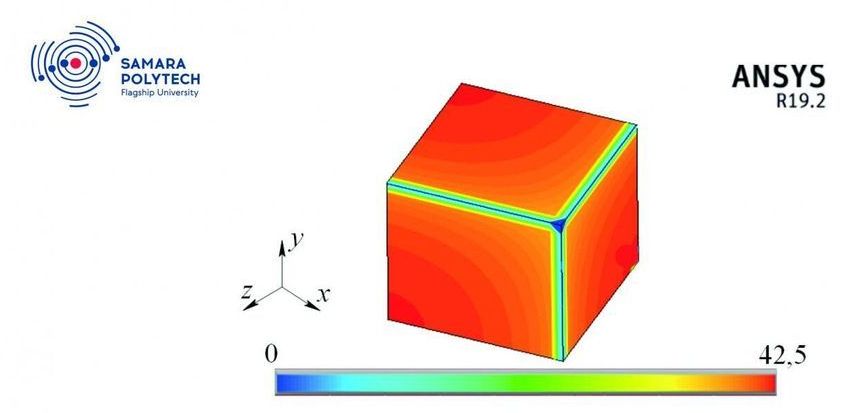

A team of scientists from the Research Center “Fundamental Problems of Thermophysics and Mechanics” at Samara Polytech is building new mathematical models, and developing methods to analyze them, for a wide range of local nonequilibrium transport processes in various physical systems. An innovative approach developed not long ago is based on a modern version of third-generation thermodynamics. The team's project, “Development, theoretical research and experimental verification of mathematical models of oscillatory processes, heat and mass transfer and thermomechanics with two- and multiphase delays,” was among the winners of a competition held by the Russian Foundation for Basic Research (RFBR). Recent results have been published in the journal Physica A: Statistical Mechanics and its Applications.

Interest in local nonequilibrium processes, whose models account for transport at the molecular level (the mean free path of a molecule, the momentum transfer rate, relaxation time, etc.), is driven by the need to carry out physical processes under extreme conditions. Examples include femtosecond exposure of matter to concentrated energy flows, ultra-low and ultra-high temperatures and pressures, and shock waves. Such processes are widely used in new technologies for producing nanomaterials and coatings with unique physicochemical properties that cannot be obtained by traditional methods (binary and multicomponent metal alloys, ceramics, polymeric materials, metal and semiconductor glasses, nanofilms, graphene, composite nanomaterials, etc.).
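It may help to see what a "phase delay" looks like in an equation. A standard textbook example is Tzou's dual-phase-lag law. The article does not give the Samara project's equations, so treating this as representative of their models is an assumption. In this law, the heat flux $latex \mathbf{q}$ at position $latex \mathbf{r}$ responds to the temperature gradient $latex \nabla T$ with two relaxation times, $latex \tau_q$ and $latex \tau_T$, and $latex k$ is the thermal conductivity:

$latex \mathbf{q}(\mathbf{r}, t + \tau_q) = -k\,\nabla T(\mathbf{r}, t + \tau_T)$

Expanding both sides to first order in the delays gives

$latex \mathbf{q} + \tau_q \dfrac{\partial \mathbf{q}}{\partial t} = -k\left(\nabla T + \tau_T \dfrac{\partial (\nabla T)}{\partial t}\right)$

Setting $latex \tau_T = 0$ leaves the single-delay Cattaneo-Vernotte law. Setting both delays to zero recovers Fourier's law, $latex \mathbf{q} = -k\,\nabla T$, which rests on the local-equilibrium assumption.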

“Classical thermodynamics is not suitable for describing processes that occur under local nonequilibrium conditions, since it is based on the principle of local equilibrium. Our project is important both for fundamental science and for practical applications,” explains the project manager, Professor Igor Kudinov. “To accomplish the tasks, we plan to create a new, unparalleled software package designed for 3D modeling of high-speed local nonequilibrium processes of heat, mass and momentum transfer. Thus, our method opens up wide possibilities for studying processes that are practically significant from the point of view of modern nanotechnology.”

The ability to process qubits is what lets a quantum computer perform certain computations that a classical binary computer effectively cannot, such as computations involving 500-digit numbers. Doing so quickly and on demand might allow for highly efficient traffic flow. It could also reduce current encryption keys to mere speed bumps for a computer able to break them almost instantly.

Multiply 1,048,589 by 1,048,601, and you’ll get 1,099,551,473,989. Does this blow your mind? Maybe it should! That 13-digit number, which is not itself prime because it is the product of those two factors, is the largest number ever factored by a quantum computer. It is one of a series of quantum computing-related breakthroughs (or at least claimed breakthroughs) achieved over the last few months of the decade.

An IBM computer factored this 13-digit number about two months after Google announced that it had achieved “quantum supremacy.” That is a clunky term for the claim, disputed by rivals including IBM, that Google has a quantum machine that performed a calculation ordinary computers cannot practically carry out.
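The arithmetic above is easy to verify classically. The two factors of 1,099,551,473,989 are only 12 apart. That makes Fermat's factorization method, which writes N as a² − b² = (a − b)(a + b), succeed on its very first step. The Python sketch below is a classical illustration only and says nothing about how the quantum computer performed the factorization. The function name `fermat_factor` is a hypothetical helper.

```python
import math


def fermat_factor(n):
    """Fermat's method: search upward from ceil(sqrt(n)) for an a such that
    a*a - n is a perfect square b*b, giving n = (a - b) * (a + b).
    Fastest when the two factors are close together."""
    if n % 2 == 0:
        return 2, n // 2
    a = math.isqrt(n)
    if a * a < n:
        a += 1  # start at ceil(sqrt(n))
    while True:
        b_sq = a * a - n
        b = math.isqrt(b_sq)
        if b * b == b_sq:
            return a - b, a + b
        a += 1


N = 1_099_551_473_989
p, q = fermat_factor(N)
print(p, q)        # 1048589 1048601
print(p * q == N)  # True
```

Here ceil(sqrt(N)) is 1,048,595, the midpoint of the two factors, and 1,048,595² − N = 36 = 6². The loop therefore returns (1,048,595 − 6, 1,048,595 + 6) immediately.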


A team of mathematicians from the University of North Carolina at Chapel Hill and Brown University has discovered a new phenomenon that generates a fluidic force capable of moving and binding particles immersed in density-layered fluids. The breakthrough offers an alternative to previously held assumptions about how particles accumulate in lakes and oceans and could lead to applications in locating biological hotspots, cleaning up the environment and even in sorting and packing.

How matter settles and aggregates under gravitation in fluid systems, such as lakes and oceans, is a broad and important area of scientific study, one that greatly impacts humanity and the planet. Consider “marine snow,” the shower of organic matter constantly falling from upper waters to the deep ocean. Not only is nutrient-rich marine snow essential to the global food chain, but its accumulations in the briny deep represent the Earth’s largest carbon sink and one of the least-understood components of the planet’s carbon cycle. There is also the growing concern over microplastics swirling in ocean gyres.

Ocean particle accumulation has long been understood as the result of chance collisions and adhesion. But an entirely different and unexpected phenomenon is at work in the water column, according to a paper published Dec. 20 in Nature Communications by a team led by professors Richard McLaughlin and Roberto Camassa of the Carolina Center for Interdisciplinary Applied Mathematics in the College of Arts & Sciences, along with their UNC-Chapel Hill graduate student Robert Hunt and Dan Harris of the School of Engineering at Brown University.