During development, cells seem to decode their fate through optimal information processing, which could hint at a more general principle of life.

Within a week, many world leaders went from downplaying the seriousness of coronavirus to declaring a state of emergency. Even the most capable nations seem both confused and exasperated, and their delayed responses have revealed incompetence and inefficiency the world over.

So this raises the question: why is it so difficult for us to comprehend the scale of what an unmitigated global pandemic could do? The answer likely relates to how we process abstract concepts like exponential growth. Part of the reason we’ve struggled so much to apply basic math to our practical environment is that humans think linearly. But like much of technology, biological systems such as viruses can grow exponentially.
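The gap between linear intuition and exponential growth can be made concrete with a short sketch. The starting value, the fixed increment, and the doubling factor below are hypothetical numbers chosen only for illustration; they are not epidemiological data.

```python
def linear_growth(initial, increment, steps):
    """Values that grow by a fixed amount at every step."""
    return [initial + increment * t for t in range(steps + 1)]


def exponential_growth(initial, factor, steps):
    """Values that are multiplied by a fixed factor at every step."""
    return [initial * factor ** t for t in range(steps + 1)]


if __name__ == "__main__":
    # Hypothetical scenario: 100 cases to start, ten steps of growth.
    linear = linear_growth(100, 100, 10)         # add 100 per step
    exponential = exponential_growth(100, 2, 10)  # double every step
    print("step   linear  exponential")
    for step, (lin, exp) in enumerate(zip(linear, exponential)):
        print(f"{step:>4} {lin:>8} {exp:>12}")
```

Both series start at the same value and look similar for the first step or two, which is why a linear guess feels reasonable early on. After ten steps the doubling series is larger by roughly two orders of magnitude.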

As we scramble to contain and fight the pandemic, we’ve turned to technology as our saving grace. In doing so, we’ve effectively hit a “fast-forward” button on many tech trends that were already in place. From remote work and virtual events to virus-monitoring big data, technologies that were perhaps only familiar to a fringe tech community are now entering center stage—and as tends to be the case with wartime responses, these changes are likely here to stay.

Mathematicians from the California Institute of Technology have solved an old problem related to a mathematical process called a random walk.

The team, which also worked with a colleague from Israel’s Ben-Gurion University, solved the problem in a rush after having a realization one evening. Lead author Omer Tamuz studies both economics and mathematics, using probability theory and ergodic theory as the link—a progressive and blended approach that this year’s Abel Prize-winning mathematicians helped to trailblaze.
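For readers unfamiliar with the term, a random walk is a path built from a sequence of random steps. The article does not say which kind of random walk the result concerns, so the sketch below shows only the simplest textbook case, a walk on the integers that moves one unit left or right with equal probability. The function name and parameters are illustrative assumptions.

```python
import random


def random_walk(steps, seed=None):
    """Simple symmetric random walk on the integers, starting at 0.

    Returns the list of visited positions, including the start.
    """
    rng = random.Random(seed)  # local generator so runs are reproducible
    position = 0
    path = [position]
    for _ in range(steps):
        position += rng.choice((-1, 1))  # step left or right with equal odds
        path.append(position)
    return path


if __name__ == "__main__":
    path = random_walk(1000, seed=42)
    print("final position:", path[-1])
    print("furthest distance from start:", max(abs(p) for p in path))
```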

The Royal Society is to create a network of disease modelling groups amid academic concern about the nation’s reliance on a single group of epidemiologists at Imperial College London whose predictions have dominated government policy, including the current lockdown.

It is to bring in modelling experts from fields as diverse as banking, astrophysics and the Met Office to build new mathematical representations of how the coronavirus epidemic is likely to spread across the UK — and how the lockdown can be ended.

The first public signs of academic tensions over Imperial’s domination of the debate came when Sunetra Gupta, professor of theoretical epidemiology at Oxford University, published a paper suggesting that some of Imperial’s key assumptions could be wrong.

Governments across the world are relying on mathematical projections to help guide decisions in this pandemic. Computer simulations account for only a fraction of the data analyses that modelling teams have performed in the crisis, Ferguson notes, but they are an increasingly important part of policymaking. But, as he and other modellers warn, much information about how SARS-CoV-2 spreads is still unknown and must be estimated or assumed — and that limits the precision of forecasts. An earlier version of the Imperial model, for instance, estimated that SARS-CoV-2 would be about as severe as influenza in necessitating the hospitalization of those infected. That turned out to be incorrect.
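To see why assumed parameters limit the precision of a forecast, consider a minimal sketch of the textbook SIR (susceptible, infected, recovered) compartment model, stepped forward one day at a time. This is not the Imperial model or any model named in the article, and the transmission rate `beta`, recovery rate `gamma`, and population figures are hypothetical values chosen for illustration.

```python
def simulate_sir(population, initial_infected, beta, gamma, days):
    """Discrete-time SIR model with a one-day time step.

    beta  : assumed transmission rate per day (hypothetical)
    gamma : assumed recovery rate per day (hypothetical)
    Returns a list of (susceptible, infected, recovered) tuples per day.
    """
    s = float(population - initial_infected)
    i = float(initial_infected)
    r = 0.0
    history = [(s, i, r)]
    for _ in range(days):
        new_infections = beta * s * i / population
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        r += new_recoveries
        history.append((s, i, r))
    return history


def peak_infected(history):
    """Largest number of simultaneously infected people in a run."""
    return max(infected for _, infected, _ in history)


if __name__ == "__main__":
    # Same outbreak, two different assumptions about transmission.
    for beta in (0.25, 0.35):
        run = simulate_sir(1_000_000, 10, beta=beta, gamma=0.1, days=365)
        print(f"beta={beta}: peak infected = {peak_infected(run):,.0f}")
```

Running the two scenarios side by side shows that a modest change in one assumed parameter shifts the projected peak substantially, which is the kind of uncertainty the modellers describe.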

How epidemiologists rushed to model the coronavirus pandemic.

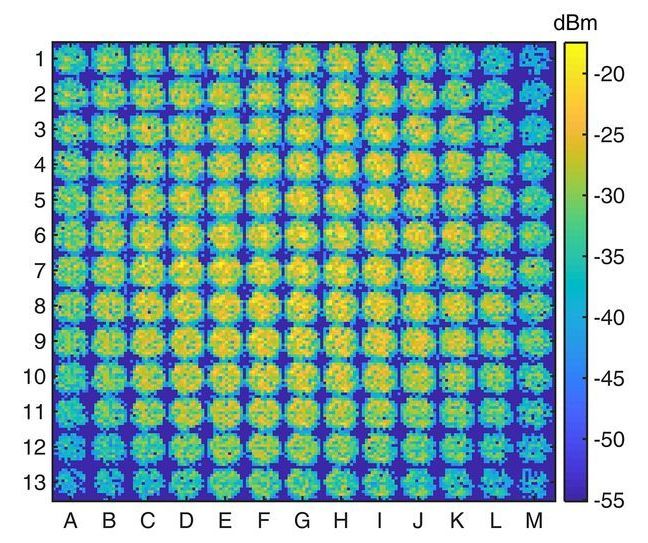

Using the same technology that allows high-frequency signals to travel on regular phone lines, researchers tested sending extremely high-frequency, 200 GHz signals through a pair of copper wires. The result is a link that can move data at rates of terabits per second, significantly faster than currently available channels.

The technology to disentangle multiple, parallel signals moving through a channel already exists, thanks to signal processing methods developed by John Cioffi, the inventor of digital subscriber lines (DSL). Questions remained, however, about how effectively these ideas could be implemented at higher frequencies.

To test the transmission of data at higher frequencies, authors of a paper published this week in Applied Physics Letters used experimental measurements and mathematical modeling to characterize the input and output signals in a waveguide.

Our body’s ability to detect disease, foreign material, and the location of food sources and toxins is all determined by a cocktail of chemicals that surround our cells, as well as our cells’ ability to ‘read’ these chemicals. Cells are highly sensitive. In fact, our immune system can be triggered by the presence of just one foreign molecule or ion. Yet researchers don’t know how cells achieve this level of sensitivity.

Now, scientists at the Biological Physics Theory Unit at Okinawa Institute of Science and Technology Graduate University (OIST) and collaborators at City University of New York have created a simple model that is providing some answers. They have used this model to determine which techniques a cell might employ to increase its sensitivity in different circumstances, shedding light on how the biochemical networks in our bodies operate.

“This model takes a complex biological system and abstracts it into a simple, understandable mathematical framework,” said Dr. Vudtiwat Ngampruetikorn, former postdoctoral researcher at OIST and the first author of the research paper, which was published in Nature Communications. “We can use it to tease apart how cells might choose to spend their energy budget, depending on the world around them and other cells they might be talking to.”

By bringing a quantitative toolkit to this biological question, the scientists found that they had a different perspective from the biologists. “The two disciplines are complementary to one another,” said Professor Greg Stephens, who runs the unit. “Biologists tend to focus on one area and delve deeply into the details, whereas physicists simplify and look for patterns across entire systems. It’s important that we work closely together to make sure that our quantitative models aren’t too abstract and include the important details.”

On their computers, the scientists created a model that represented a cell. The cell had two sensors (or information processing units), which responded to the environment outside the cell. Each sensor could either be bound to a molecule or ion from the outside, or unbound. When the number of molecules or ions in the chemical cocktail outside the cell changed, the sensors would respond and, depending on these changes, either bind to a new molecule or ion, or unbind. This allowed the cell to gain information about the outside world and thus allowed the scientists to measure what could affect its sensitivity.

“Once we had the model, we could test all sorts of questions,” said Dr. Ngampruetikorn. “For example, is the cell more sensitive if we allow it to consume more energy? Or if we allow the two sensors to cooperate? How do the cell’s prior experiences influence its sensitivity?”

The scientists looked at whether allowing the cell to consume energy and allowing the two sensors to interact helped the cell achieve a higher level of sensitivity. They also decided to vary two other components to see if this had an impact—the level of noise, which refers to the amount of uncertainty or unnecessary information in the chemical cocktail, and the signal prior, which refers to the cell’s acquired knowledge, gained from past experiences.
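The role of noise and of the signal prior can be illustrated with a toy calculation. The sketch below is not the authors' model: it assumes a binary signal (low or high concentration), two sensors that each report the signal independently and flip their reading with probability `noise`, and it uses mutual information as the measure of how much the sensors tell the cell. All of these choices, including the function name and parameters, are assumptions made for illustration, and energy consumption and sensor interactions are left out entirely.

```python
import math
from itertools import product


def mutual_information(prior_high, noise):
    """Mutual information I(S; X1, X2) in bits for a toy two-sensor cell.

    prior_high : assumed prior probability that the signal S is "high"
    noise      : probability that a sensor misreports the signal (0 to 0.5)
    """
    p_signal = {0: 1.0 - prior_high, 1: prior_high}

    def p_sensor(reading, signal):
        # A sensor matches the signal unless noise flips its reading.
        return 1.0 - noise if reading == signal else noise

    info = 0.0
    for x1, x2 in product((0, 1), repeat=2):
        # Marginal probability of this pair of sensor readings.
        p_x = sum(
            p_signal[s] * p_sensor(x1, s) * p_sensor(x2, s) for s in (0, 1)
        )
        for s in (0, 1):
            p_joint = p_signal[s] * p_sensor(x1, s) * p_sensor(x2, s)
            if p_joint > 0:
                info += p_joint * math.log2(p_joint / (p_signal[s] * p_x))
    return info


if __name__ == "__main__":
    for noise in (0.0, 0.1, 0.3, 0.5):
        bits = mutual_information(prior_high=0.5, noise=noise)
        print(f"noise={noise:.1f}: information = {bits:.3f} bits")
```

In this toy setting the information the sensors carry falls steadily as noise rises and vanishes when the readings are pure chance. Changing `prior_high` shows how the cell's expectation about the signal also bounds what it can learn.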

Previous research had found that energy consumption and sensor interactions were important for cell sensitivity, but this research found that this was not always true. In some situations, such as when the chemical cocktail had a low level of noise and the correlations between different chemicals were high, the scientists found that allowing the cell to consume energy and the sensors to interact did help it achieve a higher level of sensitivity. However, in other situations, such as when there was a higher level of noise, this was not the case.

“It’s like tuning into a radio,” explained Professor Stephens. “If there’s too much static (or noise), it doesn’t do any good to turn up the radio (or, in this case, amplify the signal with energy and interactions).”

Despite this, Dr. Ngampruetikorn explained, energy consumption and sensor interactions do remain important mechanisms in many situations. “It would be interesting to continue to use this model to determine exactly how energy consumption can influence a cell’s sensitivity,” he said. “And in what situations it’s most valuable.”

Although the scientists decided to look specifically at how cells respond to their surroundings, they stressed that the general framework of their model could be used to shed light on sensing strategies across the biological world. Professor Stephens explained that whilst there’s a huge amount of effort going into characterizing isolated, individual systems, there’s considerably less work looking for common principles. “If we can find these principles, then it could renew our understanding of how living systems function, from cell communication and the brain, to animal behavior and social interactions.”

Scientists create model to measure how cells sense their surroundings.

What is interaction, and when does it occur? Intuition suggests that the necessary condition for the interaction of independently created particles is their direct touch or contact through physical force carriers. In quantum mechanics, the result of the interaction is entanglement—the appearance of non-classical correlations in the system. It seems that quantum theory allows entanglement of independent particles without any contact. The fundamental identity of particles of the same kind is responsible for this phenomenon.

Quantum mechanics is currently the best and most accurate theory used by physicists to describe the world around us. Its characteristic feature, however, is an abstract mathematical language that notoriously leads to serious interpretational problems. The view of reality proposed by this theory is still a subject of scientific dispute that, over time, is only becoming hotter and more interesting. New research motivation and intriguing questions are brought forth by the fresh perspective of quantum information and by the enormous progress of experimental techniques. These allow verification of the conclusions drawn from subtle thought experiments directly related to the problem of interpretation. Moreover, researchers are now making enormous progress in the field of quantum communication and quantum computer technology, which draws significantly on non-classical resources offered by quantum mechanics.

Pawel Blasiak from the Institute of Nuclear Physics of the Polish Academy of Sciences in Krakow and Marcin Markiewicz from the University of Gdansk focus on analyzing widely accepted paradigms and theoretical concepts regarding the basics and interpretation of quantum mechanics. The researchers are trying to determine to what extent the intuitions used to describe quantum mechanical processes are justified in a realistic view of the world. For this purpose, they try to clarify specific theoretical ideas, which often exist only as vague intuitions, using the language of mathematics. This approach often results in the appearance of inspiring paradoxes. Of course, the more basic the concept to which a given paradox relates, the better, because it opens new doors to a deeper understanding of a given problem.