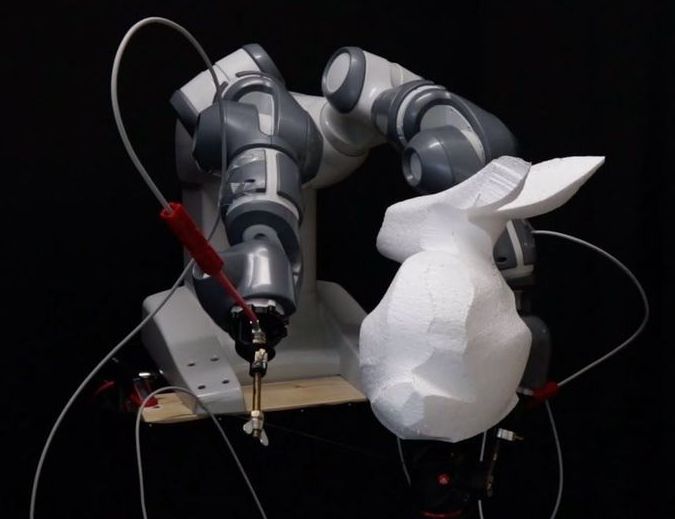

A high-power laser, an optimized optical pathway, patented adaptive resolution technology, and smart laser-scanning algorithms have enabled UpNano, a Vienna-based high-tech company, to achieve high-resolution 3D-printing of a kind never seen before.

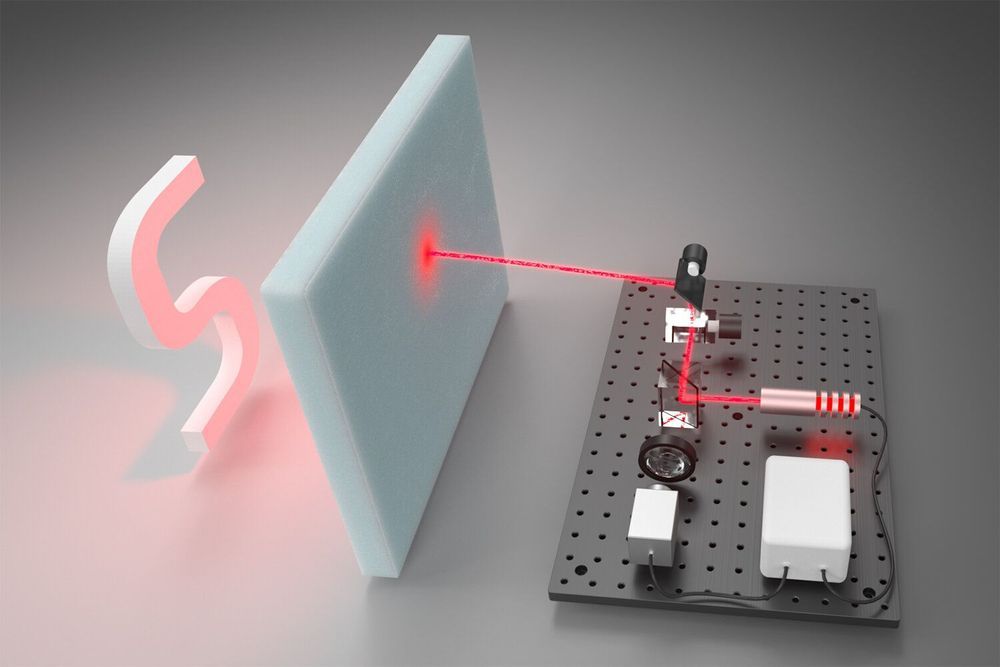

“Parts with nano- and microscale resolution can now be printed across 12 orders of magnitude, within times never achieved previously. This has been accomplished by UpNano, a spin-out of TU Wien, which developed a high-end two-photon polymerization (2PP) 3D-printing system that can produce polymeric parts with a volume ranging from 10⁰ to 10¹² cubic micrometers. At the same time, the printer allows for nano- and microscale resolution,” the company said in a statement.

Recently, the company demonstrated this capability by printing four models of the Eiffel Tower ranging from 200 micrometers to 4 centimeters, with perfect representation of all minuscule structures, within 30 to 540 minutes. With this, 2PP 3D-printing is ready for applications in R&D and industry that until now seemed impossible.