A new study has explored whether AI can provide more appealing answers to humanity’s most profound questions than history’s most influential thinkers.

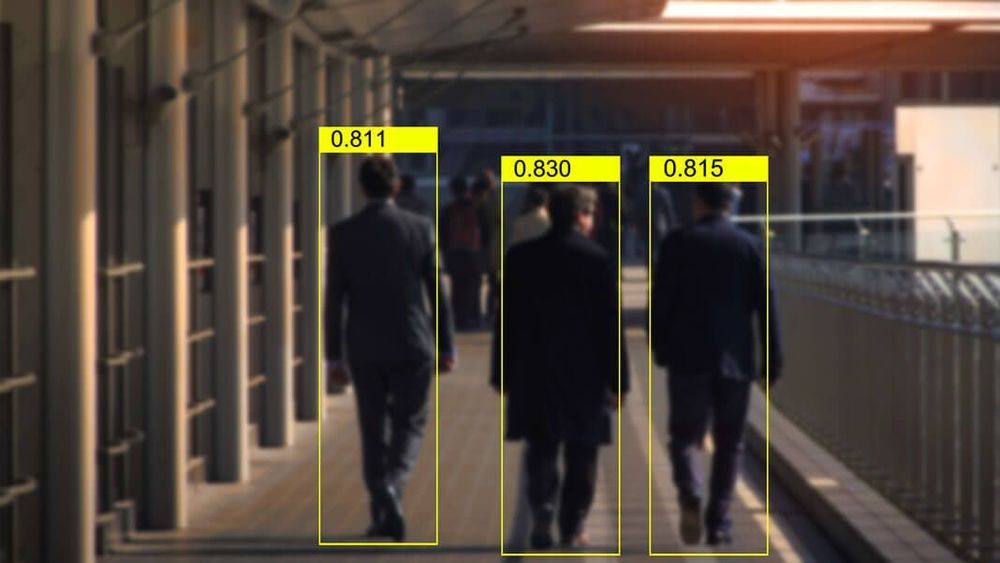

Researchers from the University of New South Wales first fed a series of moral questions to Salesforce’s CTRL system, a text generator trained on millions of documents and websites, including all of Wikipedia. They added its responses to a collection of reflections from the likes of Plato, Jesus Christ, and, err, Elon Musk.

The team then asked more than 1,000 people which musings they liked best, and whether they could identify the source of each quote.