The “Luda” AI chatbot sparked a necessary debate about AI ethics as South Korea places new emphasis on the technology.

In the words of the book’s author, Benjamin Aldes Wurgaft, Meat Planet: Artificial Flesh and the Future of Food (2019) is “not an attempt at prediction but rather a study of cultured meat as a special case of speculation on the future of food, and as a lens through which to view the predictions we make about how technology changes the world.” While not serving as some crystal ball to tell us the future of food, Wurgaft’s book certainly does serve as a kind of lens.

Our very appetites are questioned quite a bit in the book. Wondering about the ever-changing history of food, the author asks, “Will it be an effort to reproduce the industrial meat forms we know, albeit on a novel, and more ethical and sustainable, foundation?” Questioning why hamburgers are automatically the default goal, he points out cultured meat advocates should carefully consider “the question of which human appetite for meat, in historical terms, they wish to satisfy.”

Wurgaft’s question of “which human appetite” – past, present, or future – is an excellent one. If we use his book as a lens to observe other emerging technologies, the question extends well beyond our choices of food. It could even have direct implications for such endeavours as radical life extension. Will we, if we extend our lifetimes, be satisfactory to future people? We already know the kind of clash that persists between different generations, and the blame we often place on previous generations for current social ills, without there also being a group of people who simply refuse to die. We should be wary of basing our future on the present – of attempting to preserve present tastes as somehow immutable and deserving immortality. This may be a problem such futurists as Ray Kurzweil, author of The Singularity is Near (2005), need to respond to.

If we are to justify the singularity at which we or our appetites are immortalized, we should remember technology changes “morality’s horizon”, as Wurgaft observes. If, for example, a new technology arises that can entirely eliminate suffering, our choice to allow suffering is an immoral one. If further technologies then emerge that can eliminate not just suffering but death, it will become immoral on that day to permit someone’s natural death – at least to the extent it is like the crime of manslaughter. I argued in my own book that it will be immoral to withhold novel biotechnologies from impoverished countries, if we know such direct action will increase their economic independence or improve their health. Put simply, our inaction in a situation can become an immoral deed if we have the necessary tools to stop suffering.

Beyond the way they alter our moral structures and expectations, Wurgaft notes that much fear over emerging technologies stems from the belief “technology might introduce a new plasticity into our concept of what it is to be human.” This is already expected to be the case with potential transhuman technologies, which critics of transhumanism find greatly troubling. Fully respecting the sanctity of animal life may ultimately coincide with respecting the same for all sentient beings, such as artificial and posthuman beings. Alternatively, the plasticity being described may ultimately undermine all our rights, leaving sentient life open to a whole new range of abuses, which certainly is the outcome critics of transhumanism fear. The fear of human rights being only more easily degraded and devalued by technology, or the notion technology will broaden the scope of all things morally wrong, is frequently expressed in the British dystopian Netflix series Black Mirror.

The moral appetite of the advocates of cultured meat is clear. They seek increased animal protection primarily, followed by environmental protection, but much rarer are their appeals to food security and human health. Wurgaft points out there is no apparent compelling philosophical defence or apologetic for the eating of animals. Perhaps the aforementioned plasticity of our morals to align with our species’ technological abilities, however, means most of us will remain unable to develop an acceptance of the sanctity of animal life until it becomes more broadly convenient to do so.

A chapter of Meat Planet addresses promises, noting how hopeful expectations often reinforce each other. The author also discusses “hype”, noting that it is necessary to the success of any emerging technology and yet also contributes to the eventual (in Wurgaft’s view inevitable) disillusionment with it. Such lessons may seem dissatisfying to those of us who are more enthusiastic about the future, but they seem necessary. Those of us who write science fiction know it is still fiction, and at best can only inspire some small part of the real future.

Wurgaft acknowledges “physical technologies (in energy, in transport, in medicine, in manufacturing) have lagged behind our digital ones”. This is regrettably true. Far too much effort in the tech sectors goes into software and smarter approaches to old problems rather than achieving real breakthroughs or actually inventing something. This only adds to the disappointment many feel. Rather than entering a sci-fi world filled with new domains of advanced technology, we are striding into a world only filled with new gimmicky apps and ever more efficient ways of doing whatever we already did.

Staying on the issue of technological disappointment, many problems are especially frustrating because they are the result of our culture rather than hurdles in engineering itself. Wurgaft makes a good point that privately funded labs don’t share their research and are “at risk of reinventing the wheel”. If we are to imagine a solution, it may be that governments should purchase the research of failed biotech start-ups, then hand it out freely with a goal to reduce any duplicated work and accelerate research.

It is my own observation that states are often capable of a significant amount of heavy lifting on the way to new technologies where private companies were not willing to take risks. Companies focused on new experimental technologies often leave it to engineers to solve the problem of scaling – work that too often simply doesn’t get done, as was the case with a lab-tested fuel production method using bacteria. It is possible that a state could learn best when to step in and could compensate both for the poor communication between innovators and the lack of engineering expertise and funding necessary for scaling.

On the topic of cultured meat specifically, maybe the focus should not currently be on replacing the most desired forms of meat (e.g., burgers and steaks) with cultured meat but on replacing at least a substantial percentage of lower-quality meat products with cultured meat. This, of course, depends on governments adopting an agenda of phasing out industrial animal slaughter in much the same way carbon reduction targets were adopted.

A final consideration, for me, is that there may be alternative ways of achieving the same goals as cultured meat proponents. If genetic engineering could produce animals that efficiently yield greater quantities of meat, and of better quality, this may result in fewer individual animals suffering. Better yet, if synthetic biology is what it claims to be, it may eventually be possible to remake our favourite meats using the body of some wholly engineered or cognitively suppressed animal that does not experience suffering and exists its whole life as a steak.

To conclude, Wurgaft’s Meat Planet is quite nutritious food for thought. Beyond directly addressing and critically examining the hopes behind cultured meat, it raises a number of questions that should be asked of the advocates of other emerging technologies. The most important lesson is that we should not view new technology as morally neutral. It is almost certain to reconfigure our morality, whether for better or worse. I like to think technology only better supports us in making good moral choices in the long term, even if there are short-term instances of abuse, as can be seen by looking at the overall course of human history.

More from me: Catalyst: A Techno-Liberation Thesis

We tried to be comprehensive here, but are there any big arguments against life extension (ethical or otherwise) that you always hear that we missed?

Thinking through the ethics of life extension can be complicated. Here are the main pros and cons of immortality from an ethical standpoint.

At the same time, there was indeed more action. In one major victory, Amazon, Microsoft, and IBM banned or suspended their sale of face recognition to law enforcement, after the killing of George Floyd spurred global protests against police brutality. It was the culmination of two years of fighting by researchers and civil rights activists to demonstrate the ineffective and discriminatory effects of the companies’ technologies. Another change was small yet notable: for the first time ever, NeurIPS, one of the most prominent AI research conferences, required researchers to submit an ethics statement with their papers.

So here we are at the start of 2021, with more public and regulatory attention on AI’s influence than ever before. My New Year’s resolution: Let’s make it count. Here are five hopes that I have for AI in the coming year.

The French military is starting exploratory work on the development of bionic supersoldiers, which officials describe as a necessary part of keeping pace with the rest of the world.

A military ethics committee gave its blessing to begin developing supersoldiers on Tuesday, according to the BBC, balancing the moral implications of augmenting and altering humanity with the desire to innovate and enhance the military’s capabilities. With the go-ahead, France joins countries like the U.S., Russia, and China that are reportedly attempting to give their soldiers high-tech upgrades.

The French armed forces now have permission to develop “augmented soldiers” following a report from a military ethics committee.

The report, released to the public on Tuesday, considers medical treatments, prosthetics and implants that improve “physical, cognitive, perceptive and psychological capacities,” and could allow for location tracking or connectivity with weapons systems and other soldiers.

I have the honor of being a guest on the USTP Enlightenment Salon today. Many thanks to Gennady and David for the invitation.

I was a Linux sys/net admin.

I was never interested in politics until it became IMPOSSIBLE to avoid. Every action or inaction is now a political statement in some people’s minds. That’s a terrible state of affairs that has been imposed on us. So I put my hacker hat on and went to work to discover why there exists an abject division on truth and morals and how politics became the catalyst for the phenomenon.

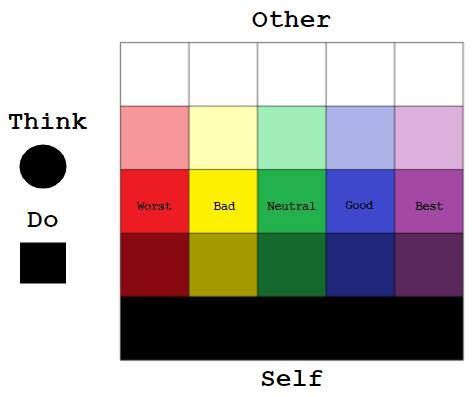

I’ll be discussing the roots of my theory: Physix, a mathematical model for thought and behavior. The political derivative is the Q-vote. It’s a novel approach to democracy.

Nell Watson (https://www.nellwatson.com/) will be using a derivative of Physix for machine learning and ethics on https://www.ethicsnet.org/, but I think the most interesting quality of Physix is its commercial value. It codifies the decision process: Q-Logic.

Every action or thought can be assigned one of 525 unique patterns on this 5×5 grid. 13,125 if you add voice. Economics, psychology, philosophy, religion, politics and every conceivable imaginary or spacetime event fits. Psychohistory. The matrix has been hacked.

Physix gives AI a finite vocabulary to analyze the infinite chaos of life and imagination. The patterns can be compared to both physical and psychological results to solve for the most preferred outcome.

It’s odd to me that YouTube HASN’T thought of color ratings to highlight videos. Zoom could integrate it with its platform to rate conversations and meetings. It could be used with any human interaction to rate the quality of communication.

The key to real-world solutions is that I present this as Open Source Human Nature. Free. Where there is commercial value derived, 10% of the profit/efficiency gained will go to a fund where the money will be spent 100% publicly and tracked (using the same polling system) over time to find the most efficient way to make people happy. I’m looking forward to working with the USTP on the political side. On the AI side, Nell Watson is just dipping her toe in the water, and I’m looking for a capable AI group to integrate the idea. From what I can tell, David Kelley has done all the deep research; my idea is just a different tool to bring it together. I have a programmer and an investor waiting to hear from someone in the field who is interested in tackling this.

From all the interactions I’ve had, the USTP is the most progressive, rationally minded group I’ve found. I believe the people involved with this Party would be the best to understand the implications and help me navigate the shark-infested waters of politics, NGOs, Big Tech and Academia.

It’s a new world. AI has a new tool to analyze us and become an ally, and this renders the current political paradigm an ancient, sclerotic remnant of brute-force mass persuasion for power and money.

It’s time for a paradigm shift of consciousness, aided by AI. The USTP is uniquely suited to bring this to the political forefront.

USTP: Let’s go.

This year, Nature asked 480 researchers around the world who work in facial recognition, computer vision and artificial intelligence (AI) for their views on thorny ethical questions about facial-recognition research. The results of this first-of-a-kind survey suggest that some scientists are concerned about the ethics of work in this field — but others still don’t see academic studies as problematic.

Journals and researchers are under fire for controversial studies using this technology. And a Nature survey reveals that many researchers in this field think there is a problem.

Newfound Autonomy

There are ways that a robot companion could outperform humans, Jecker says, by providing sympathetic and patient support free of judgment and condescension around the clock.

“It relates to issues of dignity,” Jecker told the Times. “The ability to be sexual at any age relates to your ability to have a life. Not just to survive, but to have a life, and do things that have value. Relationships. Bodily integrity. These things are a matter of dignity.”

It has been really fun talking to the kids about AI. Should we help AI consciousness to emerge, or should we try to prevent it? Can you design the kindest AI? Can we use AI as a universal emotion translator? How would we search for an AI civilization? There are many, many other questions that you can discuss with kids.

Ultimately, early introduction of AI is not limited to formal instruction. Just contemplating future scenarios of AI evolution provides plentiful material for engaging students with the subject. A survey on the future of AI, administered by the Future of Life Institute, is a great starting point for such discussions. Social studies classes, as well as school debate and philosophy clubs, could also launch a dialogue on AI ethics – an AI nurse selecting a medicine, an AI judge deciding on a criminal case, or an AI driverless car switching lanes to avoid collision.

Demystifying AI for our children in all its complexity while providing them with an early insight into its promises and perils will make them confident in their ability to understand and control this incredible technology, as it is bound to develop rapidly within their lifetimes.