Services to include spotting racial bias, developing guidelines around AI projects.

With moral purity inserted as a component to the internal processes for all academic publications, it will henceforth become impossible to pursue the vital schema of conjecture and refutation.

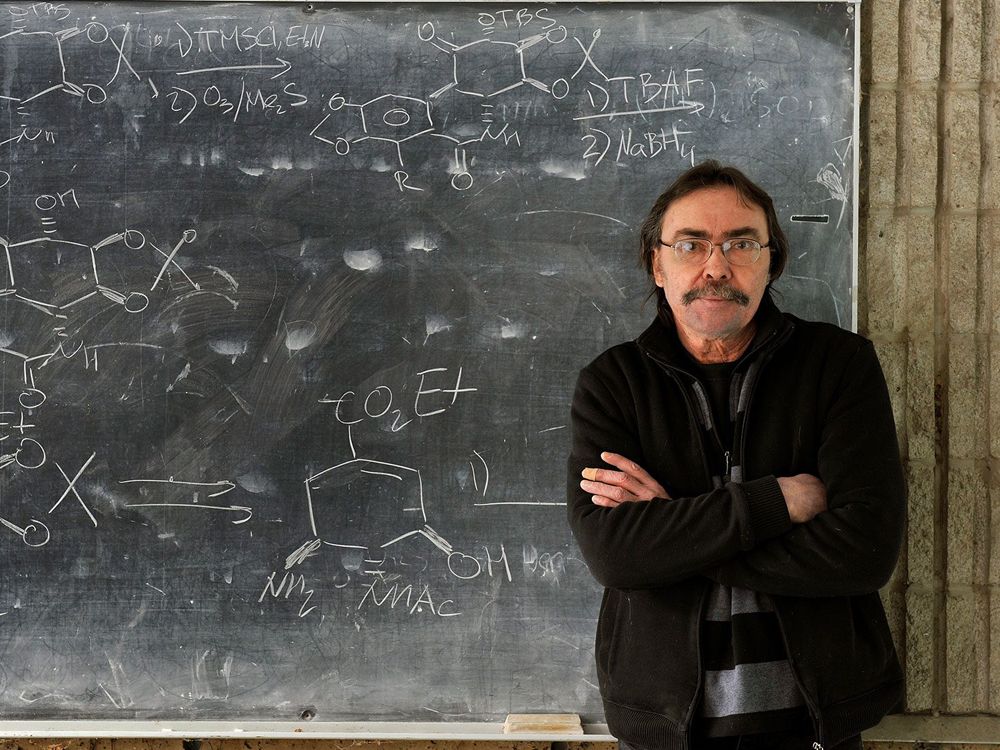

Shocked that one of their own could express a heterodox opinion on the value of de rigueur equity, diversity and inclusion policies, chemistry professors around the world immediately demanded the paper be retracted. Mob justice was swift. In an open letter to “our community” days after publication, the publisher of Angewandte Chemie announced it had suspended the two senior editors who handled the article, and permanently removed from its list of experts the two peer reviewers involved. The article was also expunged from its website. The publisher then pledged to assemble a “diverse group of external advisers” to thoroughly root out “the potential for discrimination and foster diversity at all levels” of the journal.

Not to be outdone, Brock’s provost also disowned Hudlicky in a press statement, calling his views “utterly at odds with the values” of the university; the school then drew attention to its own efforts to purge unconscious bias from its ranks and to further the goals of “accessibility, reconciliation and decolonization.” (None of which have anything to do with synthetic organic chemistry, by the way.) Brock’s knee-jerk criticism of Hudlicky is now also under review, following a formal complaint by another professor that the provost’s statement violates the school’s commitment to freedom of expression.

Hudlicky — who told Retraction Watch “the witch hunt is on” — clearly had the misfortune to make a few cranky comments at a time when putting heads on pikes is all the rage. But what of the implications his situation entails for the entirety of the peer-review process? Given the scorched earth treatment handed out to the editors and peer reviewers involved at Angewandte Chemie, the new marching orders for academic journals seem perfectly clear — peer reviewers are now expected to vet articles not just for coherence and relevance to the scientific field in question, but also for alignment with whatever political views may currently hold sway with the community-at-large. If a publication-worthy paper comes across your desk that questions or undermines orthodox public opinion in any way — even in a footnote — and you approve it, your job may be forfeit. Conform or disappear.

Since OpenAI first described its new AI language-generating system called GPT-3 in May, hundreds of media outlets (including MIT Technology Review) have written about the system and its capabilities. Twitter has been abuzz about its power and potential. The New York Times published an op-ed about it. Later this year, OpenAI will begin charging companies for access to GPT-3, hoping that its system can soon power a wide variety of AI products and services.

Earlier this year, the independent research organisation of which I am the Director, London-based Ada Lovelace Institute, hosted a panel at the world’s largest AI conference, CogX, called The Ethics Panel to End All Ethics Panels. The title referenced both a tongue-in-cheek effort at self-promotion, and a very real need to put to bed the seemingly endless offering of panels, think-pieces, and government reports preoccupied with ruminating on the abstract ethical questions posed by AI and new data-driven technologies. We had grown impatient with conceptual debates and high-level principles.

And we were not alone. 2020 has seen the emergence of a new wave of ethical AI – one focused on the tough questions of power, equity, and justice that underpin emerging technologies, and directed at bringing about actionable change. It supersedes the two waves that came before it: the first wave, defined by principles and dominated by philosophers, and the second wave, led by computer scientists and geared towards technical fixes. Third-wave ethical AI has seen a Dutch court shut down an algorithmic fraud detection system, students in the UK take to the streets to protest against algorithmically decided exam results, and US companies voluntarily restrict their sales of facial recognition technology. It is taking us beyond the principled and the technical, to practical mechanisms for rectifying power imbalances and achieving individual and societal justice.

Between 2016 and 2019, 74 sets of ethical principles or guidelines for AI were published. This was the first wave of ethical AI, in which we had just begun to understand the potential risks and threats of rapidly advancing machine learning and AI capabilities and were casting around for ways to contain them. In 2016, AlphaGo had just beaten Lee Sedol, prompting serious consideration of the likelihood that general AI was within reach. And algorithmically curated chaos on the world’s duopolistic platforms, Google and Facebook, had surrounded the two major political earthquakes of the year – Brexit, and Trump’s election.

We are witnessing the birth of a new faith. It is not a theistic religion. Indeed, unlike Christianity, Judaism, and Islam, it replaces a personal relationship with a transcendent God in the context of a body of believers with a fervent and radically individualistic embrace of naked materialistic personal recreation.

Moreover, in contrast to the orthodox Christian, Judaic, and Islamic certainty that human beings are made up of both material body and immaterial soul – and that both matter – adherents of the new faith understand that we have a body, but what really counts is mind, which is ultimately reducible to mere chemical and electrical exchanges. Indeed, contrary to Christianity’s view of an existing Heaven or, say, Buddhism’s conception of the world as illusion, the new faith insists that the physical is all that has been, is, or ever will be.

Such thinking leads to nihilism. That’s where the new religion leaves past materialistic philosophies behind, by offering adherents hope. Where traditional theism promises personal salvation, the new faith offers the prospect of rescue via radical life-extension attained by technological applications – a postmodern twist, if you will, on faith’s promise of eternal life. This new religion is known as “transhumanism,” and it is all the rage among the Silicon Valley nouveau riche, university philosophers, and among bioethicists and futurists seeking the comforts and benefits of faith without the concomitant responsibilities of following dogma, asking for forgiveness, or atoning for sin – a foreign concept to transhumanists. Truly, transhumanism is a religion for our postmodern times.

Quantifications are produced by several disciplinary houses in a myriad of different styles. The concerns about unethical use of algorithms, unintended consequences of metrics, as well as the warning about statistical and mathematical malpractices are all part of a general malaise, symptoms of our tight addiction to quantification. What problems are shared by all these instances of quantification? After reviewing existing concerns about different domains, the present perspective article illustrates the need and the urgency for an encompassing ethics of quantification. The difficulties of disciplining the existing regime of numerification are addressed; obstacles and lock-ins are identified. Finally, indications for policies for different actors are suggested.

We can’t evolve faster than our language does. Evolution is a linguistic, code-theoretic process. Do yourself a humongous favor and look over these 33 transhumanist neologisms. Here’s a fairly comprehensive glossary of thirty-three newly introduced concepts and terms from “The Syntellect Hypothesis: Five Paradigms of the Mind’s Evolution” by futurist, philosopher and evolutionary cyberneticist Alex M. Vikoulov. In parts written as an academic paper, in parts as a belletristic masterpiece, this recent book is an exceptionally easy read for an intellectual reader — a philosophical treatise that is fine-tuned with apt neologisms readily explained by given definitions and contextually… https://medium.com/@alexvikoulov/33-crucial-terms-every-futurist-transhumanist-and-philosopher-should-know-going-forward-2ba1c8b993c8

#evolution #consciousness #futurism #transhumanism #philosophy

“A powerful work! As a transhumanist, I especially loved one of the main ideas of the book that the Syntellect Emergence, merging of us into one Global Mind, constitutes the quintessence of the coming Technological Singularity. The novel conceptual visions of mind-uploading and achieving digital immortality are equally fascinating. The Chrysalis Conjecture as a solution to the Fermi Paradox is mind-bending. I would highly recommend The Syntellect Hypothesis to anyone with transhumanist aspirations and exponential thinking!” -Zoltan Istvan, futurist, author, founder of the U.S. Transhumanist Party

Terms such as ‘Artificial Intelligence’ or ‘Neurotechnology’ were new not so long ago. We can’t evolve faster than our language does. Evolution is a linguistic, code-theoretic process. Do yourself a humongous favor and look over these 33 transhumanist neologisms. Here’s a fairly comprehensive glossary of thirty-three newly introduced concepts and terms from “The Syntellect Hypothesis: Five Paradigms of the Mind’s Evolution” by futurist, philosopher and evolutionary cyberneticist Alex M. Vikoulov. In parts written as an academic paper, in parts as a belletristic masterpiece, this recent book is an exceptionally easy read for an intellectual reader — a philosophical treatise that is fine-tuned with apt neologisms readily explained by given definitions and contextually:

AGI Naturalization Protocol, AGI(NP) — initiating AGI (Artificial General Intelligence) via human life simulation training program, infusing AGI with a value system, ethics, morality and generally civilized manners to ensure functioning in the best interests of society as a self-aware agent. Read more: http://www.ecstadelic.net/top-stories/how-to-create-friendly-ai-and-survive-the-coming-intelligence-explosion #AGINaturalizationProtocol #AGINP

On March 11, 2011, a 9.1-magnitude earthquake triggered a powerful tsunami, generating waves higher than 125 feet that ravaged the coast of Japan, particularly the Tohoku region of Honshu, the largest and most populous island in the country.

Nearly 16,000 people were killed, hundreds of thousands displaced, and millions left without electricity and water. Railways and roads were destroyed, and 383,000 buildings damaged—including a nuclear power plant that suffered a meltdown of three reactors, prompting widespread evacuations.

In lessons for today’s businesses deeply hit by pandemic and seismic culture shifts, it’s important to recognize that many of the Japanese companies in the Tohoku region continue to operate today, despite facing serious financial setbacks from the disaster. How did these businesses manage not only to survive, but thrive?

One reason, says Harvard Business School professor Hirotaka Takeuchi, was their dedication to responding to the needs of employees and the community first, all with the moral purpose of serving the common good. Less important for these companies, he says, was pursuing layoffs and other cost-cutting measures in the face of a crippled economy.

As demonstrated after the 2011 earthquake and tsunami, Japanese businesses have a unique capability for long-term survival. Hirotaka Takeuchi explains their strategy of investing in community over profits during turbulent times.

Nearly 200 covid-19 vaccines are in development and some three dozen are at various stages of human testing. But in what appears to be the first “citizen science” vaccine initiative, Estep and at least 20 other researchers, technologists, or science enthusiasts, many connected to Harvard University and MIT, have volunteered as lab rats for a do-it-yourself inoculation against the coronavirus. They say it’s their only chance to become immune without waiting a year or more for a vaccine to be formally approved.

Preston Estep was alone in a borrowed laboratory, somewhere in Boston. No big company, no board meetings, no billion-dollar payout from Operation Warp Speed, the US government’s covid-19 vaccine funding program. No animal data. No ethics approval.

What he did have: ingredients for a vaccine. And one willing volunteer.

Estep swirled together the mixture and spritzed it up his nose.

The U.S. intelligence community (IC) on Thursday rolled out an “ethics guide” and framework for how intelligence agencies can responsibly develop and use artificial intelligence (AI) technologies.

Among the key ethical requirements were shoring up security, respecting human dignity through complying with existing civil rights and privacy laws, rooting out bias to ensure AI use is “objective and equitable,” and ensuring human judgement is incorporated into AI development and use.

The IC wrote in the framework, which digs into the details of the ethics guide, that it was intended to ensure that use of AI technologies matches “the Intelligence Community’s unique mission purposes, authorities, and responsibilities for collecting and using data and AI outputs.”