Yes, this reports roughly a 3-year epigenetic clock reversal in just 8 weeks, attributed to diet and lifestyle changes. There is a list of supplements too:

Alpha-ketoglutarate, vitamin C, vitamin A, curcumin, epigallocatechin gallate (EGCG), rosmarinic acid, quercetin, and luteolin.

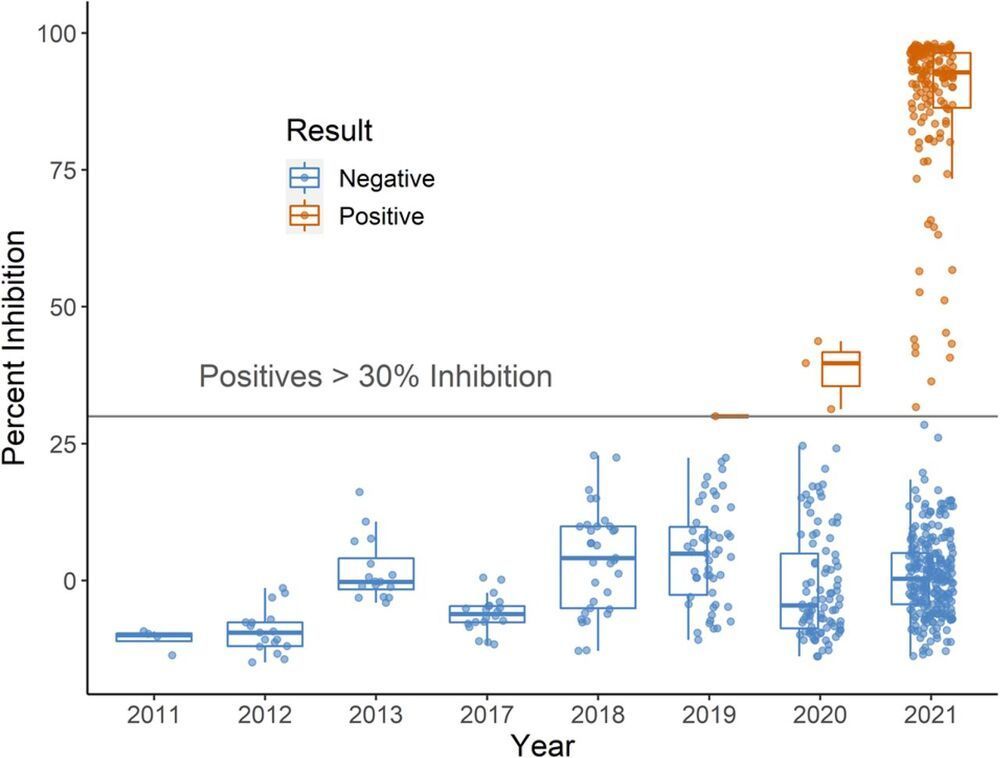

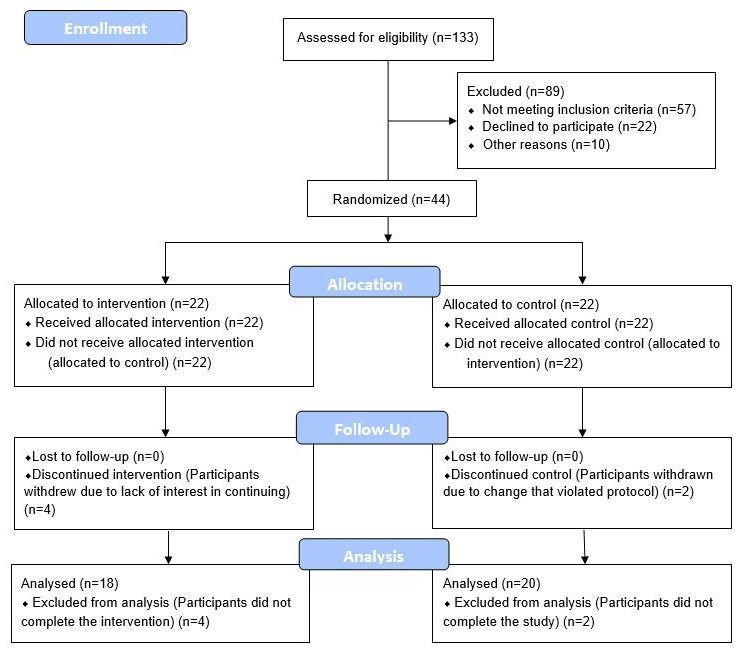

Manipulations to slow biological aging and extend healthspan are of interest given the societal and healthcare costs of our aging population. Herein we report on a randomized controlled clinical trial conducted among 43 healthy adult males between the ages of 50–72. The 8-week treatment program included diet, sleep, exercise and relaxation guidance, and supplemental probiotics and phytonutrients. The control group received no intervention. Genome-wide DNA methylation analysis was conducted on saliva samples using the Illumina Methylation Epic Array and DNAmAge was calculated using the online Horvath DNAmAge clock (2013). The diet and lifestyle treatment was associated with a 3.23 years decrease in DNAmAge compared with controls (p=0.018). DNAmAge of those in the treatment group decreased by an average 1.96 years by the end of the program compared to the same individuals at the beginning with a strong trend towards significance (p=0.066). Changes in blood biomarkers were significant for mean serum 5-methyltetrahydrofolate (+15%, p=0.004) and mean triglycerides (−25%, p=0.009). To our knowledge, this is the first randomized controlled study to suggest that specific diet and lifestyle interventions may reverse Horvath DNAmAge (2013) epigenetic aging in healthy adult males. Larger-scale and longer duration clinical trials are needed to confirm these findings, as well as investigation in other human populations.
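The abstract quotes two different figures: 3.23 years (treatment versus control) and 1.96 years (treatment group, end versus start). A quick way to see how they relate is the small arithmetic sketch below. It assumes the between-group figure is a simple difference of mean changes, which the abstract does not spell out, and the variable names are illustrative only. Under that assumption, the control group's DNAmAge would have risen by about 1.27 years over the same period.

```python
# Figures quoted in the abstract, in years of DNAmAge (negative = younger).
between_group_difference = -3.23  # treatment change relative to control change
treatment_change = -1.96          # treatment group, end of program vs. start

# Assumption (not stated in the abstract): the between-group figure is
#   treatment_change - control_change
# so the control change can be backed out by rearranging.
implied_control_change = treatment_change - between_group_difference

print(f"Implied control-group change: {implied_control_change:+.2f} years")
```

This is only a consistency check on the reported numbers, not a reanalysis of the trial data.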

Keywords: DNA methylation, epigenetic, aging, lifestyle, biological clock.

Advanced age is the largest risk factor for impaired mental and physical function and many non-communicable diseases including cancer, neurodegeneration, type 2 diabetes, and cardiovascular disease [1, 2]. The growing health-related economic and social challenges of our rapidly aging population are well recognized and affect individuals, their families, health systems and economies. Considering economics alone, delaying aging by 2.2 years (with associated extension of healthspan) could save $7 trillion over fifty years [3]. This broad approach was identified to be a much better investment than disease-specific spending. Thus, if interventions can be identified that extend healthspan even modestly, benefits for public health and healthcare economics will be substantial.

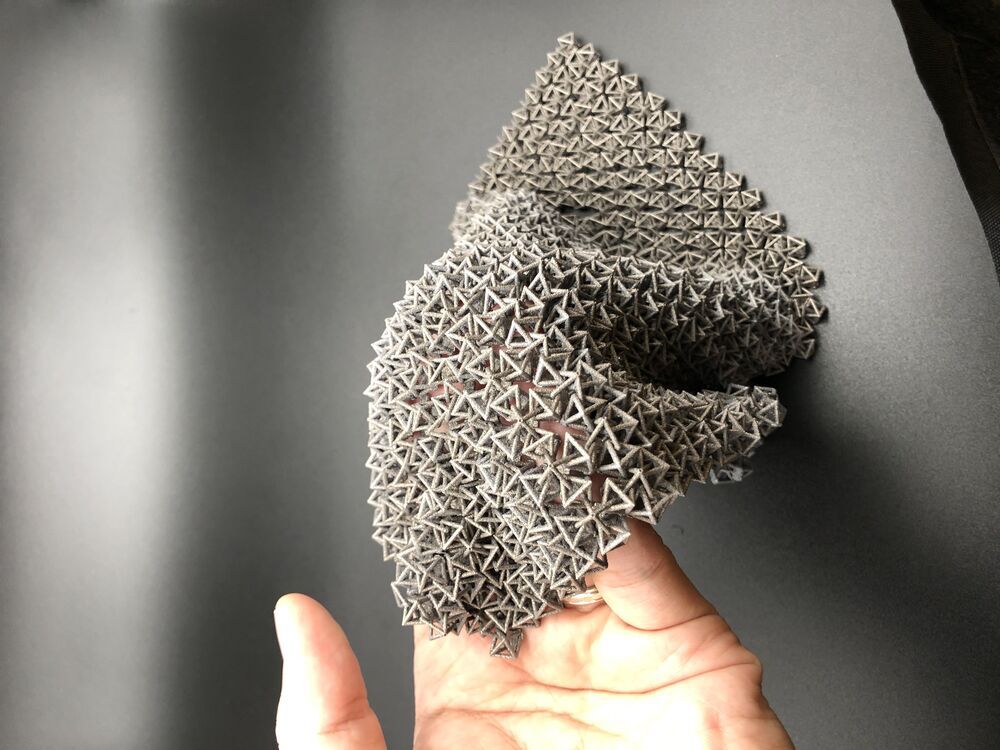

Lifelike & Realistic Pets on Amazon: https://amzn.to/3uegCXk

Robot Dog Kit on Amazon: https://amzn.to/3jirOw9

Robot Dogs that are just like Real Dogs: https://youtu.be/pifs1DE-Ys4

Subscribe: https://bit.ly/3eLtWLS

Instagram: https://www.instagram.com/Robotix_with_Sina.

My Amazon Pick: https://www.amazon.com/shop/iloverobotics.