Amber Alert for Human Freedom

By Michael Lee

We’re witnessing increased violations of the air space of sovereign nations by drones and of the privacy of individuals by a variety of surveillance and monitoring technologies. And, as Al Gore pointed out in his latest book, The Future, the quality of democracy has been degraded in our times, largely as a result of big-money lobby groups influencing public policy to the exclusion of “citizen power”. Ironically, deep below the surface of the high-tech, liberating mobile-digital world evolving in front of our bedazzled eyes, our Western concept of freedom is undergoing its sternest test since the end of the Cold War.

For behind the glittering success of the communications revolution powered by the internet and mobile telephony, a battle is being waged for global control of the means of information. In the eyes of owners of the digital means of information, whether governments or corporations, the privacy of the individual has been subordinated to the value and leverage of digital content.

Regarding the threat to our privacy represented by these developments, on a scale from low (green) to severe (red)[1], I’d suggest we’ve already reached an amber (orange) alert, the second highest level. Let’s assess intrusions into air space and into our privacy.

- Edward Snowden has made disclosures about a state surveillance program called PRISM. It’s a clandestine national security electronic surveillance program which has been operated by the National Security Agency (NSA) since 2007.[2] It appears to empower the government to take customer information from telecommunications companies like Verizon and internet giants like Google and Facebook, enabling the monitoring of phone calls and emails of private citizens. Some senior EU officials say they’re shocked by these reports of state spying on private persons as well as by the bugging of EU offices in Brussels and Washington DC. The PRISM program is clearly intrusive. And it’s endorsed by a democratically elected liberal president.

- Drones are being used extensively on both domestic and foreign soil under Obama’s presidency, whether reconnaissance drones or ones armed with missiles and bombs. They’re operated by the US Air Force and the CIA. FBI Director Robert Mueller has recently admitted in public that drones have been used domestically for surveillance of some American citizens.[3] (My jaw literally dropped when I saw him matter-of-factually confirm this inappropriate Big Brother-style deployment of military technology, however “targeted”.)

- Google Street View and satellite photography peep inside the perimeters of people’s homes to expose them to unsolicited public and governmental viewing. Without permission, Street View cameras take photos from an elevated position, overlooking hedges and walls specifically erected to preclude public viewing of some areas of private homes. It seems homes and back gardens are now under the spotlight of Google and the government as they attempt to digitally map out our lives as comprehensively as they can. This is virtual trespassing into the world’s residential areas.[4] It’s an Orwellian practice. To read Google’s viewpoint see http://www.google.com/help/maps/streetview/privacy.html[5]

- Physical movements of citizens in cities and towns are under increasing surveillance by a growing number of CCTV cameras as well as GPS devices in mobile phones.

- Financial transactions are all tracked by card associations, networks and financial institutions. In addition, card schemes are aggressively attempting to inaugurate the cashless society in order to obliterate the anonymity and privacy afforded by cash payments, while card and bank details on customer databases are all too frequently hacked and stolen by fraudsters and identity thieves.

- Digital profiles of individuals are routinely compiled by both corporations and governments for marketing and monitoring purposes.

- Social media platforms broadcast personal (and sometimes intimate) photos and comments worldwide, exposing the material to unsolicited viewing by undesirable persons such as sexual predators and online bullies. This is an unintended consequence, since the material was voluntarily submitted by the social media users themselves.

In addition to these forms of surveillance of the individual and populations, there are also the ingrained practices of thought control and groupthink often operational in academia and in the media. As an example, political correctness has created an atmosphere of reverse intolerance, whereby primarily conservative thinkers, and billions of religious persons, who may believe in traditional values frowned upon by the advocates of correctness, are subjected to the very name-calling and insults I assumed political correctness would be keen to eradicate if it aspired at all to be even-handed.[6] True scientific thinking, by contrast, creates an ideology-neutral atmosphere for healthy, open-minded intellectual discussion and efficient production of knowledge.

In sum, the following aspects of an individual’s life are being tracked, mapped and monitored: his/her private home, physical whereabouts, transactions, data, personal communications, thoughts and values. Taken together, all these kinds of intrusion into private lives of individuals and populations add up to total surveillance. That equates to a subtle, but comprehensive, assault on privacy.

Figure 1 shows technologies monitoring, mapping and policing our physical world: drones used for assassinations and for surveillance, Google videos of residential areas, satellite photos of our homes and gardens and CCTV cameras recording our movements in cities and on urban premises. Digital profiles of our homes and lives are assembled, which can be used for both marketing and surveillance, usually without our consent. On top of that, as already mentioned, there’s increased thought control in education and in the media under the regime of correctness.

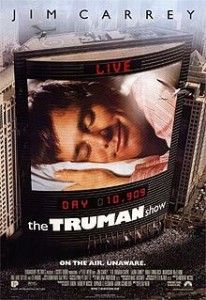

What does this brave new world of total surveillance of the individual mean for human freedom? Are we all destined to become unwitting Trumans in a reality show written, directed and produced by powerful figures in corporations and governments?

Figure 2: Poster for the Truman Show

In the global struggle to control the means of information, we’re being offered a deal, a Faustian pact for the digital age. We’re being asked to exchange our claim to privacy for a kind of remotely monitored freedom and security. While there are undoubtedly some public benefits arising from this increased surveillance, such as fraud prevention in financial services and provision of CCTV evidence for crimes and misdemeanours, even a cursory cost-benefit analysis shows that we’re already paying too high a price for increased security when it is measured against the diminishment of our freedom.

As long as we hand over our basic privacy to our digital masters, and don’t make a stink like Edward Snowden, we’ll be left in peace. Until we start to think and believe differently from the mainstream, that is. Then individuals can be targeted with soft power like spying software and phone taps, with medium power like vilification in the media or, in exceptional cases, with hard power from a military drone which can blow human targets up in their vehicles in any public place on earth with pinpoint accuracy.

So we can be free as long as Google Street View cars and satellite cameras can film our back yards and driveways, as long as drones can violate the air space above us, as long as our emails and phone conversations can be tapped, as long as we conform to political correctness.

In essence, the freedom on offer for our digital age is being stripped of human privacy and of its distinctly human character of independence. But freedom without privacy and independence lacks real substance. Freedom to think and to believe differently is being sucked out of public life. Freedom is becoming an empty shell.

I don’t believe human populations are going to continue to buy this false offer of compromised freedom or to put up forever with surveillance “creep” by the owners of the global means of information. This kind of conformist, highly monitored, impersonal freedom sucks.

As today’s digital masters continue their conquest of the physical world, trying to map it out, digitize it and control it, we notice there’s no underlying social contract between the current powers-that-be and the populations of nations. That’s because the digital world is largely unregulated and borderless, whereas social contracts (such as democracy), which govern politics and society in the real world, go back to a previous era dominated by largely democratic nation-states.

But there’s no social contract for the Information Age. There’s no social contract for the Internet. Nothing has been negotiated between population groups and the digital powers of corporations and governments. This represents a dangerous global power vacuum. No wonder our human freedom is dissipating. And all the while, technology is accelerating faster than both knowledge accumulation and the evolution of governance.

It’s the creeping totality of surveillance and the combined use of intrusive technologies which is menacing, not Google Street View on its own, or drone attacks, or the tracking of financial transactions, or the use of CCTV on street corners. Absent a social contract for the digital age, these technologies and practices are turning us into the unfree subjects of a new commercially driven world we can call Globurbia.

Privacy is dying all around us. Independence of thought is shrinking. Democracy is being diluted and undermined by abuses of virtually unbridled power. Information and data are being aggregated and bureaucratized. Nothing is private, nothing is sacred. We’re in thrall to the owners of the means of information, the new masters of society. It’s not so much a police state as a soulless vacuum.

If we’re not vigilant, we’ll all end up, within this generation, living in a conformist, militarized, paranoid, drone-policed society of powerless, remotely monitored, robotic individuals, spiritually drugged by consumerism and mentally bullied by correctness.

Conclusions

We urgently need a new international political protocol for the digital age which promotes the protection of privacy, freedom and independent thinking. We need to embrace an ideology-neutral scientific ethos for solving common human and social problems.

The next steps for halting surveillance creep in order to protect human freedom would be for Google to permanently ban Street View cars from all residential areas, for the current imperialistic drone policy[7] to be completely overhauled, for a new Digital Age political protocol to be developed and for global scientific progress, guaranteeing freedom and independence of thought, to be embraced.

Michael Lee’s book Knowing our Future – the startling case for futurology is available at the publisher http://www.infideas.com/pages/store/products/ec_view.asp?PID=1804 or on Amazon.com.

Acknowledgements & websites

Bergen, P & Braun, M. September 19, 2012. Drone is Obama’s weapon of choice.

http://www.cnn.com/2012/09/05/opinion/bergen-obama-drone

Center for Civilians in Conflict. http://civiliansinconflict.org

Clarke, R. (Original of 15 August 1997, latest revs. 16 September 1999, 8 December 2005, 7 August 2006). Introduction to Dataveillance and Information Privacy, and Definitions of Terms. http://www.rogerclarke.com/DV/Intro.html

Gallagher, R. Separating fact from fiction in NSA surveillance scandal. http://www.dallasnews.com/opinion/sunday-commentary/20130628-ryan-gallagher-separating-fact-from-fiction-in-nsa-surveillance-scandal.ece

Gore, A. 2013. The Future. New York: Random House Publishing Group.

http://www.algore.com

Surveillance Studies Network (SSN). 2006. A Report on the Surveillance Society: Summary Report

http://library.queensu.ca/ojs/index.php/surveillance-and-society/index

Surveillance & Society — http://www.surveillance-studies.net/

Wikipedia — http://en.wikipedia.org/wiki/Drone_attacks_in_Pakistan

[1] Homeland Security employed a five-color code for rating terror threats, reflecting the probability of a terrorist attack and its potential gravity: Red: severe risk, Orange: high risk, Yellow: significant risk, Blue: general risk, Green: low risk.

[2] http://en.wikipedia.org/wiki/PRISM_(surveillance_program) PRISM is a government code name for a data collection effort known officially by the SIGAD US-984XN. The program is operated under the supervision of the United States Foreign Intelligence Surveillance Court pursuant to the Foreign Intelligence Surveillance Act (FISA). Its existence was leaked by NSA contractor Edward Snowden, who claimed the extent of mass data collection was far greater than the public knew, and included “dangerous” and “criminal” activities in law.

[3] (http://www.washingtontimes.com/news/2013/jun/21/surveillance-scandals-swirl-obama-sit-down-privacy/#ixzz2XlO7m4H8)

[5] Google’s Privacy statement regarding Street View reads: “Your privacy and security are important to us. The Street View team takes a number of steps to help protect the privacy and anonymity of individuals when images are collected for Street View, including blurring faces and license plates. You can easily contact the Street View team if you see an image that should be protected or if you see a concerning image.” My view is that we own our homes and none of us have given personal permission for our premises to be photographed and videotaped to be watched by a global audience. Not that Google asked us anyway.

[6] Political correctness is defined as “the avoidance of forms of expression or action that are perceived to exclude, marginalize, or insult groups of people who are socially disadvantaged or discriminated against.” (The New Oxford Dictionary of English, p. 1435.) Paradoxically, though, this doctrine has itself become discriminatory, promoting stereotyping of some social groups which are not perceived as socially disadvantaged. Consequently, political correctness has loaded the dice. The oppressive atmosphere of linguistic bias created by political correctness has hardened into a form of thought control which has entirely lost its sense of proportion and blunted the academic search for truth, independent thought and healthy discourse. Prejudice in reverse is prejudice nonetheless.

[7] “Covert drone strikes are one of President Obama’s key national security policies. He has already authorized 283 strikes in Pakistan, six times more than the number during President George W. Bush’s eight years in office. As a result, the number of estimated deaths from the Obama administration’s drone strikes is more than four times what it was during the Bush administration — somewhere between 1,494 and 2,618.” Bergen, P & Braun, M. September 19, 2012. Drone is Obama’s weapon of choice. http://www.cnn.com/2012/09/05/opinion/bergen-obama-drone