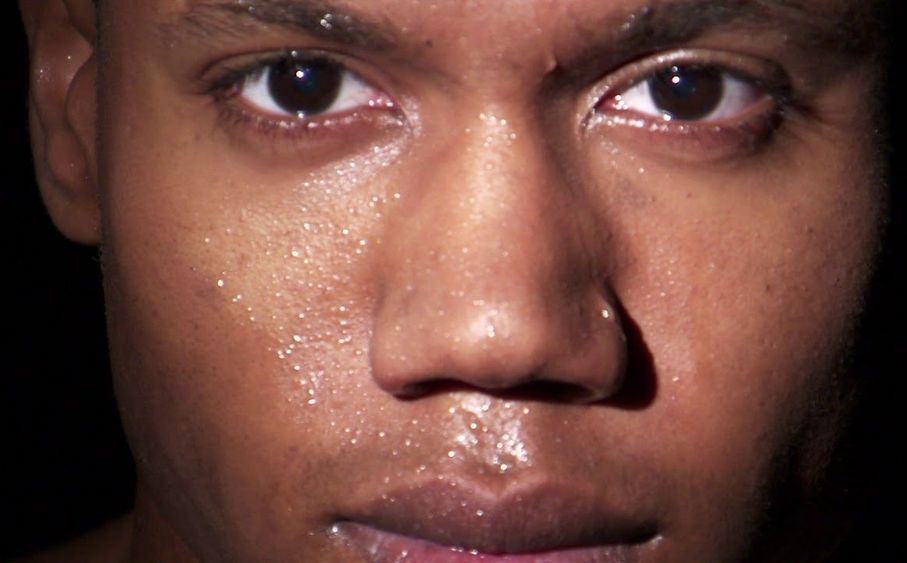

Researchers at Tufts University’s School of Engineering have developed biomaterial-based inks that change color in response to chemicals released from the body (e.g., in sweat and potentially other biofluids) or present in the surrounding environment, allowing those chemicals to be quantified. The inks can be screen printed in complex patterns and at high resolution onto textiles such as clothes, shoes, or even face masks, providing a detailed map of human response or exposure. The advance in wearable sensing, reported in Advanced Materials, could allow conventional garments and uniforms to simultaneously detect and quantify a wide range of biological conditions, molecules, and possibly pathogens over the surface of the body.

“The use of novel bioactive inks with the very common method of screen printing opens up promising opportunities for the mass-production of soft, wearable fabrics with large numbers of sensors that could be applied to detect a range of conditions,” said Fiorenzo Omenetto, corresponding author and the Frank C. Doble Professor of Engineering at Tufts’ School of Engineering. “The fabrics can end up in uniforms for the workplace, sports clothing, or even on furniture and architectural structures.”

Wearable sensing devices have attracted considerable interest for monitoring human performance and health. Many such devices incorporate electronics into wearable patches, wristbands, and other configurations that monitor either localized or overall physiological information, such as heart rate or blood glucose. The Tufts team’s research takes a different, complementary approach: non-electronic, colorimetric detection of a theoretically very large number of analytes using sensing garments that can be distributed over very large areas, from a patch to the entire body and beyond.