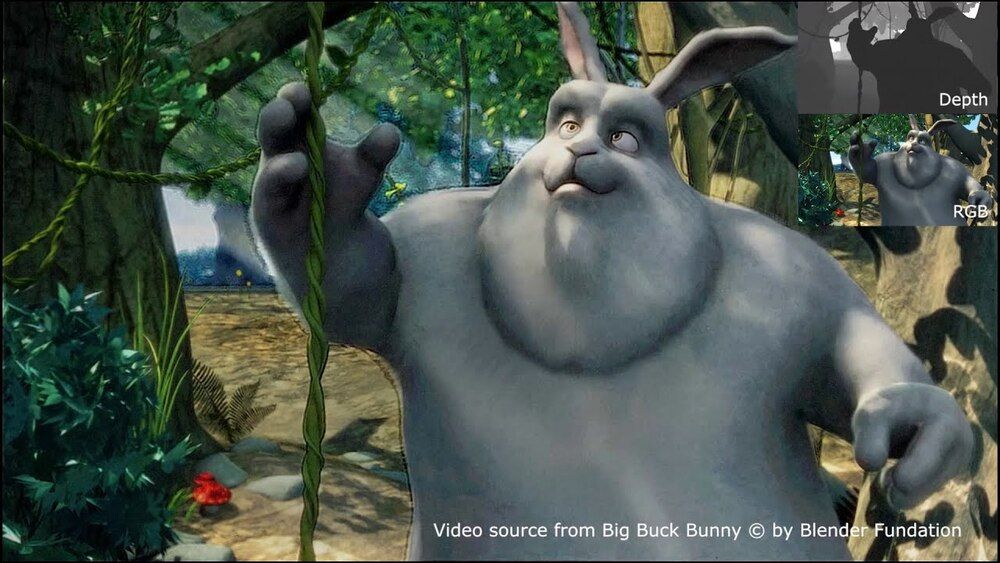

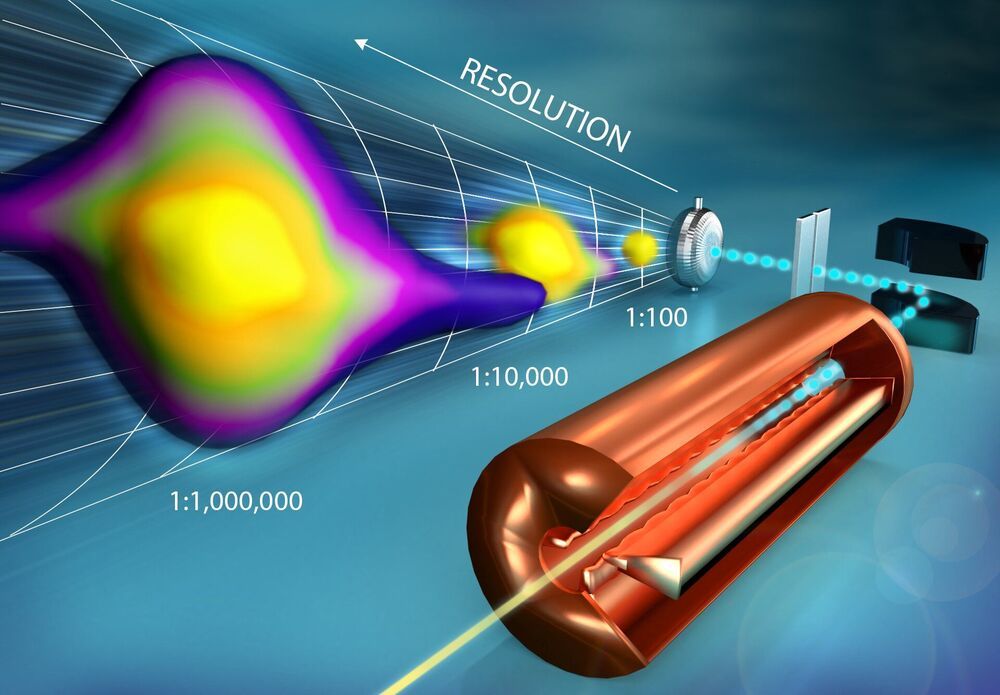

Researchers from Tokyo Metropolitan University have devised and implemented a simplified algorithm for turning freely drawn lines into holograms on a standard desktop CPU. Their approach dramatically cuts computational cost and power consumption compared with algorithms that require dedicated hardware. It is fast enough to turn handwriting into holographic lines in real time, and it produces crisp, clear images that meet industry standards. Potential applications include handwritten remote instructions superimposed on landscapes and workbenches.

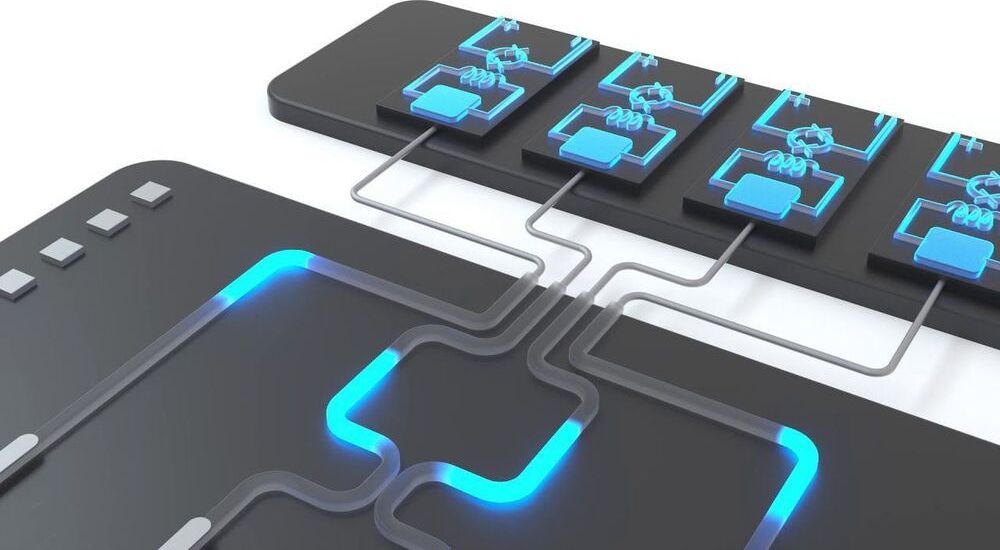

The potential applications of holography include enhancements to vital, practical tasks such as remote instructions for surgical procedures, electronic assembly on circuit boards, and directions projected onto landscapes for navigation. Making holograms available in a wide range of settings is essential to bringing this technology out of the lab and into daily life.

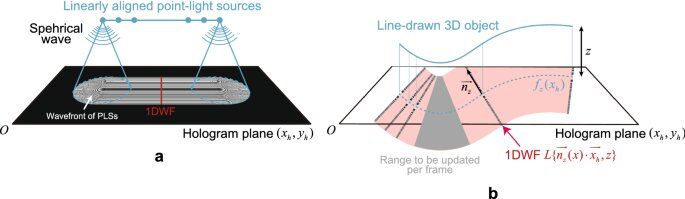

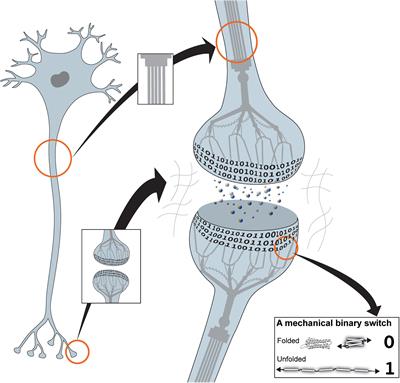

One of the major drawbacks of this state-of-the-art technology is the computational load of hologram generation. Reproducing the image quality we’ve come to expect from 2D displays in 3D is prohibitively expensive, requiring supercomputer-level number crunching. Power consumption is another issue. More widely available hardware, such as the GPUs in gaming rigs, might overcome some of these problems with raw power, but the electricity they draw is a major impediment to mobile applications. Despite improvements in available hardware, the problem can’t be solved by brute force alone.
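To see why brute force scales so badly, consider a textbook point-source approach to computer-generated holography. Here, every pixel of the hologram sums the wave contribution of every point in the scene. The sketch below uses the Fresnel approximation. It is *not* the Tokyo Metropolitan University algorithm, whose details are not described here. The function name, the pixel pitch, the wavelength, and the 10,000-point scene size are illustrative assumptions.

```python
import math

def point_source_hologram(points, width, height, pitch, wavelength):
    """Naive point-source hologram using the Fresnel approximation (illustrative only).

    points: list of (x, y, z) in metres, with z > 0 the distance from the hologram plane.
    Returns (fringe pattern as height x width nested lists, number of inner-loop evaluations).
    """
    k = math.pi / wavelength  # Fresnel phase for one point: pi * r^2 / (wavelength * z)
    holo = [[0.0] * width for _ in range(height)]
    evaluations = 0
    for py in range(height):
        y = (py - height / 2) * pitch  # pixel centre, origin at the middle of the grid
        row = holo[py]
        for px in range(width):
            x = (px - width / 2) * pitch
            acc = 0.0
            # Every pixel visits every scene point: cost = pixels x points.
            for xj, yj, zj in points:
                r2 = (x - xj) ** 2 + (y - yj) ** 2
                acc += math.cos(k * r2 / zj)
                evaluations += 1
            row[px] = acc
    return holo, evaluations

# Hypothetical example: a short stroke sampled as 100 points, 0.1 m from the hologram.
stroke = [(i * 1e-5 - 5e-4, 0.0, 0.1) for i in range(100)]
holo, n = point_source_hologram(stroke, 64, 64, pitch=8e-6, wavelength=532e-9)
print(n)  # 409600 evaluations for a tiny 64 x 64 hologram

# The same method at full-HD resolution with an assumed 10,000-point scene:
print(1920 * 1080 * 10_000)  # 20736000000 cosine evaluations per frame
```

Cost grows as the product of pixel count and scene complexity, and real-time display needs many frames per second. That is why simply adding raw hardware power runs into the energy limits described above.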