The rise of AI has been accompanied by an explosion of processing horsepower.

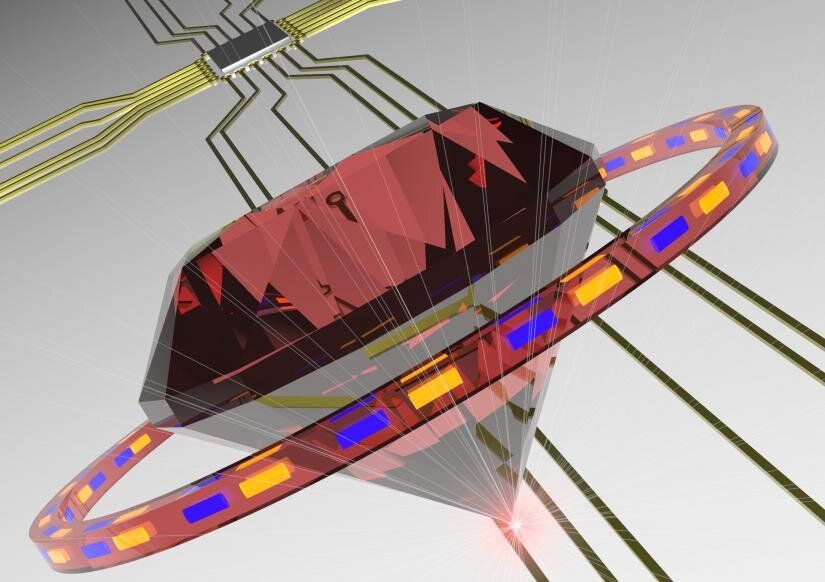

Marilyn Monroe famously sang that diamonds are a girl’s best friend, but they are also very popular with quantum scientists, and two new research breakthroughs are poised to accelerate the development of synthetic diamond-based quantum technology, improve scalability, and dramatically reduce manufacturing costs.

While silicon is traditionally used for computer and mobile phone hardware, diamond has unique properties that make it particularly useful as a base for emerging quantum technologies such as quantum supercomputers, secure communications and sensors.

However, there are two key problems: cost and the difficulty of fabricating the single-crystal diamond layer, which is thinner than one millionth of a meter.

Getting closer.

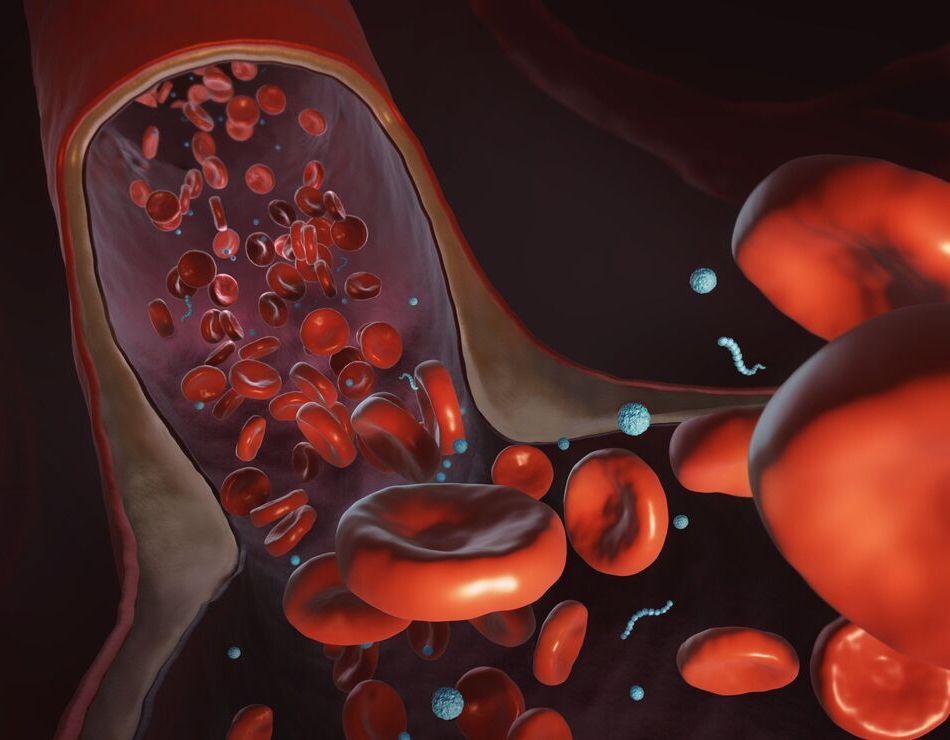

Drugs and vaccines circulate through the vascular system, reacting according to their chemical and structural nature. In some cases, they are intended to diffuse. In other cases, like cancer treatments, the intended target is highly localized. The effectiveness of a medicine, along with how much is needed and the side effects it causes, is a function of how well it can reach its target.

“A lot of medicines involve intravenous injections of drug carriers,” said Ying Li, an assistant professor of mechanical engineering at the University of Connecticut. “We want them to be able to circulate and find the right place at the right time and to release the right amount of drugs to safely protect us. If you make mistakes, there can be terrible side effects.”

Li studies nanomedicines and how they can be designed to work more efficiently. Nanomedicine involves the use of nanoscale materials, such as biocompatible nanoparticles and nanorobots, for diagnosis, delivery, sensing or actuation purposes in a living organism. His work harnesses the power of supercomputers to simulate the dynamics of nanodrugs in the bloodstream, design new forms of nanoparticles, and find ways to control them.

Others think we’re still missing fundamental aspects of how intelligence works, and that the best way to fill the gaps is to borrow from nature. For many that means building “neuromorphic” hardware that more closely mimics the architecture and operation of biological brains.

The problem is that the existing computer technology we have at our disposal looks very different from biological information processing systems, and operates on completely different principles. For a start, modern computers are digital and neurons are analog. And although both rely on electrical signals, they come in very different flavors, and the brain also uses a host of chemical signals to carry out processing.

Now, though, researchers at NIST think they’ve found a way to combine existing technologies so as to mimic the core attributes of the brain. Using this approach, they outline a blueprint for a “neuromorphic supercomputer” that could not only match but surpass the physical limits of biological systems.

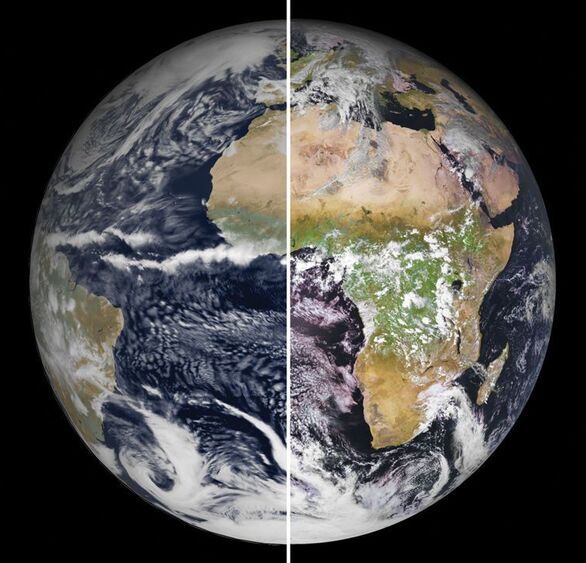

The European Union is finalizing plans for an ambitious “digital twin” of planet Earth that would simulate the atmosphere, ocean, ice, and land with unrivaled precision, providing forecasts of floods, droughts, and fires from days to years in advance. Destination Earth, as the effort is called, won’t stop there: It will also attempt to capture human behavior, enabling leaders to see the impacts of weather events and climate change on society and gauge the effects of different climate policies.

“It’s a really bold mission, I like it a lot,” says Ruby Leung, a climate scientist at the U.S. Department of Energy’s (DOE’s) Pacific Northwest National Laboratory. By rendering the planet’s atmosphere in boxes only 1 kilometer across, a scale many times finer than that of existing climate models, Destination Earth can base its forecasts on far more detailed real-time data than ever before. The project, which will be described in detail in two workshops later this month, will start next year and run on one of the three supercomputers that Europe will deploy in Finland, Italy, and Spain.
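
To give a rough sense of what 1-kilometer boxes mean, the sketch below counts how many square columns of a given spacing it takes to tile Earth's surface and compares the 1 km figure with a coarser grid. The 100 km comparison spacing is an assumption chosen only for illustration, not a figure from Destination Earth or any specific model, and the count ignores vertical levels and the geometry of real model grids.

```python
import math

# Mean radius of Earth in kilometers (approximate).
EARTH_RADIUS_KM = 6371.0


def surface_area_km2(radius_km: float = EARTH_RADIUS_KM) -> float:
    """Surface area of a sphere: 4 * pi * r^2."""
    return 4.0 * math.pi * radius_km ** 2


def column_count(grid_spacing_km: float) -> int:
    """Rough number of square columns of side `grid_spacing_km` needed to cover the surface."""
    if grid_spacing_km <= 0:
        raise ValueError("grid spacing must be positive")
    return round(surface_area_km2() / grid_spacing_km ** 2)


if __name__ == "__main__":
    fine = column_count(1.0)      # the 1 km boxes described for Destination Earth
    coarse = column_count(100.0)  # hypothetical coarser grid, for comparison only
    print(f"1 km grid:   ~{fine:,} columns")
    print(f"100 km grid: ~{coarse:,} columns")
    print(f"ratio:       ~{fine / coarse:,.0f}x")
```

Because the column count scales with the inverse square of the spacing, going from 100 km to 1 km multiplies the number of horizontal columns by about 10,000, before any vertical levels are counted.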

Destination Earth rose out of the ashes of Extreme Earth, a proposal led by the European Centre for Medium-Range Weather Forecasts (ECMWF) for a billion-euro flagship research program. The European Union ultimately canceled the flagship program, but retained interest in the idea. Fears that Europe was falling behind China, Japan, and the United States in supercomputing led to the European High-Performance Computing Joint Undertaking, an €8 billion investment to lay the groundwork for eventual “exascale” machines capable of 1 billion billion calculations per second. The dormant Extreme Earth proposal offered a perfect use for such capacity. “This blows a soul into your digital infrastructure,” says Peter Bauer, ECMWF’s deputy director of research, who coordinated Extreme Earth and has been advising the European Union on the new program.

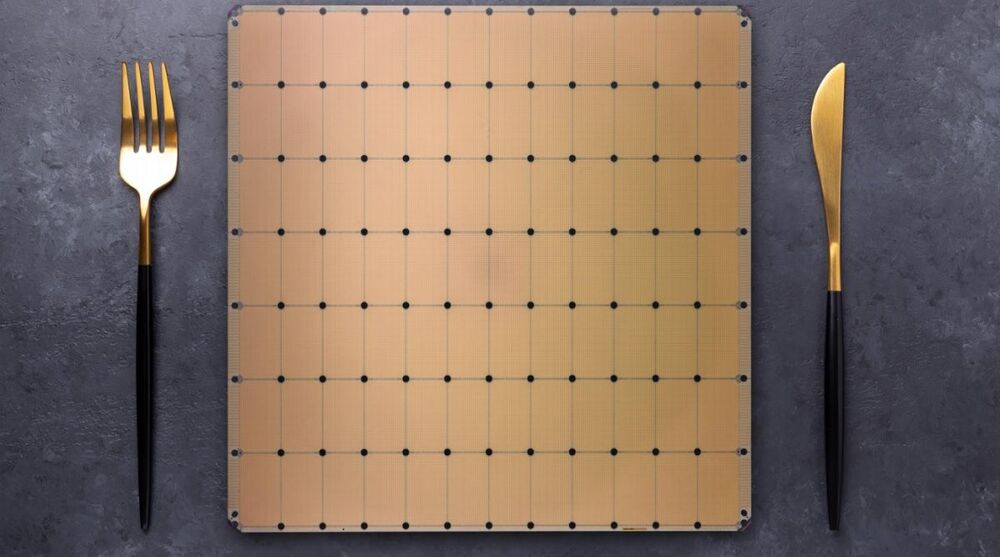

Cerebras Systems has unveiled its new Wafer Scale Engine 2 processor with a record-setting 2.6 trillion transistors and 850,000 AI-optimized cores. It’s built for supercomputing tasks, and it’s the second time since 2019 that Los Altos, California-based Cerebras has unveiled a chip that is basically an entire wafer.

Chipmakers normally slice a wafer from a 12-inch-diameter ingot of silicon to process in a chip factory. Once processed, the wafer is sliced into hundreds of separate chips that can be used in electronic hardware.
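
The “hundreds of separate chips” figure follows from simple geometry. A widely used first-order estimate of gross dies per wafer is π·d²/(4·A) − π·d/√(2·A), where d is the wafer diameter and A is the die area: the first term divides wafer area by die area, and the second subtracts partial dies lost around the round edge. The sketch below applies it to a 300 mm wafer (the metric size usually quoted for 12-inch wafers); the die areas used are hypothetical, and the estimate ignores scribe lines, edge exclusion, and defects.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate of whole dies that fit on a round wafer.

    Ignores scribe lines, edge exclusion and defects, so real counts are lower.
    """
    if wafer_diameter_mm <= 0 or die_area_mm2 <= 0:
        raise ValueError("dimensions must be positive")
    # Wafer area divided by die area.
    area_term = math.pi * wafer_diameter_mm ** 2 / (4 * die_area_mm2)
    # Approximate number of partial dies wasted along the circumference.
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(area_term - edge_loss))


if __name__ == "__main__":
    for die_area in (100, 800):  # hypothetical die sizes in mm^2
        print(f"{die_area} mm^2 die: ~{gross_dies_per_wafer(300, die_area)} dies per 300 mm wafer")
```

With a hypothetical 100 mm² die, `gross_dies_per_wafer(300, 100)` returns 640; a hypothetical 800 mm² die leaves only 64. Cerebras sidesteps the dicing step entirely by keeping the whole wafer as one chip.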

But Cerebras, started by SeaMicro founder Andrew Feldman, takes that wafer and makes a single, massive chip out of it. Each piece of the chip, dubbed a core, is interconnected in a sophisticated way to other cores. The interconnections are designed to keep all the cores functioning at high speeds so the transistors can work together as one.
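
The article does not describe the interconnect in detail. Cerebras has described its on-wafer fabric as a two-dimensional mesh, so the sketch below models cores as points on a grid, each linked only to its immediate neighbors, with messages routed first along the row and then along the column (dimension-order, or XY, routing). This is a simplified, hypothetical model for illustration; the function names are invented, and it is not Cerebras's actual routing logic.

```python
from typing import List, Tuple

Coord = Tuple[int, int]  # (row, column) position of a core in the mesh


def neighbors(core: Coord, rows: int, cols: int) -> List[Coord]:
    """Cores directly linked to `core` in a rows x cols 2D mesh (no wraparound links)."""
    r, c = core
    candidates = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(rr, cc) for rr, cc in candidates if 0 <= rr < rows and 0 <= cc < cols]


def xy_route(src: Coord, dst: Coord) -> List[Coord]:
    """Dimension-order routing: walk along the row (changing column) first,
    then along the column (changing row). Returns every core visited."""
    (r, c), (dr, dc) = src, dst
    path = [(r, c)]
    step = 1 if dc > c else -1
    while c != dc:
        c += step
        path.append((r, c))
    step = 1 if dr > r else -1
    while r != dr:
        r += step
        path.append((r, c))
    return path


if __name__ == "__main__":
    n = 4
    path = xy_route((0, 0), (n - 1, n - 1))
    print("corner-to-corner path:", path)
    print("hops:", len(path) - 1)  # 2 * (n - 1) in an n x n mesh
```

In this model a message between opposite corners of an n × n mesh needs 2(n − 1) hops, one reason placing communicating work on nearby cores tends to matter on a wafer-sized grid.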

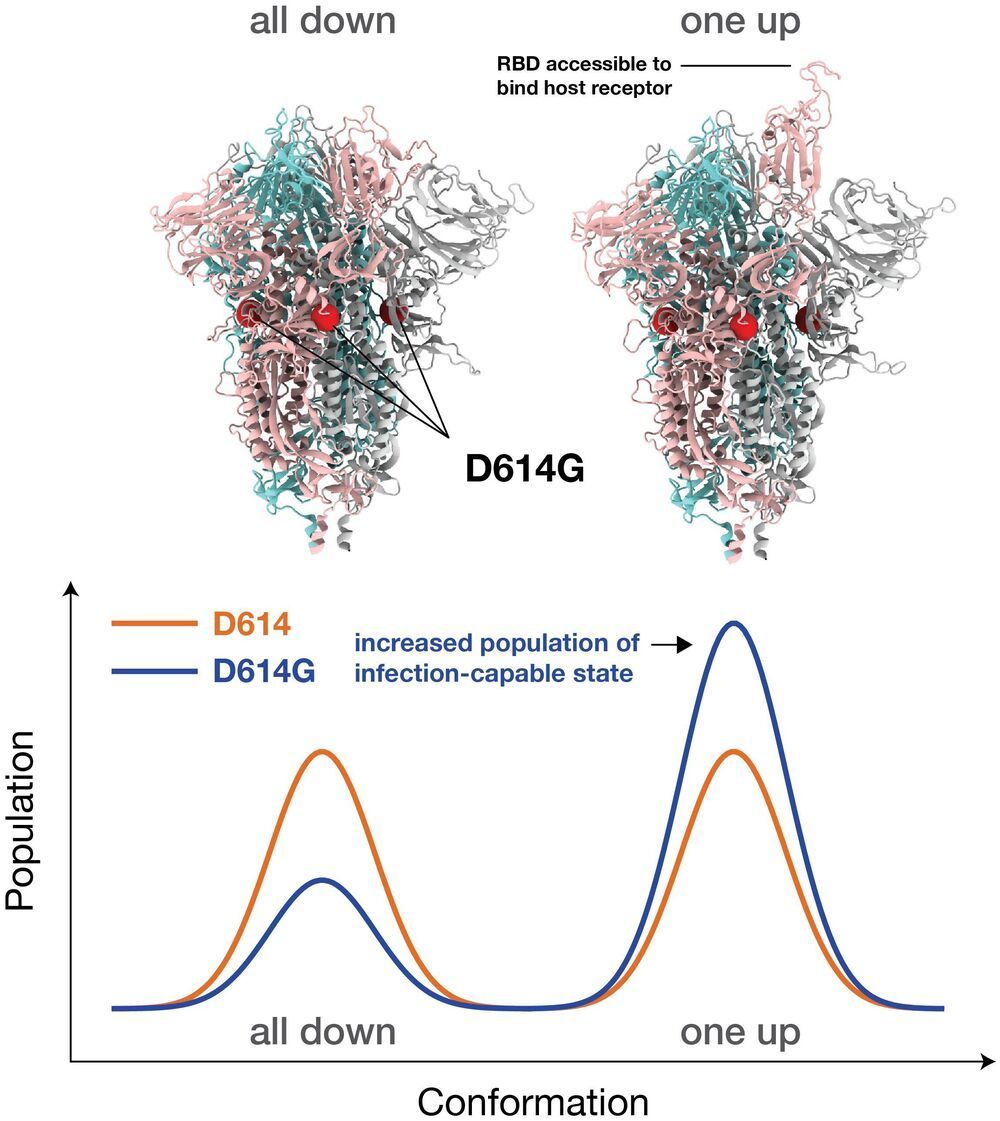

Large-scale supercomputer simulations at the atomic level show that the dominant G form variant of the COVID-19-causing virus is more infectious than other variants partly because it binds more readily to its target host receptor in the body. These research results from a Los Alamos National Laboratory-led team illuminate the mechanism of both infection by the G form and antibody resistance against it, which could help in future vaccine development.

“We found that the interactions among the basic building blocks of the Spike protein become more symmetrical in the G form, and that gives it more opportunities to bind to the receptors in the host—in us,” said Gnana Gnanakaran, corresponding author of the paper published today in Science Advances. “But at the same time, that means antibodies can more easily neutralize it. In essence, the variant puts its head up to bind to the receptor, which gives antibodies the chance to attack it.”

Researchers knew that the variant, also known as D614G, was more infectious and could be neutralized by antibodies, but they didn’t know how. Simulating more than a million individual atoms and requiring about 24 million CPU hours of supercomputer time, the new work provides molecular-level detail about the behavior of this variant’s Spike.
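
To put 24 million CPU hours in perspective, the helper below converts a CPU-hour budget into ideal wall-clock time for a given number of cores. The core counts are hypothetical, since the article does not say what allocation the Los Alamos team used, and the calculation assumes perfect parallel scaling.

```python
def wall_clock_days(cpu_hours: float, cores: int) -> float:
    """Ideal wall-clock days if the work is split evenly across `cores` with perfect scaling."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return cpu_hours / cores / 24.0


if __name__ == "__main__":
    budget = 24_000_000  # CPU hours, as reported for the D614G simulations
    for cores in (10_000, 100_000, 1_000_000):  # hypothetical allocations
        print(f"{cores:>9,} cores -> {wall_clock_days(budget, cores):,.1f} days")
```

On a hypothetical 100,000 cores the budget works out to about 10 days; on a single core it would take roughly 2,700 years.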

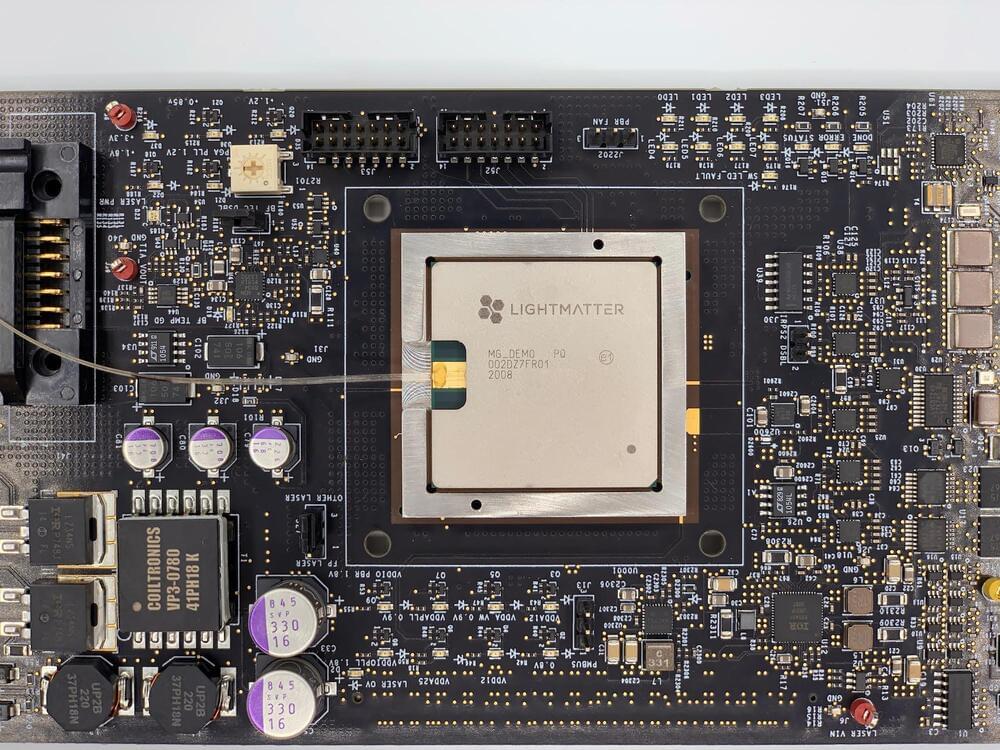

The Lightmatter photonic computer is 10 times faster than the fastest NVIDIA artificial intelligence GPU while using far less energy. And it has a runway for boosting that massive advantage by a factor of 100, according to CEO Nicholas Harris.

In the process, it may just restart a moribund Moore’s Law.

Or completely blow it up.

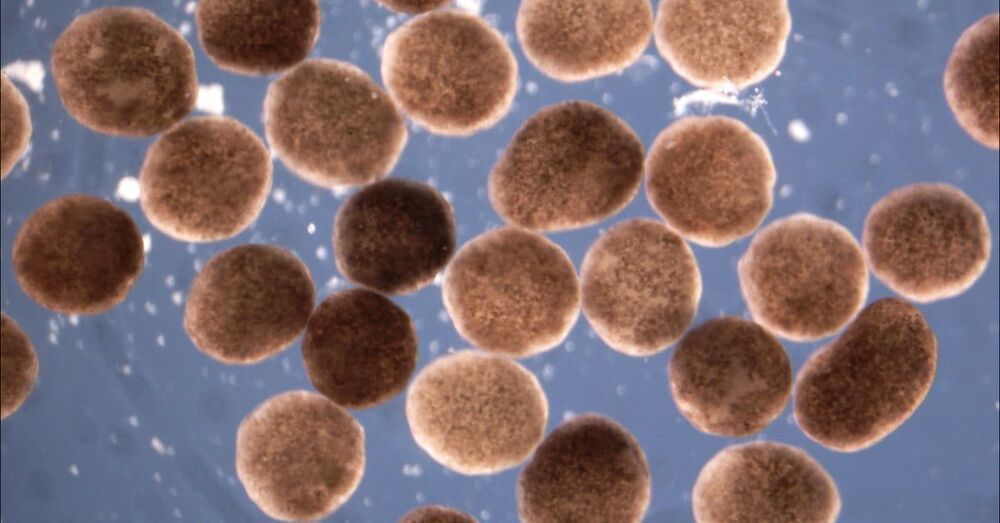

Scientists at Tufts University have created a strange new hybrid biological/mechanical organism that’s made of living cells but operates like a robot.

As the Tufts scientists were creating the physical xenobot organisms, researchers working in parallel at the University of Vermont used a supercomputer to run simulations to try to find ways of assembling these living robots so that they perform useful tasks.
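
Evolutionary algorithms are one common way to run this kind of simulated design search: generate candidate body plans, score each one in simulation, and keep the ones that perform best. The toy (1 + λ) search below illustrates that loop on a 5 × 5 grid of cells. The cell encoding, grid size, and especially the `fitness` function are hypothetical stand-ins; a real pipeline would score each design with a physics simulation, which is where the heavy computation comes in.

```python
import random
from typing import List

# Hypothetical cell encoding: 0 = empty, 1 = passive cell, 2 = contractile cell.
Grid = List[List[int]]
ROWS, COLS = 5, 5
CELL_TYPES = (0, 1, 2)


def random_design(rng: random.Random) -> Grid:
    """A random body plan: every grid position gets a random cell type."""
    return [[rng.choice(CELL_TYPES) for _ in range(COLS)] for _ in range(ROWS)]


def mutate(design: Grid, rng: random.Random) -> Grid:
    """Return a copy of `design` with one randomly chosen position reassigned."""
    child = [row[:] for row in design]
    r, c = rng.randrange(ROWS), rng.randrange(COLS)
    child[r][c] = rng.choice(CELL_TYPES)
    return child


def fitness(design: Grid) -> int:
    """Hypothetical stand-in for a physics simulation: rewards contractile
    cells in the left half and passive cells in the right half."""
    score = 0
    for row in design:
        for c, cell in enumerate(row):
            if c < COLS // 2 and cell == 2:
                score += 1
            elif c >= COLS // 2 and cell == 1:
                score += 1
    return score


def evolve(generations: int = 200, offspring: int = 8, seed: int = 0) -> Grid:
    """(1 + lambda) search: each generation, mutate the parent `offspring` times
    and keep the best child if it scores at least as well as the parent."""
    rng = random.Random(seed)
    parent = random_design(rng)
    best = fitness(parent)
    for _ in range(generations):
        children = [mutate(parent, rng) for _ in range(offspring)]
        champion = max(children, key=fitness)
        champion_score = fitness(champion)
        if champion_score >= best:
            parent, best = champion, champion_score
    return parent


if __name__ == "__main__":
    final = evolve()
    print("best score:", fitness(final))
    for row in final:
        print(" ".join(".PC"[cell] for cell in row))
```

Because a child replaces the parent only when it scores at least as well, the best score never decreases from one generation to the next.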