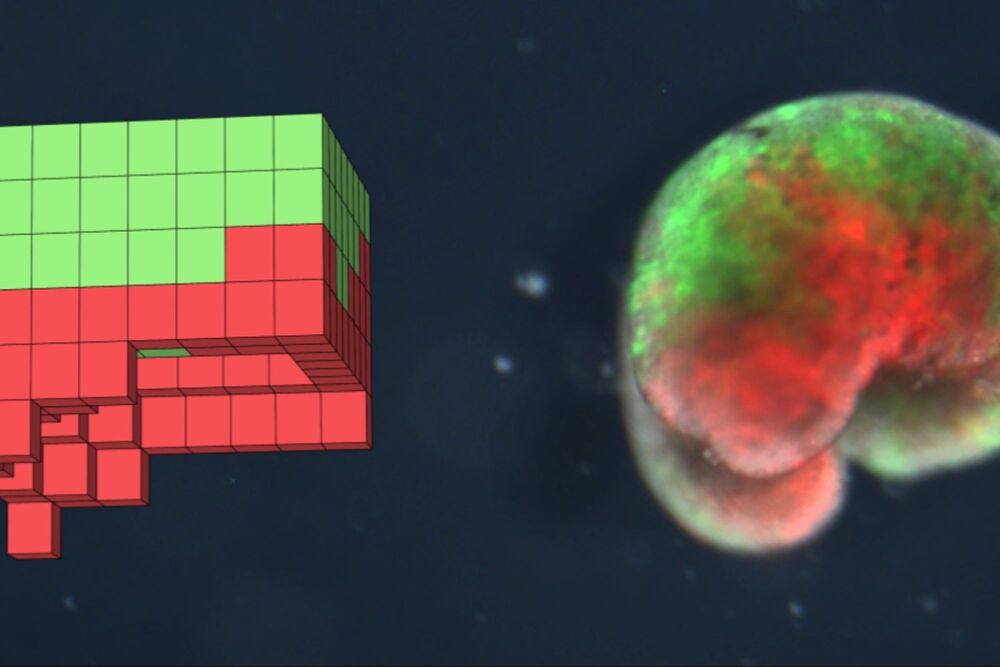

“These are novel living machines. They are not a traditional robot or a known species of animals. It is a new class of artifacts: a living and programmable organism,” says Joshua Bongard, an expert in computer science and robotics at the University of Vermont (UVM) and one of the leaders of the research.

As the scientist explains, these living bots do not look like traditional robots: they have no shiny gears or robotic arms. Instead, they look like a tiny blob of pink flesh in motion, a biological machine that researchers say can accomplish things traditional robots cannot.

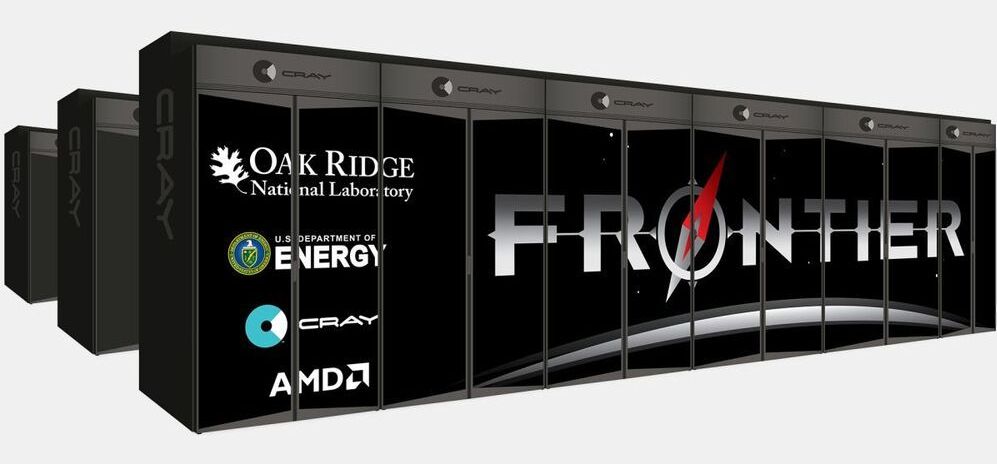

Xenobots are synthetic organisms designed automatically by a supercomputer to perform a specific task. The design comes from an evolutionary algorithm, a process of trial and error, and the organisms are then built from a combination of different biological tissues.
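For readers curious about what "trial and error" means here, the general idea of an evolutionary algorithm can be sketched in a few lines of Python. This is a toy illustration only, not the researchers' actual software: the design is assumed to be a simple row of cells (0 for passive tissue, 1 for contractile tissue), and the `fitness` function is a made-up stand-in for the physics simulation a real system would need. All names and parameters below are hypothetical.

```python
import random

def fitness(design):
    # Hypothetical score standing in for a simulated task:
    # count neighbouring cells that differ in tissue type.
    return sum(1 for a, b in zip(design, design[1:]) if a != b)

def mutate(design, rng, rate=0.1):
    # Flip each cell's tissue type with a small probability.
    return [1 - cell if rng.random() < rate else cell for cell in design]

def evolve(n_cells=12, pop_size=20, generations=50, seed=0):
    rng = random.Random(seed)
    # Start from random candidate designs.
    population = [[rng.randint(0, 1) for _ in range(n_cells)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Keep the better half, then refill with mutated copies of survivors.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        children = [mutate(rng.choice(survivors), rng)
                    for _ in range(pop_size - len(survivors))]
        population = survivors + children
    return max(population, key=fitness)

best = evolve()
print(best, fitness(best))
```

Each generation discards the weaker candidates and slightly varies the stronger ones, so the best score in the population never gets worse over time. That is the "trial and error" loop in miniature.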