I get this question a lot. Today, I was asked to write an answer at Quora.com, a Q&A web site at which I am the local cryptocurrency expert. It’s time to address this issue here at Lifeboat.

Question

I have many PCs lying around my home and office. Some are current models with fast Intel CPUs. Can I mine Bitcoin to make a little money on the side?

Answer

Other answers focus on the cost of electricity, the number of hashes or teraflops achieved by a computer CPU or the size of the current Bitcoin reward. But, you needn’t dig into any of these details to understand this answer.

You can find the mining software to mine Bitcoin or any other coin on any equipment. Even a phone or wristwatch. But, don’t expect to make money. Mining Bitcoin with an x86 CPU (Core or Pentium equivalent) is never cost effective—not even when Bitcoin was trading at nearly $20,000. A computer with a fast $1500 graphics card will bring you closer to profitability, but not by much.

The problem isn’t that an Intel or AMD processor is too weak to mine for Bitcoin. It’s just as powerful as it was in the early days of Bitcoin. Rather, the problem is that the mining game is a constantly evolving competition. Miners with the fastest hardware and the cheapest power are chasing a shrinking pool of rewards.

The problem arises from a combination of things:

- There is a fixed rate of rewards available to all miners—and yet, over the past 2 years, hundreds of thousands of new CPUs have been added to the task. You are competing with all of them.

- Despite a large drop in the Bitcoin exchange rate (from $19,783.21 on Dec. 17, 2017), we know that it is generally a rising commodity, because both speculation and gradual grassroots adoption outpace the very gradual increase in supply. The rising value of Bitcoin attracts even more individuals and organizations into the game of mining. They are all fighting for a pie that is shrinking in overall size. Here’s why…

- The math (a built-in mechanism) halves the size of rewards every 4 years. We are currently between two halving events; the next one will occur in May 2020. This halving forces miners to be even more efficient to eke out any reward.

- In the past few years, we have seen a race among miners and mining pools to acquire the best hardware for the task. At first, it was any CPU that could crunch away at the math. Then, miners quickly discovered that an nVidia graphics processor was better suited to the task. Now, ASICs have taken over: specialized, large-scale integrated circuits that were designed specifically for mining.

- Advanced mining pools have the capacity to instantly switch between mining for Bitcoin, Ethereum Classic, Litecoin, Bitcoin Cash and dozens of other coins depending upon conditions that change minute-by-minute. Although you can find software that does the same thing, it is unlikely that you can outsmart the big boys at this game, because they have super-fast internet connections and constant software maintenance.

- Some areas of the world have a surplus of wind, water or solar energy. In fact, there are regions where electricity is free.* Although regional governments would rather that this surplus be used to power homes and businesses (benefiting the local economy), electricity is fungible! And so, local entrepreneurs often “rent” out their cheap electricity by offering shelf space to miners from around the world. Individuals with free or cheap electricity (and some, with a cold climate to keep equipment cool) split this energy savings with the miner. This further stacks the deck against the guy with a fast PC in New York or Houston.
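The halving rule in the list above is easy to sketch in code. The snippet below is a minimal illustration, not mining software: it assumes the well-known protocol constants (a 50 BTC starting subsidy, a halving every 210,000 blocks, and roughly 144 blocks per day at the 10-minute target) and ignores transaction fees. The function names are my own.

```python
# Sketch of the Bitcoin block-subsidy schedule (transaction fees ignored).
HALVING_INTERVAL = 210_000               # blocks between halvings (about 4 years)
INITIAL_SUBSIDY_SATS = 50 * 100_000_000  # 50 BTC, expressed in satoshis
BLOCKS_PER_DAY = 144                     # assumes the 10-minute block target holds


def block_subsidy_sats(height: int) -> int:
    """New coins (in satoshis) created by the block at this height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0  # the subsidy has been halved away entirely
    return INITIAL_SUBSIDY_SATS >> halvings  # each halving is one right shift


def daily_issuance_btc(height: int) -> float:
    """Approximate new BTC per day shared by ALL miners at this height."""
    return BLOCKS_PER_DAY * block_subsidy_sats(height) / 100_000_000


for height in (0, 210_000, 420_000, 630_000):
    print(height, daily_issuance_btc(height))
```

Block 630,000 is the halving expected in May 2020. Whatever hardware the world throws at the problem, the number printed for a given height is the entire pie; it does not grow when more miners join.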

Of course, with Bitcoin generally rising in value (over the long term), this provides continued incentive to mine. It is the only thing that makes this game worthwhile to the individuals who participate.

So, while it is not impossible to profit by mining on a personal computer, if you don’t have very cheap power, the very latest specialized mining rigs, and the skills to constantly tweak your configuration—then your best bet is to join a reputable mining pool. Take your fraction of the mining rewards and let them take a small cut. Cash out frequently, so that you are not locked into their ability to resist hacking or remain solvent.

Related: Largest US operation mines 0.4% of daily Bitcoin rewards. Listen to the owner describe the efficiency of his ASIC processors and the enormous capacity he is adding. This will not produce more Bitcoin. The total reward rate is fixed and falling every 4 years. His build-out will consume a massive amount of electricity, but it will only grab share from other miners, and encourage them to increase consumption just to keep up.
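To see why added capacity only reshuffles the pie, here is a rough sketch. It assumes that expected rewards are proportional to each miner's share of total hash power, that the daily reward is 1,800 BTC (144 blocks at the current 12.5 BTC subsidy, fees ignored), and that difficulty has adjusted so blocks still arrive every 10 minutes. The miner names and the doubling scenario are hypothetical.

```python
# Sketch: a fixed daily reward split in proportion to hash power.
DAILY_REWARD_BTC = 1800.0  # 144 blocks x 12.5 BTC; transaction fees ignored


def expected_daily_rewards(hashrates: dict, daily_reward_btc: float) -> dict:
    """Split a fixed daily reward in proportion to each miner's hash power."""
    total = sum(hashrates.values())
    return {name: daily_reward_btc * rate / total for name, rate in hashrates.items()}


# Hash power in arbitrary units; only the ratios matter.
before = expected_daily_rewards({"big_farm": 0.4, "everyone_else": 99.6}, DAILY_REWARD_BTC)
# Hypothetical build-out: the big farm doubles its capacity.
after = expected_daily_rewards({"big_farm": 0.8, "everyone_else": 99.6}, DAILY_REWARD_BTC)

for label, payout in (("before", before), ("after", after)):
    print(label, {k: round(v, 2) for k, v in payout.items()}, "total:", round(sum(payout.values()), 2))
```

The total stays at 1,800 BTC in both cases. The farm's slice grows only because everyone else's slice shrinks by the same amount.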

* Several readers have pointed out that they have access to “free power” in their office or, more typically, in a college dormitory. While this may be ‘free’ to the student or employee, it is most certainly not free. In the United States, even the most efficient mining results in a 20 or 30% return on electric cost, and that comes with the added cost of constant equipment updates. This is not the case for personal computers. They are sorely unprofitable…

So, for example, if you have 20 Intel computers cooking for 24 hours each day, you might receive $115 in rewards at the end of a year, along with an electric bill for $3500. Long before this happens, you will have tripped the circuit breaker in your dorm room or received an unpleasant memo from your boss’s boss.
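For anyone who wants to plug in their own numbers, the arithmetic behind that example is sketched below. The defaults are the rough figures from the paragraph above (20 PCs, $115 in rewards, a $3500 electric bill); the function name and the returned fields are my own, and hardware cost is left out entirely.

```python
# Sketch: annual profit-and-loss for hobby mining, hardware cost excluded.
def annual_mining_summary(pcs: int = 20,
                          rewards_usd: float = 115.0,
                          power_bill_usd: float = 3500.0) -> dict:
    """Compare a year's mining rewards against the year's electric bill."""
    net = rewards_usd - power_bill_usd
    return {
        "net_usd": net,                                   # negative means a loss
        "net_per_pc_usd": net / pcs,
        "fraction_of_bill_recovered": rewards_usd / power_bill_usd,
    }


summary = annual_mining_summary()
print(summary)
```

With the figures above, the rewards cover only about 3% of the electric bill, for a loss of $3385 a year, or roughly $169 per machine.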

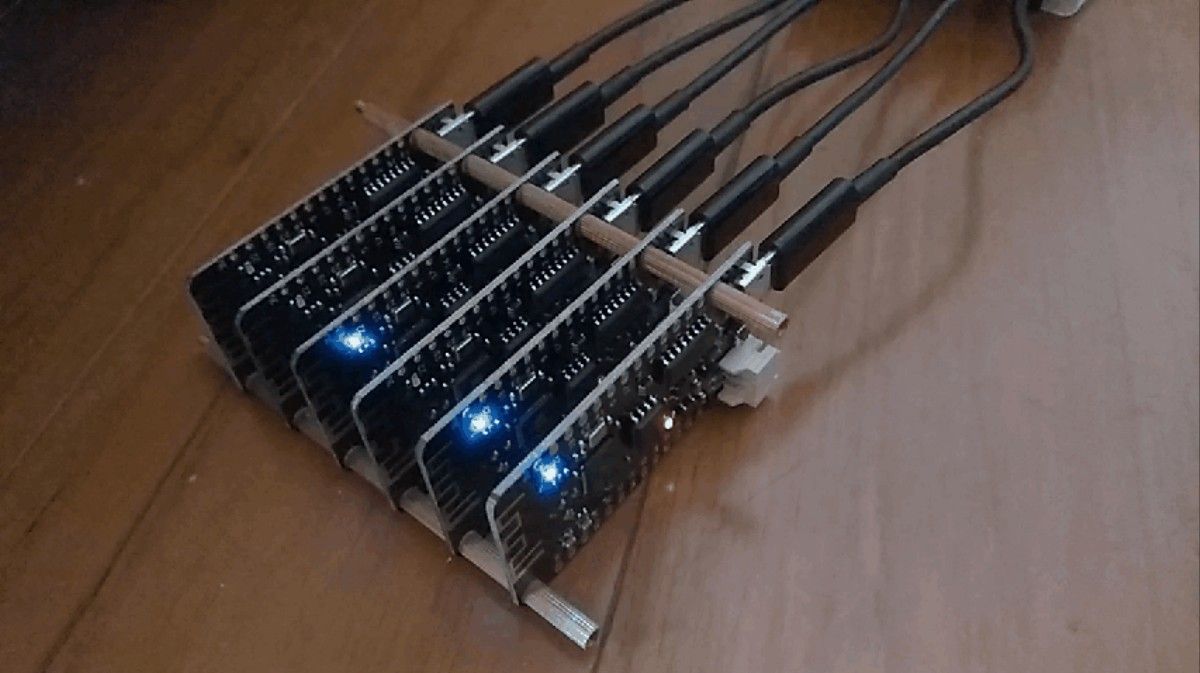

Bitcoin mining farms —

- Professional mining pool (above photo and top row below)

- Amateur mining rigs (bottom row below)

This is what you are up against. Even the amateur mining operations depicted in the bottom row require access to very cheap electricity, the latest processors and the skill to expertly maintain hardware, software and the real-time mining decision process.

Philip Raymond co-chairs CRYPSA, hosts the New York Bitcoin Event and is keynote speaker at Cryptocurrency Conferences. He sits on the New Money Systems board of Lifeboat Foundation. Book a presentation or consulting engagement.