But where advocates like Foxx mostly see the benefits of transhumanism, some critics say its risks raise ethical concerns, and others point to its potential to exacerbate social inequality.

Foxx says humans have long used technology to make up for physical limitations: think of prosthetics, hearing aids, or even telephones. More controversial technology aimed at enhancing or even extending life, like cryogenic freezing, is also charted terrain.

The transhumanist movement isn’t large, but Foxx says there is a growing awareness and interest in technology used to enhance or supplement physical capability.

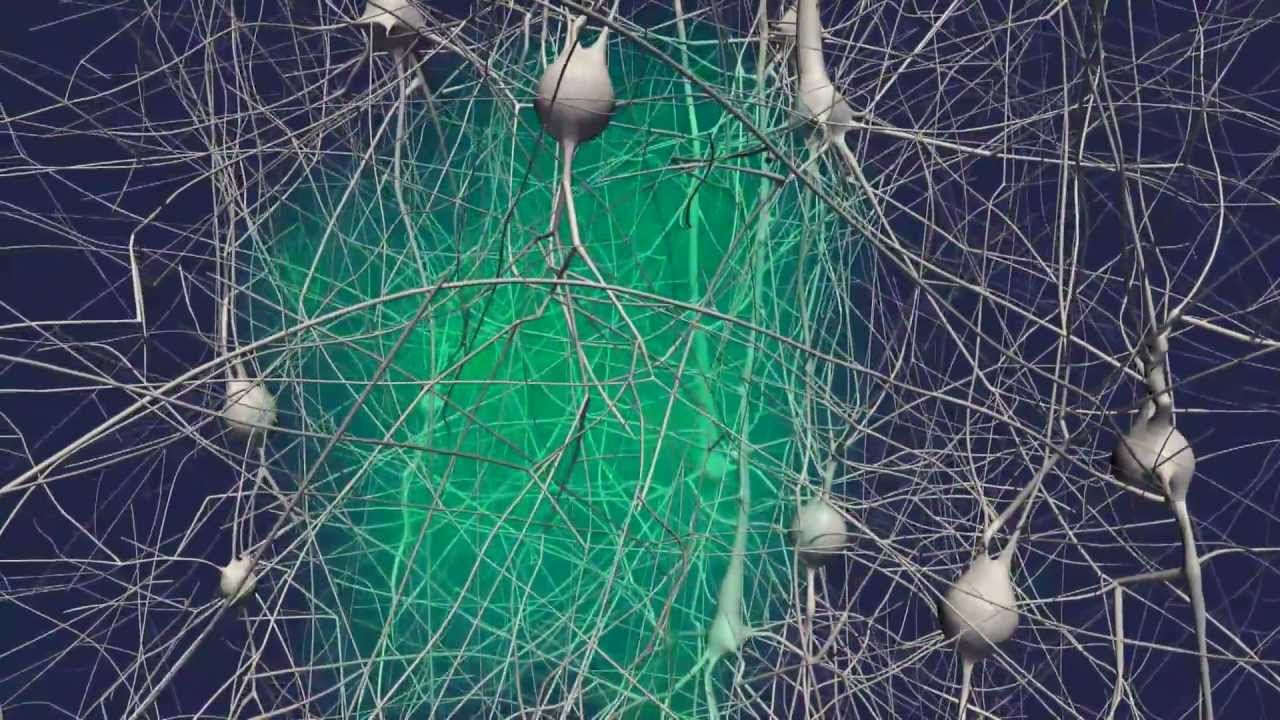

This is perhaps unsurprising given that we live in an era when scientists are working to create artificial intelligence that can read your mind, and millions of people spend most of their day clutching a smartphone, in effect a handheld supercomputer.

Credit: HPE
