If you shrank yourself down and entered a proton, you’d experience some of the most intense pressures found anywhere in the universe.

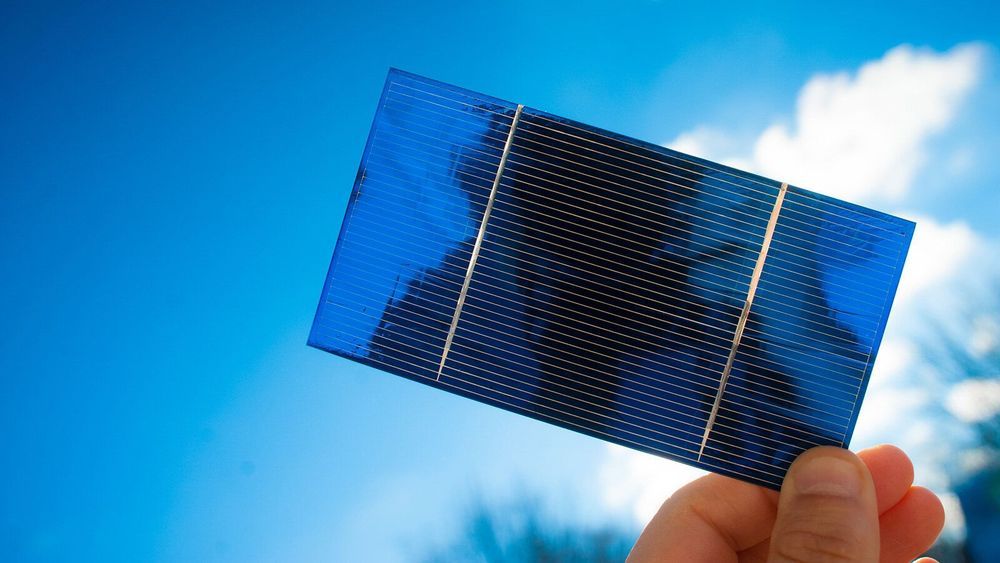

Finding the best light-harvesting chemicals for use in solar cells can feel like searching for a needle in a haystack. Over the years, researchers have developed and tested thousands of different dyes and pigments to see how they absorb sunlight and convert it to electricity. Sorting through all of them requires an innovative approach.

Now, thanks to a study that combines the power of supercomputing with data science and experimental methods, researchers at the U.S. Department of Energy’s (DOE) Argonne National Laboratory and the University of Cambridge in England have developed a novel “design to device” approach to identify promising materials for dye-sensitized solar cells (DSSCs). DSSCs can be manufactured with low-cost, scalable techniques, allowing them to reach competitive performance-to-price ratios.

The team, led by Argonne materials scientist Jacqueline Cole, who is also head of the Molecular Engineering group at the University of Cambridge’s Cavendish Laboratory, used the Theta supercomputer at the Argonne Leadership Computing Facility (ALCF) to pinpoint five high-performing, low-cost dye materials from a pool of nearly 10,000 candidates for fabrication and device testing. The ALCF is a DOE Office of Science User Facility.

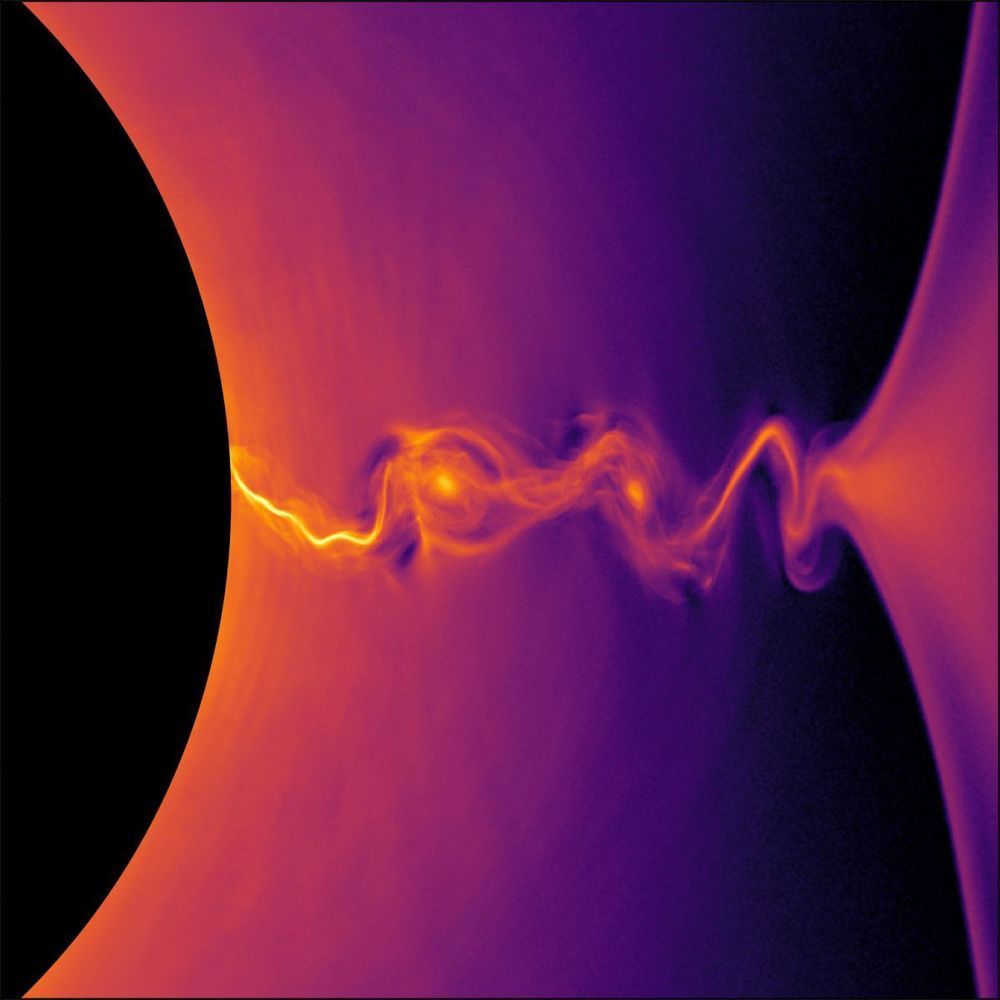

The gravitational pull of a black hole is so strong that nothing, not even light, can escape once it gets too close. However, there is one way to escape a black hole — but only if you’re a subatomic particle.

As black holes gobble up the matter in their surroundings, they also spit out powerful jets of hot plasma containing electrons and positrons, the antimatter equivalent of electrons. Just before those lucky incoming particles reach the event horizon, or the point of no return, they begin to accelerate. Moving at close to the speed of light, these particles ricochet off the event horizon and get hurled outward along the black hole’s axis of rotation.

Known as relativistic jets, these enormous and powerful streams of particles emit light that we can see with telescopes. Although astronomers have observed the jets for decades, no one knows exactly how the escaping particles get all that energy. In a new study, researchers with Lawrence Berkeley National Laboratory (LBNL) in California shed new light on the process.

Dr. Rico explained: “When we compare human genomes from different people, we see that they are way more different than we initially expected when the Human Genome Project was declared to be ‘completed’ in 2003. Among the main contributors to these differences are the so-called Copy Number Variable (CNV) regions. CNV regions are present in different copy numbers in each individual, and their variability can be greater in some human populations than in others. The number of copies of CNV regions can contribute to both normal phenotypic variability in populations and susceptibility to certain diseases.”

Research has shown a direct relationship between mutations in introns and variability in human populations.

One of the greatest challenges of genomics is to reveal what role the “dark side” of the human genome plays: those regions where it has not yet been possible to find specific functions. The role that introns play within that immense part of the genome is especially mysterious. The introns, which represent almost half the size of the human genome, are constitutive parts of genes that alternate with regions that code for proteins, called exons.

Research published in PLOS Genetics, led by Alfonso Valencia, ICREA, director of the Life Sciences department of the Barcelona Supercomputing Center-National Supercomputing Center (BSC), and Dr. Daniel Rico of the Institute of Cellular Medicine, Newcastle University, has analysed how introns are affected by copy number variants (CNVs). CNVs are genomic variants that result in the presence (even in multiple copies) or absence of regions of the genome in different individuals.

Trying to streamline an operation that spends more than $5 billion a year on developing new drugs, Novartis dispatched teams to jetmaker Boeing Co. and Swissgrid AG, a power company, to observe how they use technology-laden crisis centers to prevent failures and blackouts. That led to the design of something that looks like the pharma version of NASA’s Mission Control: a global surveillance hub where supercomputers map and chart Novartis’s network of 500 drug studies in 70 countries, trying to predict potential problems on a minute-by-minute basis.

A third of development costs comes from clinical trials. Novartis wants to make them cheaper and faster.

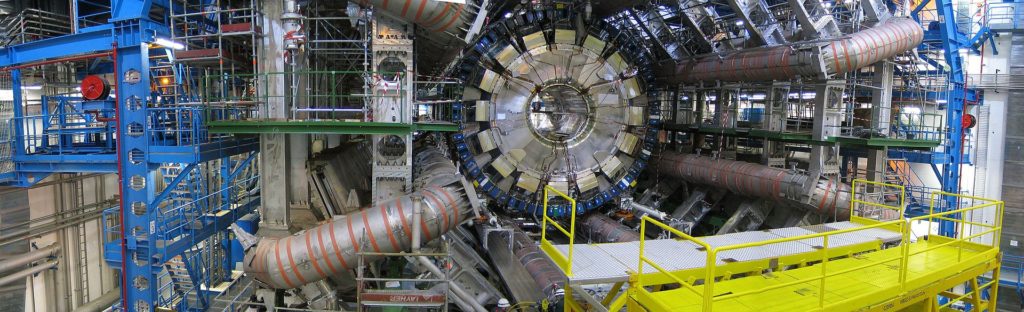

CERN has revealed plans for a gigantic successor to the giant atom smasher LHC, the biggest machine ever built. Particle physicists will never stop asking for ever larger big bang machines. But where are the limits for ordinary society concerning costs and existential risks?

CERN boffins are already conducting a mega experiment at the LHC, a 27 km circular particle collider, at a cost of several billion euros, to study conditions of matter as it existed fractions of a second after the big bang and to find the smallest particle possible – but the question is, how could they ever know? Now they pretend to be a little bit upset because they could not find any particles beyond the standard model, that is, something they would not expect. To achieve that, particle physicists would like to build an even larger “Future Circular Collider” (FCC) near Geneva, where CERN enjoys extraterritorial status, with a ring of 100 km – for about 24 billion euros.

Experts point out that this research could be as limitless as the universe itself. The UK’s former Chief Scientific Advisor, Prof Sir David King, told the BBC: “We have to draw a line somewhere otherwise we end up with a collider that is so large that it goes around the equator. And if it doesn’t end there perhaps there will be a request for one that goes to the Moon and back.”

“There is always going to be more deep physics to be conducted with larger and larger colliders. My question is to what extent will the knowledge that we already have be extended to benefit humanity?”

There have been broad discussions about whether high energy nuclear experiments could pose an existential risk sooner or later, for example by producing micro black holes (mBH) or strange matter (strangelets) that could convert ordinary matter into strange matter and that eventually could start an infinite chain reaction from the moment it was stable – theoretically at a mass of around 1000 protons.

CERN has argued that micro black holes could possibly be produced, but that they would not be stable and would evaporate immediately due to “Hawking radiation”, a theoretical process that has never been observed.

Furthermore, CERN argues that similar high energy particle collisions occur naturally in the universe and in the Earth’s atmosphere, so they could not be dangerous. However, such natural high energy collisions are rare and they have only been measured rather indirectly. Basically, nature does not set up LHC experiments: for example, the density of such artificial particle collisions never occurs in Earth’s atmosphere. Even if the cosmic ray argument were legitimate, CERN produces as many high energy collisions in an artificially narrow space as occur naturally in more than a hundred thousand years in the atmosphere. Physicists look quite puzzled when they recalculate it.

Others argue that a particle collider ring would have to be bigger than the Earth to be dangerous.

A study on “Methodological Challenges for Risks with Low Probabilities and High Stakes” was provided by Lifeboat member Prof Raffaela Hillerbrand et al. Prof Eric Johnson submitted a paper discussing juridical difficulties (lawsuits were either unsuccessful or not accepted) but also the problem of groupthink within scientific communities. Further important contributions to the existential risk debate came from risk assessment experts Wolfgang Kromp and Mark Leggett, from R. Plaga, Eric Penrose, Walter Wagner, Otto Roessler, James Blodgett, Tom Kerwick and many more.

Since these discussions can become very sophisticated, there is also a more general approach (see video): According to present research, there are around 10 billion Earth-like planets in our galaxy, the Milky Way, alone. Intelligent life might send radio waves, because they are extremely long lasting, though we have not received any (“Fermi paradox”). Theory postulates that there could be a “great filter”, something that wipes out intelligent civilizations at a rather early stage of their technical development. Let that sink in.

All technical civilizations would start to build particle smashers to find out how the universe works, to get as close as possible to the big bang and to hunt for the smallest particle with bigger and bigger machines. But maybe there is a very unexpected effect lurking at a certain threshold that nobody would ever think of and that theory does not predict. Indeed, this could be a logical candidate for the “great filter”, an explanation for the Fermi paradox. If it were, a disastrous big bang machine might not be that big at all, because if civilizations had to construct a collider of epic dimensions, a lack of resources would have stopped them in most cases.

Finally, the CERN member states will have to decide on the budget and the future course.

The political question behind it is: how far are the ordinary citizens who pay for it willing to go?

LHC-Critique / LHC-Kritik

Network to discuss the risks at experimental subnuclear particle accelerators

LHC-Critique[at]gmx.com

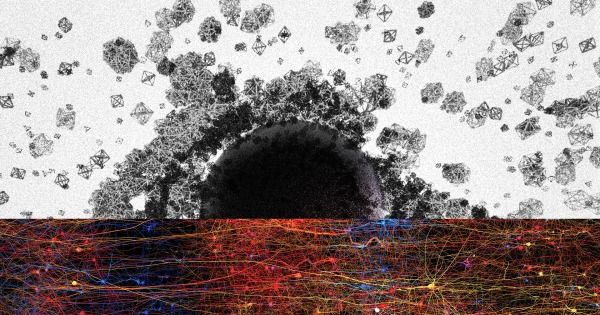

Neuroscientists have used a classic branch of maths in a totally new way to peer into the structure of our brains. What they’ve discovered is that the brain is full of multi-dimensional geometrical structures operating in as many as 11 dimensions.

We’re used to thinking of the world from a 3D perspective, so this may sound a bit tricky, but the results of this new study could be the next major step in understanding the fabric of the human brain – the most complex structure we know of.

This latest brain model was produced by a team of researchers from the Blue Brain Project, a Swiss research initiative devoted to building a supercomputer-powered reconstruction of the human brain.

The Cray-1 supercomputer, the world’s fastest back in the 1970s, does not look like a supercomputer. It looks like a mod version of that carnival ride The Round Up, the one where you stand, strapped in, as it dizzies you up. It’s surrounded by a padded bench that conceals its power supplies, like a cake donut, if the hole was capable of providing insights about nuclear weapons.

After Seymour Cray first built this computer, he gave Los Alamos National Laboratory a six-month free trial. But during that half-year, a funny thing happened: The computer experienced 152 unattributable memory errors. Later, researchers would learn that cosmic-ray neutrons can slam into processor parts, corrupting their data. The higher you are, and the bigger your computers, the more significant a problem this is. And Los Alamos—7,300 feet up and home to some of the world’s swankiest processors—is a prime target.

The world has changed a lot since then, and so have computers. But space has not. And so Los Alamos has had to adapt—having its engineers account for space particles in its hard- and software. “This is not really a problem we’re having,” explains Nathan DeBardeleben of the High Performance Computing Design group. “It’s a problem we’re keeping at bay.”

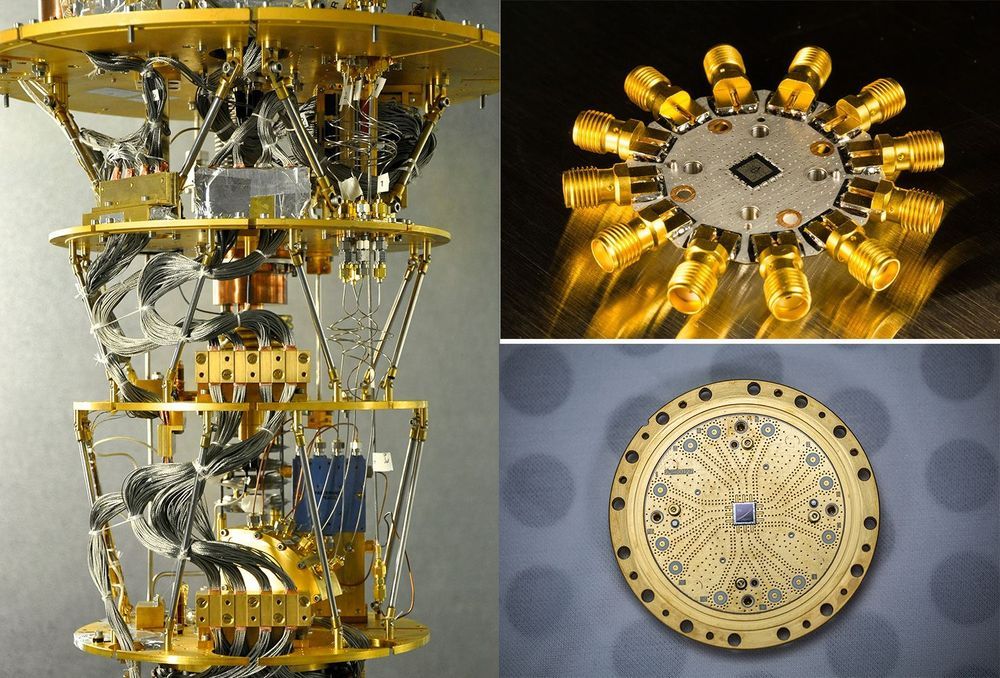

The new National Quantum Initiative Act will give America a national masterplan for advancing quantum technologies.

The news: The US president just signed into law a bill that commits the government to providing $1.2 billion to fund activities promoting quantum information science over an initial five-year period. The new law, which was signed just as a partial US government shutdown began, will provide a significant boost to research, and to efforts to develop a future quantum workforce in the country.

The background: Quantum computers leverage exotic phenomena from quantum physics to produce exponential leaps in computing power. The hope is that these machines will ultimately be able to outstrip even the most powerful classical supercomputers. Those same quantum phenomena can also be tapped to create highly secure communications networks and other advances.