Step inside the portal and everything is white, calm, silent: this is where researchers are helping craft the future of virtual reality. I speak out loud, and my voice echoes around the empty space. In place of the clutter on the outside, each panel is unadorned, save for a series of small black spots: cameras recording your every move. There are 480 VGA cameras and 30 HD cameras, as well as 10 RGB-D depth sensors borrowed from Xbox gaming consoles. The massive collection of recording apparatus is synchronized, and its collective output is combined into a single digital file. One minute of recording amounts to 600GB of data.

The hundreds of cameras record people talking, bartering, and playing games. Imagine the motion-capture systems used by Hollywood filmmakers, but on steroids. The footage captures a stunningly accurate three-dimensional representation of people’s bodies in motion, from the bend in an elbow to a wrinkle in a brow. The lab is trying to map the language of our bodies, the signals and social cues we send one another with our hands, posture, and gaze. It is building a database that aims to decipher the constant, unspoken communication we all use without thinking, what the early-20th-century anthropologist Edward Sapir once called an “elaborate code that is written nowhere, known to no one, and understood by all.”

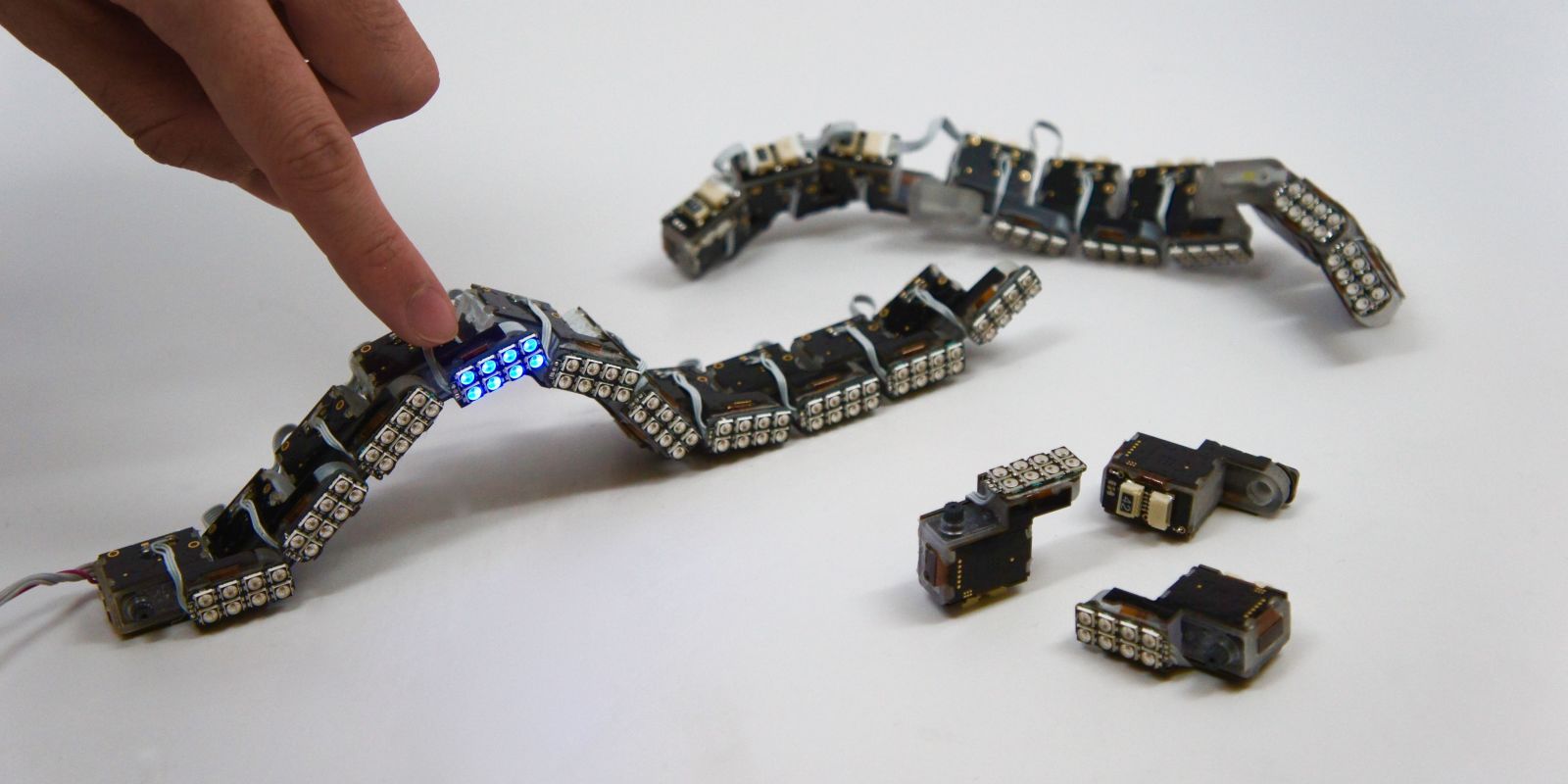

The original goal of the Panoptic Studio was to use this understanding of body language to improve the way robots relate to human beings, to make them more natural partners at work or in play. But the research being done here has recently found another purpose. What works for making robots more lifelike and social could also be applied to virtual characters. That’s why this basement lab caught the attention of one of the biggest players in virtual reality: Facebook. In April 2015, the Silicon Valley giant hired Yaser Sheikh, an associate professor at Carnegie Mellon and director of the Panoptic Studio, to assist in research to improve social interaction in VR.
