On why AI must be able to compose these models dynamically to generate combinatorial representations. Dr. Jonathan Mugan is a principal scientist at DeUmbra and is the author of The Curiosity Cycle.

Deep learning, a category of algorithms that has proved it can mimic human skills simply by learning from examples, represents the next era of machine learning. Machine learning algorithms are written by programmers, but the algorithms themselves are responsible for learning from data, and decisions are then made based on that data.

Some AI experts say there will be a shift in AI trends. For instance, the late 1990s and early 2000s saw the rise of machine learning, neural networks gained popularity in the early 2010s, and reinforcement learning has come to prominence more recently.

These are just a few of the shifts we have experienced over the past years.

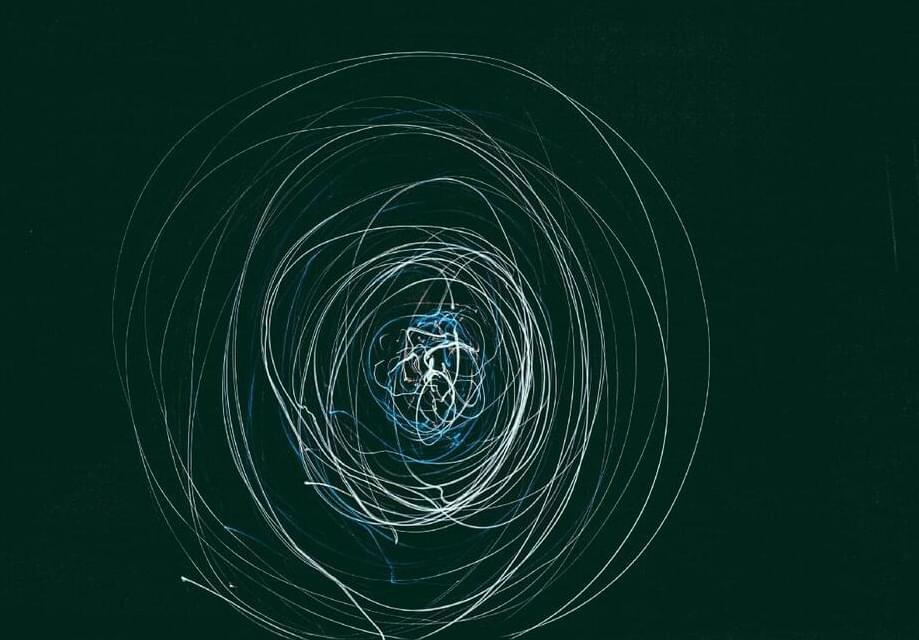

Australian researchers have developed disruptive technology allowing autonomous vehicles to track running pedestrians hidden behind buildings, and cyclists obscured by larger cars, trucks, and buses.

The autonomous vehicle uses game-changing technology that allows it to “see” the world around it, including X-ray-style vision that penetrates through to pedestrians in blind spots and detects cyclists obscured by fast-moving vehicles.

The project, funded by the iMOVE Cooperative Research Centre and carried out in collaboration with the University of Sydney’s Australian Centre for Field Robotics and Australian connected-vehicle solutions company Cohda Wireless, has just released its findings in a final report following three years of research and development.

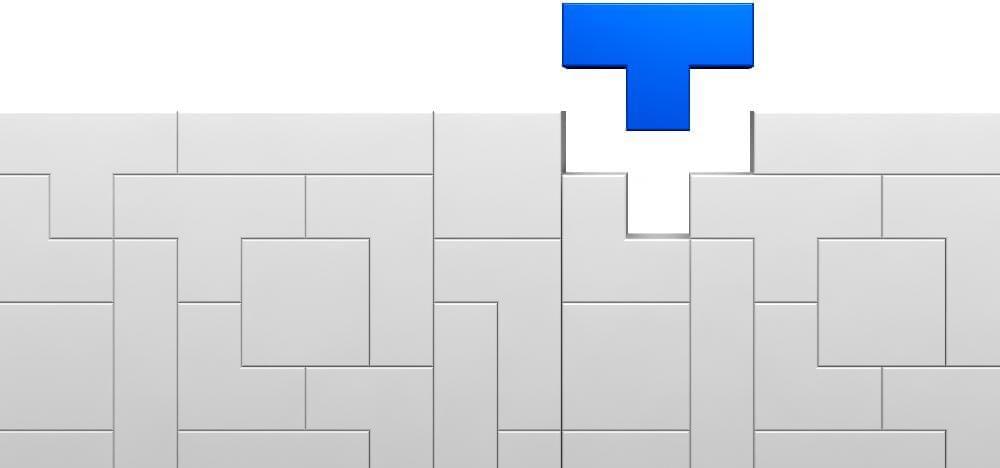

The big question that may be on many viewers’ minds is whether the robots are truly navigating the course on their own—making real-time decisions about how high to jump or how far to extend a foot—or if they’re pre-programmed to execute each motion according to a detailed map of the course.

As engineers explain in a second new video and accompanying blog post, it’s a combination of both.

Atlas is equipped with RGB cameras and depth sensors to give it “vision,” providing input to its control system, which is run on three computers. In the dance video linked above and previous videos of Atlas doing parkour, the robot wasn’t sensing its environment and adapting its movements accordingly (though it did make in-the-moment adjustments to keep its balance).

DeepMind, part of Google, has announced a general AI breakthrough: an AI that truly learns. First, the team built XLand, a dynamic, game-like environment that can change its own layout. Then they combined deep learning and reinforcement learning (deep reinforcement learning) to create an AI that learns without being given human training data or instructions about what it is doing. The AI played 700,000 games in 4,000 unique worlds, performing 200 billion training steps across 3.4 million unique tasks that were never explicitly taught or programmed.

The technology aims to reduce cost and power consumption for deep learning inference use cases, the companies said. This development follows NeuReality’s emergence from stealth earlier in February with an $8 million seed round to accelerate AI workloads at scale.

AI inference is a growing area of focus for enterprises, because it is the stage where trained neural networks are actually applied in real applications and produce results. IBM and NeuReality claim their partnership will allow the deployment of computer vision, recommendation systems, natural language processing, and other AI use cases in critical sectors such as finance, insurance, healthcare, manufacturing, and smart cities. They also claim the agreement will accelerate deployment of today’s ever-growing range of AI use cases, many of which already run in public and private cloud datacenters.

NeuReality faces competition from Cast AI, a technology company offering a platform that “allows developers to deploy, manage, and cost-optimize applications in multiple clouds simultaneously.” Other competitors include Comet.ml, Upright Project, OctoML, Deci, and DeepCube. However, through this partnership with IBM, NeuReality will become the first start-up semiconductor product member of the IBM Research AI Hardware Center and a licensee of the Center’s low-precision, high-performance Digital AI Cores.