To check out any of the lectures available from The Great Courses Plus, go to http://ow.ly/dweH302dILJ

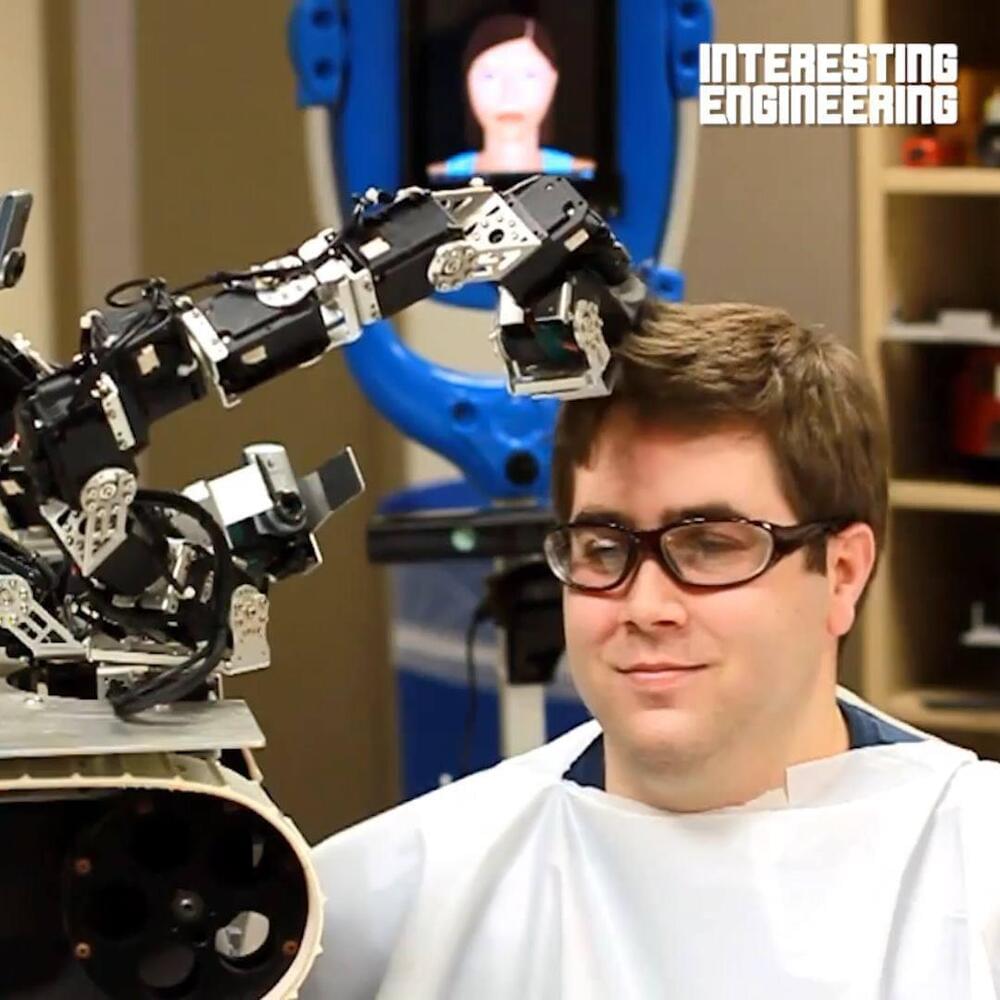

We’ll soon be capable of building self-replicating robots. This will not only change humanity’s future but also reshape the galaxy as we know it.

Get your own Space Time T-shirt at http://bit.ly/1QlzoBi

Tweet at us! @pbsspacetime.

Facebook: facebook.com/pbsspacetime

Email us! pbsspacetime [at] gmail [dot] com.

Comment on Reddit: http://www.reddit.com/r/pbsspacetime

Support us on Patreon! http://www.patreon.com/pbsspacetime

Help translate our videos! http://www.youtube.com/timedtext_cs_panel?tab=2&c=UC7_gcs09iThXybpVgjHZ_7g

Previous Episode: Is There a 5th Fundamental Force?

https://www.youtube.com/watch?v=MuvwcsfXIIo

Should We Build a Dyson Sphere?

https://www.youtube.com/watch?v=jW55cViXu6s