Imagine this: a driverless car cruises around in search of passengers.

After dropping someone off, the car uses its profits for a trip to a charging station. Apart from its initial programming, the car doesn’t need outside help to determine how to carry out its mission.

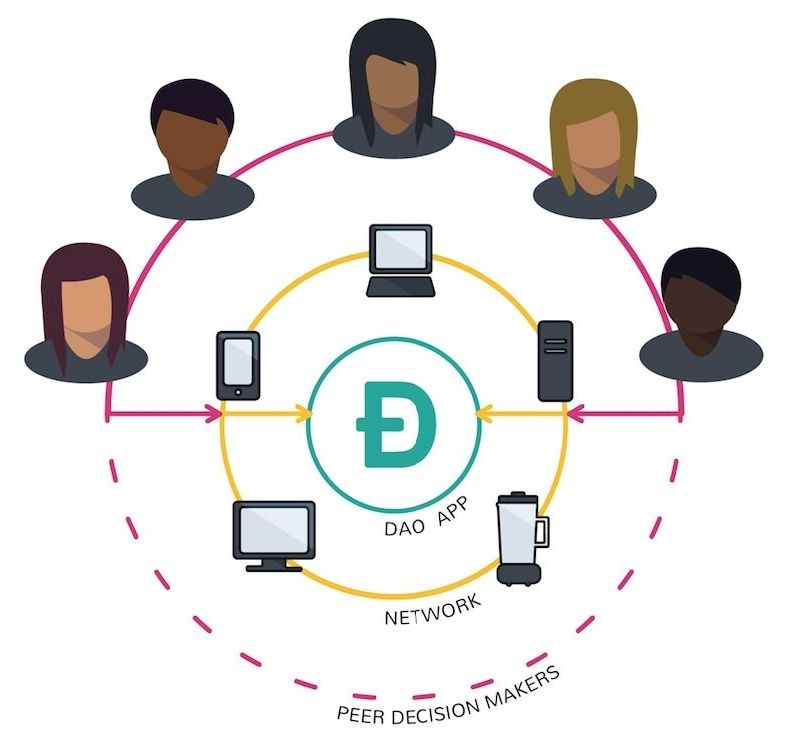

That’s one “thought experiment” from former bitcoin contributor Mike Hearn, in which he describes how bitcoin could help power leaderless organizations 30 or so years into the future.