https://www.youtube.com/watch?v=ytva8DDV_Ic

What Is Big Data? & How Big Data Is Changing The World! https://www.facebook.com/singularityprosperity/videos/439181406563439/

In this video, we’ll be discussing big data: more specifically, what big data is, the exponential rate at which data is growing, how we can utilize the vast quantities of data being generated, and the implications of linked data for big data.

[0:30–7:50]: Starting off, we’ll look at how data has been used as a tool since the origins of human evolution, from the hunter-gatherer age up to the present information age. Afterwards, we’ll look at many statistics demonstrating the exponential growth of data so far and its projected future growth.

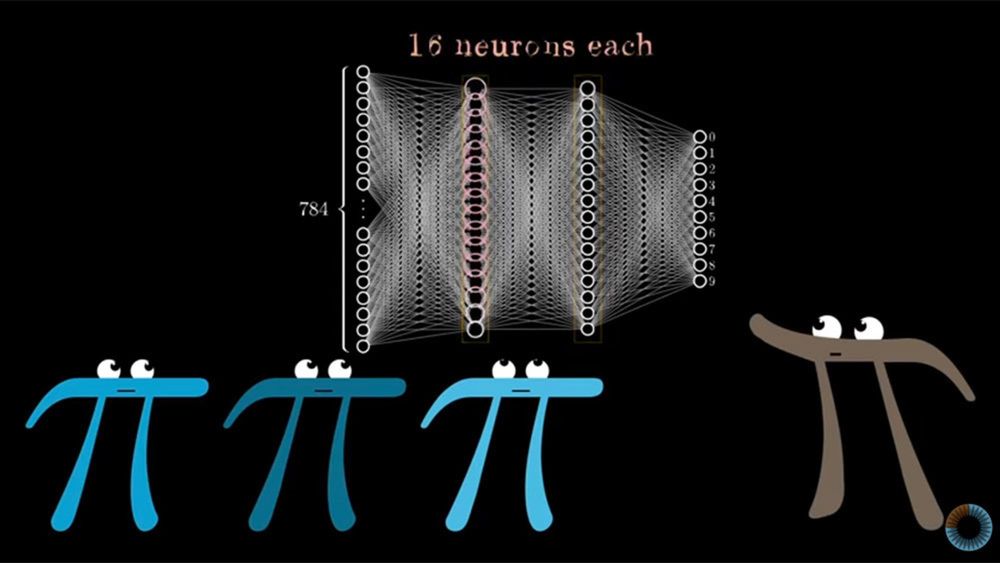

[7:50–18:55]: Following that, we’ll discuss what exactly big data is, delving deeper into the types of data (structured and unstructured) and how they will be analyzed by both humans and machine learning (AI).
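To make the structured/unstructured distinction concrete, here is a minimal Python sketch. It is not taken from the video, which contains no code. The sample CSV rows, review text, stopword list, and function names are all hypothetical and exist only for illustration. Structured data follows a fixed schema, so a question can be answered directly by grouping on named fields. Unstructured text has no schema, so some structure has to be extracted from it first. The sketch does that with a simple word count, which is a stand-in for the far richer machine-learning analysis the video discusses, not an example of it.

```python
import csv
import io
import re
from collections import Counter

# Hypothetical structured data: every row shares the same columns (a schema).
STRUCTURED_CSV = """user_id,country,purchases
1,CA,3
2,US,5
3,CA,2
"""

# Hypothetical unstructured data: free text with no schema at all.
UNSTRUCTURED_TEXT = (
    "Loved the product! Shipping was slow, but the product itself is great. "
    "Would buy the product again."
)

# Hypothetical, deliberately tiny stopword list used only for this example.
STOPWORDS = frozenset({"the", "was", "but", "is", "itself", "would"})


def total_purchases_by_country(csv_text: str) -> dict[str, int]:
    """Structured analysis: sum the 'purchases' column for each 'country'."""
    totals: dict[str, int] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        country = row["country"]
        totals[country] = totals.get(country, 0) + int(row["purchases"])
    return totals


def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Unstructured analysis: impose structure (word counts) on raw text first."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    # Ties are returned in the order the words were first encountered.
    return counts.most_common(n)


if __name__ == "__main__":
    print(total_purchases_by_country(STRUCTURED_CSV))  # {'CA': 5, 'US': 5}
    print(top_words(UNSTRUCTURED_TEXT))
```

The structured query needs only the column names. The unstructured one only works after an extraction step has been chosen (here, tokenizing and dropping stopwords), and that choice shapes the result.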