Robot vending machines designed specifically for pizza are the future we need right now.

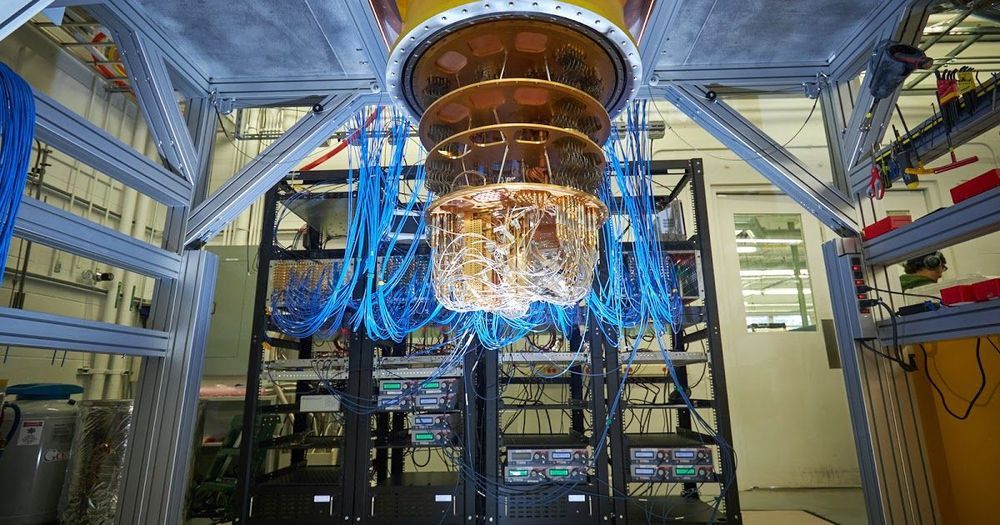

Accurate computational prediction of chemical processes from the quantum mechanical laws that govern them is a tool that can unlock new frontiers in chemistry, improving a wide variety of industries. Unfortunately, the exact solution of quantum chemical equations for all but the smallest systems remains out of reach for modern classical computers, due to the exponential scaling in the number and statistics of quantum variables. However, by using a quantum computer, which by its very nature takes advantage of unique quantum mechanical properties to handle calculations intractable to its classical counterpart, simulations of complex chemical processes can be achieved. While today’s quantum computers are powerful enough for a clear computational advantage at some tasks, it is an open question whether such devices can be used to accelerate our current quantum chemistry simulation techniques.

In “Hartree-Fock on a Superconducting Qubit Quantum Computer”, appearing today in Science, the Google AI Quantum team explores this complex question by running the largest chemical simulation performed on a quantum computer to date. In our experiment, we used a noise-robust variational quantum eigensolver (VQE) to directly simulate a chemical mechanism via a quantum algorithm. Though the calculation focused on the Hartree-Fock approximation of a real chemical system, it was twice as large as previous chemistry calculations on a quantum computer, and contained ten times as many quantum gate operations. Importantly, we validate that algorithms being developed for currently available quantum computers can achieve the precision required for experimental predictions, revealing pathways towards realistic simulations of quantum chemical systems.
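To give a feel for the variational idea behind a VQE, here is a minimal classical sketch in Python. It is not the experiment's circuit or its Hartree-Fock procedure: the 2x2 Hamiltonian `H`, the one-parameter trial state, and the grid-search optimizer are all hypothetical choices made for illustration. On real hardware the energy would be estimated from repeated measurements rather than computed exactly as it is here.

```python
import numpy as np

# Hypothetical 2x2 real symmetric Hamiltonian, chosen only for illustration.
H = np.array([[1.0, 0.5],
              [0.5, -1.0]])


def trial_state(theta):
    """One-parameter real trial state cos(theta)|0> + sin(theta)|1> (always normalized)."""
    return np.array([np.cos(theta), np.sin(theta)])


def energy(theta):
    """Expectation value <psi|H|psi> of the trial state (computed exactly here)."""
    psi = trial_state(theta)
    return float(psi @ H @ psi)


def minimize_energy(n_grid=2001):
    """Classical outer loop: a simple grid search over theta in [0, pi)."""
    thetas = np.linspace(0.0, np.pi, n_grid, endpoint=False)
    energies = np.array([energy(t) for t in thetas])
    best = int(np.argmin(energies))
    return float(thetas[best]), float(energies[best])


theta_opt, e_var = minimize_energy()

# Reference value: the smallest eigenvalue from exact diagonalization.
e_exact = float(np.linalg.eigvalsh(H)[0])

print(f"variational energy: {e_var:.6f}")
print(f"exact ground state: {e_exact:.6f}")
```

By the variational principle, the energy of any normalized trial state is an upper bound on the true ground-state energy, so the optimizer can only approach the exact value from above. In this toy case the trial family happens to contain the exact ground state, which is why the two printed numbers agree closely; for realistic molecules that is generally not guaranteed.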

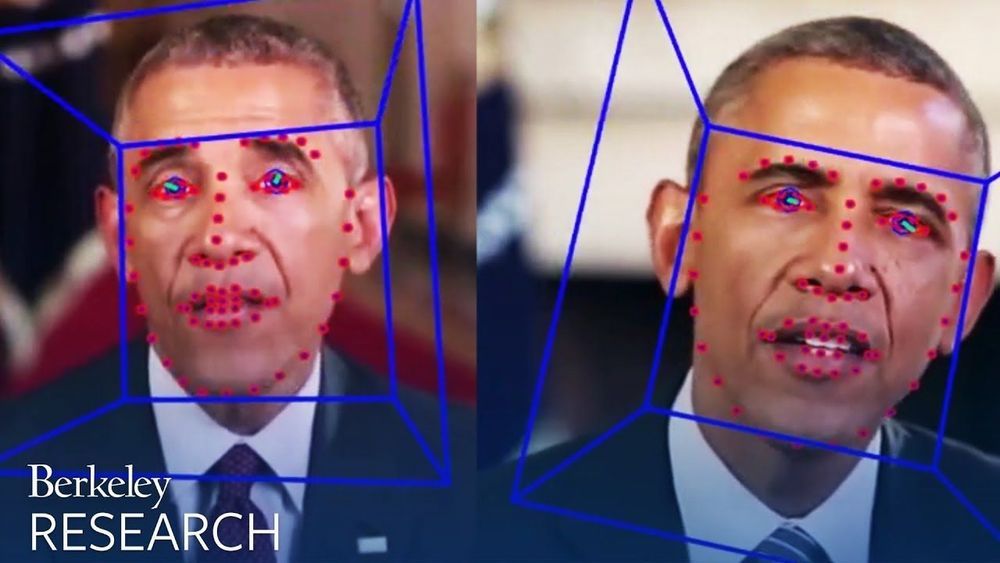

An Artificial Intelligence (AI) produced deepfake video could show Donald Trump saying or doing something extremely outrageous and inflammatory. Just imagine that! Crazy, I know, but some people might find it believable, and in a worst-case scenario it might sway an election, trigger violence in the streets, or spark an international armed conflict.

WHY THIS MATTERS IN BRIEF We are now locked in a war as nefarious actors find new ways to weaponise deepfakes and fake news, and defenders try to figure out how to discover and flag them.

YouTube says it took down a record number of videos in the second quarter of this year due to an increased use of AI in its content review efforts.

In total, 10.85 million of the 11.4 million videos removed from the platform between April and June were flagged by automated systems, according to YouTube’s latest Community Guidelines Enforcement Report.

AI played an even bigger role in the removal of user comments. Of the 2.1 million comments taken down, 99.2% were detected by automated systems.

For Dr. Cecily Morrison, research into how AI can help people who are blind or visually disabled is deeply personal. It’s not only that the Microsoft Principal Researcher has a 7-year-old son who is blind; she also believes that the powerful AI-related technologies that will help people must themselves be personal, tailored to the circumstances and abilities of the people they support.

“We will see new AI techniques that will enable users to personalize experiences for themselves,” says Dr. Morrison, who is based at Microsoft Research Cambridge and whose work is centered on human-computer interaction and artificial intelligence. “Everyone is different. Having a disability label does not mean a person has the same needs as another with the same label. New techniques will allow people to teach AI technologies about their information needs with just a few examples in order to get a personalized experience suited to their particular needs. Tech will become about personal needs rather than disability labels.”

🤔 “The White House today detailed the establishment of 12 new research institutes focused on AI and quantum information science. Agencies including the National Science Foundation (NSF), U.S. Department of Homeland Security, and U.S. Department of Energy (DOE) have committed to investing tens of millions of dollars in centers intended to serve as nodes for AI and quantum computing study.

Laments over the AI talent shortage in the U.S. have become a familiar refrain. While higher education enrollment in AI-relevant fields like computer science has risen rapidly in recent years, few colleges have been able to meet student demand due to a lack of staffing. In June, the Trump administration imposed a ban on U.S. entry for workers on certain visas — including for high-skilled H-1B visa holders, an estimated 35% of whom have an AI-related degree — through the end of the year. And Trump has toyed with the idea of suspending the Optional Practical Training program, which allows international students to work for up to three years in the U.S.”

The White House announced the creation of AI and quantum research institutes funded by billions in venture and taxpayer dollars.

As a child, you develop a sense of what “fairness” means. It’s a concept that you learn early on as you come to terms with the world around you. Something either feels fair or it doesn’t.

But increasingly, algorithms have begun to arbitrate fairness for us. They decide who sees housing ads, who gets hired or fired, and even who gets sent to jail. Consequently, the people who create them—software engineers—are being asked to articulate what it means to be fair in their code. This is why regulators around the world are now grappling with a question: How can you mathematically quantify fairness?

This story attempts to offer an answer. And to do so, we need your help. We’re going to walk through a real algorithm, one used to decide who gets sent to jail, and ask you to tweak its various parameters to make its outcomes more fair. (Don’t worry—this won’t involve looking at code!)

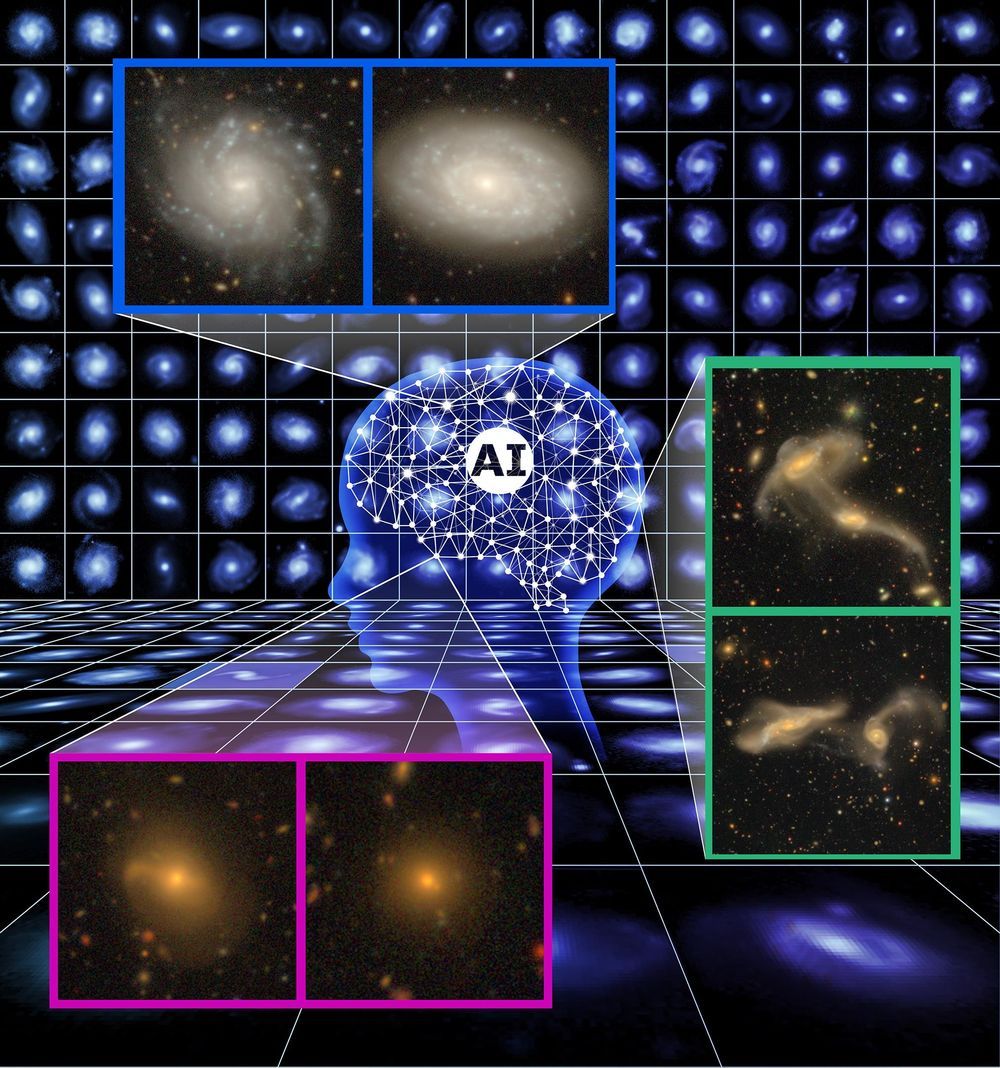

Astronomers have applied artificial intelligence (AI) to ultra-wide field-of-view images of the distant Universe captured by the Subaru Telescope, and have achieved a very high accuracy for finding and classifying spiral galaxies in those images. This technique, in combination with citizen science, is expected to yield further discoveries in the future.

A research group, consisting of astronomers mainly from the National Astronomical Observatory of Japan (NAOJ), applied a deep-learning technique, a type of AI, to classify galaxies in a large dataset of images obtained with the Subaru Telescope. Thanks to the telescope's high sensitivity, as many as 560,000 galaxies have been detected in the images. Classifying the morphology of this many galaxies one by one by eye would be extremely difficult. The AI enabled the team to perform the processing without human intervention.