Circa 2018

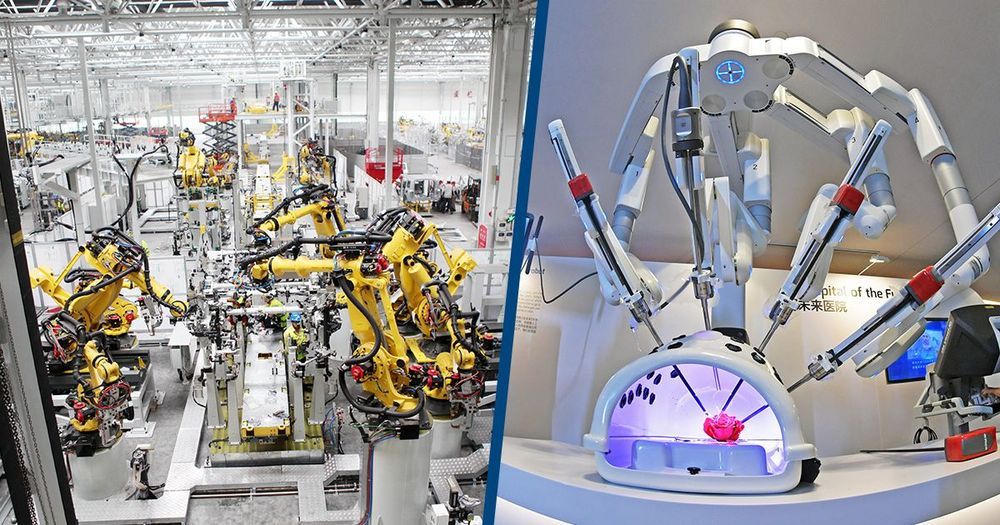

After 12 years of work, researchers at the University of Manchester in England have completed construction of the “SpiNNaker” (Spiking Neural Network Architecture) supercomputer. It can simulate the internal workings of up to a billion neurons using a whopping one million processing units.

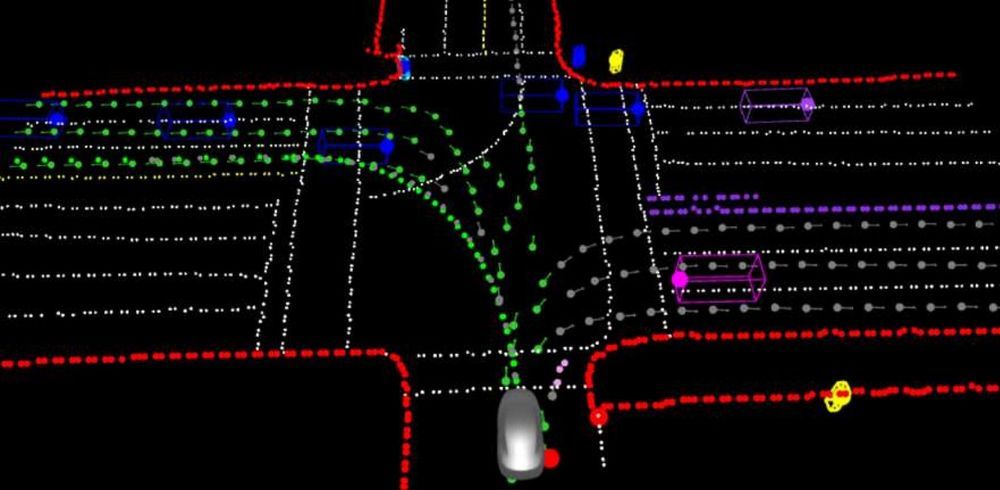

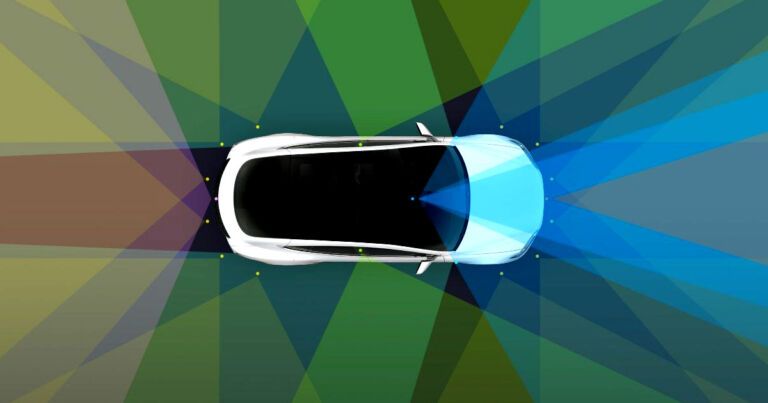

The human brain contains approximately 100 billion neurons, exchanging signals through hundreds of trillions of synapses. These numbers are imposing, but a digital brain simulation needs more than raw processing power: it requires a radical rethinking of the standard architecture on which most computers are built.

“Neurons in the brain typically have several thousand inputs; some up to quarter of a million,” Prof. Stephen Furber, who conceived and led the SpiNNaker project, told us. “So the issue is communication, not computation. High-performance computers are good at sending large chunks of data from one place to another very fast, but what neural modeling requires is sending very small chunks of data (representing a single spike) from one place to many others, which is quite a different communication model.”
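To make the communication pattern Furber describes more concrete, here is a minimal Python sketch of one-to-many spike delivery. It is an illustration only, not SpiNNaker's actual routing code: the function names (`build_fanout`, `deliver_spike`), the toy network, and the choice to have a spike message carry nothing but the ID of the neuron that fired are all assumptions made for this example.

```python
from collections import defaultdict


def build_fanout(synapses):
    """Map each source neuron ID to the list of neurons it feeds."""
    fanout = defaultdict(list)
    for source, target in synapses:
        fanout[source].append(target)
    return fanout


def deliver_spike(source, fanout, inbox):
    """Send one spike from `source` to every neuron it connects to.

    The message is tiny (just the source ID, an assumption of this
    sketch); the cost lies in how many destinations it must reach.
    """
    targets = fanout.get(source, [])
    for target in targets:
        inbox[target].append(source)
    return len(targets)


# Hypothetical toy network: neuron 0 feeds neurons 1-4, neuron 1 feeds neuron 2.
synapses = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2)]
fanout = build_fanout(synapses)

inbox = defaultdict(list)  # spikes received by each neuron
delivered = deliver_spike(0, fanout, inbox)
print(f"spike from neuron 0 reached {delivered} targets")
```

In a real brain the fan-out per neuron is in the thousands rather than four, which is why the number of small deliveries, not the arithmetic done at each neuron, dominates the workload.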