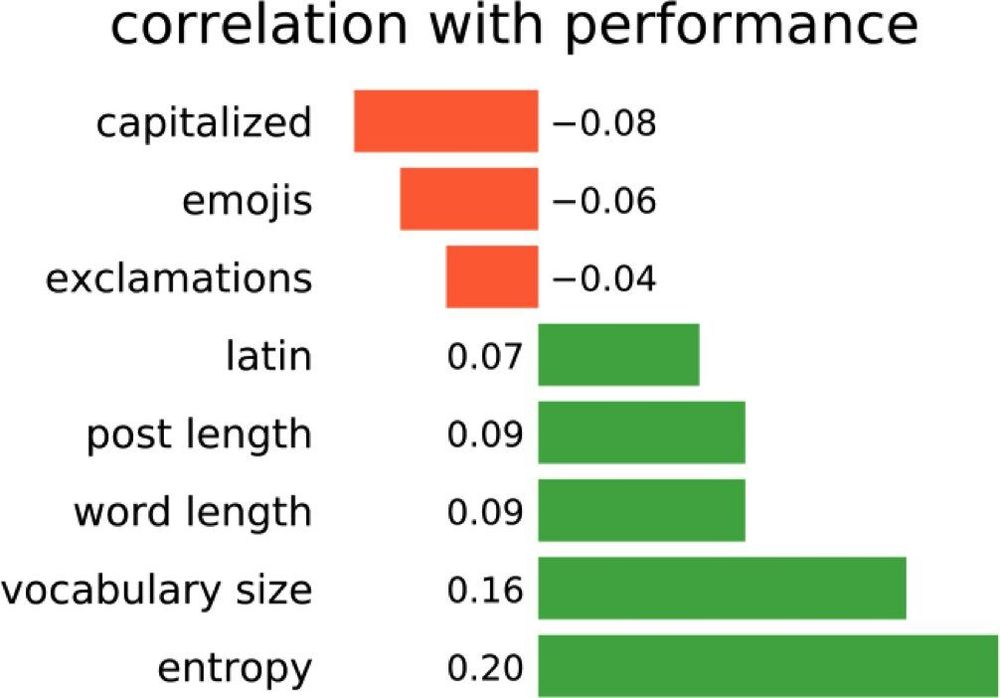

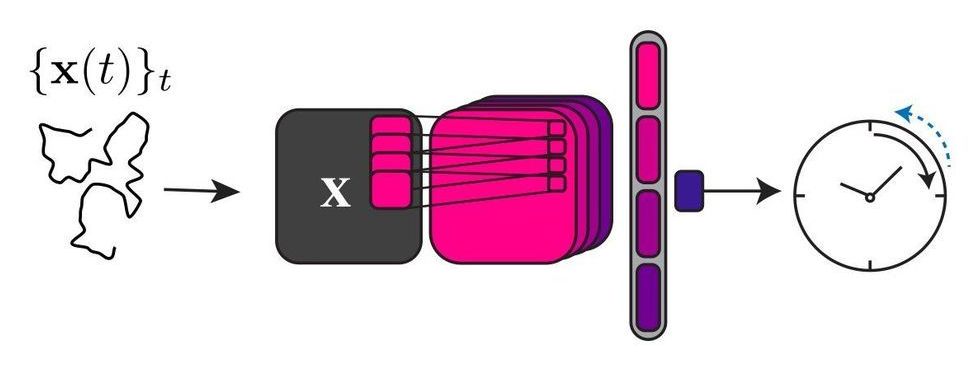

Ivan Smirnov, Leading Research Fellow of the Laboratory of Computational Social Sciences at the Institute of Education of HSE University, has created a computer model that can distinguish high academic achievers from lower achievers based on their social media posts. The prediction model relies on mathematical text analysis that captures users' vocabulary (its range and the semantic fields from which concepts are drawn), the characters and symbols they use, post length, and word length.

Every word has its own rating (a kind of IQ). Scientific and cultural topics, English words, and longer words and posts rank highly and serve as indicators of good academic performance. An abundance of emojis, words or whole phrases written in capital letters, and vocabulary related to horoscopes, driving, and military service indicate lower grades in school. Posts do not need to be long for this to work: even tweets are quite informative. The study was supported by a grant from the Russian Science Foundation (RSF), and an article detailing the study's results was published in EPJ Data Science.
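To make the kinds of signals described above more concrete, here is a minimal Python sketch of how such features might be extracted from a single post. It is not the model from the study: the `WORD_RATINGS` values, the `post_features` function, and the rough emoji code-point range are all assumptions made up for illustration, and averaging per-word ratings is just one simple way to combine them.

```python
import re

# Hypothetical per-word ratings, invented for illustration only;
# the study's actual ratings are not reproduced here.
WORD_RATINGS = {
    "physics": 0.9,
    "literature": 0.8,
    "horoscope": -0.7,
    "army": -0.5,
}

# Runs of Unicode letters (no digits, underscores, or symbols).
WORD_RE = re.compile(r"[^\W\d_]+")


def post_features(text):
    """Return a few simple text features for one post."""
    words = WORD_RE.findall(text)
    n_words = len(words)

    # Ratings for the words that appear in the (hypothetical) dictionary.
    rated = [WORD_RATINGS[w.lower()] for w in words if w.lower() in WORD_RATINGS]

    # Words written entirely in capitals (single letters such as "I" are skipped).
    caps = [w for w in words if len(w) > 1 and w.isupper()]

    # Rough emoji count: an assumed code-point range that covers many,
    # but not all, emoji.
    emoji_count = sum(1 for ch in text if 0x1F300 <= ord(ch) <= 0x1FAFF)

    return {
        "post_length": len(text),
        "mean_word_length": sum(len(w) for w in words) / n_words if n_words else 0.0,
        "caps_share": len(caps) / n_words if n_words else 0.0,
        "emoji_count": emoji_count,
        "mean_word_rating": sum(rated) / len(rated) if rated else 0.0,
    }


if __name__ == "__main__":
    print(post_features("I love PHYSICS and literature"))
```

In a setup like this, the resulting feature values would be passed to a separately trained predictive model; that step is left out here because the text above does not describe it.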

International studies have long shown that users' social media behavior (their posts, comments, likes, profile features, profile pictures, and photos) can be used to paint a comprehensive portrait of them. A person's social media behavior can be analyzed to determine their lifestyle, personal qualities, individual characteristics, and even their mental health status. It is also very easy to determine a person's socio-demographic characteristics, including their age, gender, race, and income. This is where profile pictures, Twitter hashtags, and Facebook posts come in.