Inspired by the efficient foraging behavior of a single-celled amoeba, researchers at Hokkaido University and Amoeba Energy in Japan have developed an analog computer that quickly finds reliable solutions to the traveling salesman problem, a representative combinatorial optimization problem.

Many real-world tasks, such as planning and scheduling in logistics and automation, are mathematically formulated as combinatorial optimization problems. Conventional digital computers, including supercomputers, cannot solve these complex problems in a practical amount of time, because the number of candidate solutions they need to evaluate increases exponentially with the problem size, a phenomenon known as combinatorial explosion. For this reason, new computers called Ising machines, including quantum annealers, have been actively developed in recent years. These machines, however, require complicated pre-processing to convert each task into a form they can handle, and they risk presenting illegal solutions that violate some of the constraints and requests. Both issues are major obstacles to practical application.
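To give a sense of the scale of combinatorial explosion, the short Python sketch below counts the distinct round trips in a symmetric traveling salesman problem, where a route and its reverse are treated as the same tour. The function name `count_tours` is chosen here for illustration only. The count is (n − 1)!/2, which grows even faster than a plain exponential.

```python
from math import factorial


def count_tours(n_cities: int) -> int:
    """Count distinct round trips through n_cities (symmetric TSP).

    Fixing the starting city leaves (n - 1)! orderings of the remaining
    cities; dividing by 2 merges each route with its reverse.
    """
    if n_cities < 3:
        return 1  # with one or two cities there is only one possible trip
    return factorial(n_cities - 1) // 2


for n in (5, 10, 15, 20):
    print(f"{n:2d} cities -> {count_tours(n):,} candidate tours")
```

Five cities give only 12 candidate tours, but 20 cities already give roughly 6 × 10^16, which is why evaluating every candidate quickly becomes impractical.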

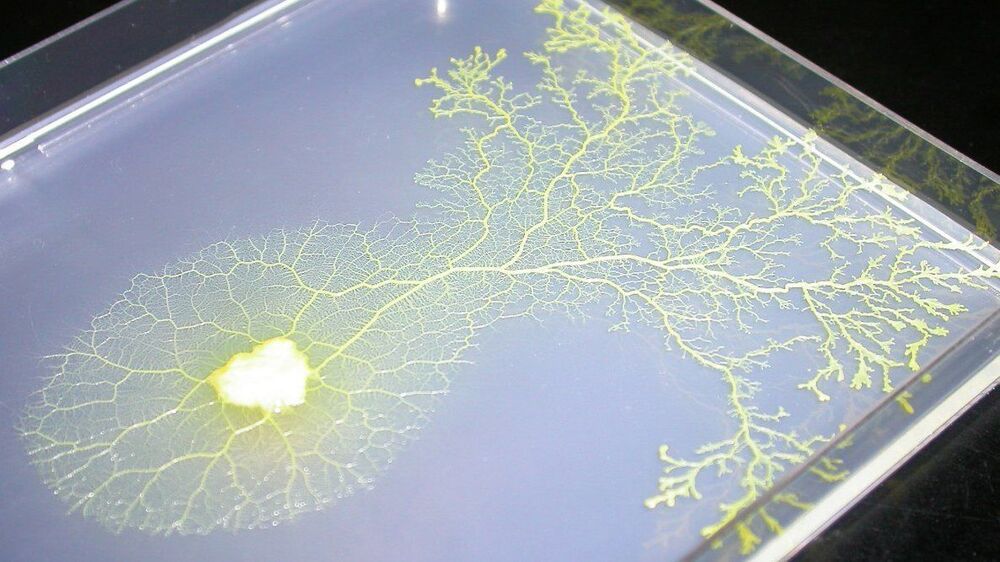

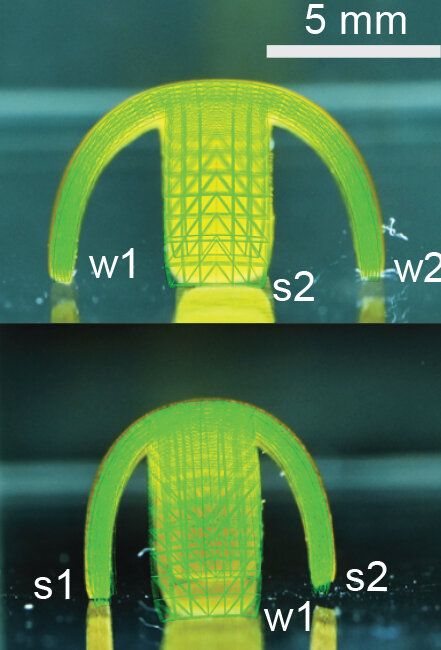

These obstacles can be avoided using the newly developed ‘electronic amoeba,’ an analog computer inspired by a single-celled amoeboid organism. The amoeba is known to maximize nutrient acquisition efficiently by deforming its body. It has been shown to find an approximate solution to the traveling salesman problem (TSP): given a map of a certain number of cities, find the shortest route that visits each city exactly once and returns to the starting city. This finding inspired Professor Seiya Kasai at Hokkaido University to mimic the dynamics of the amoeba electronically using an analog circuit, as described in the journal Scientific Reports. “The amoeba core searches for a solution under the electronic environment where resistance values at intersections of crossbars represent constraints and requests of the TSP,” says Kasai. Because the crossbars encode the problem, the city layout can be changed simply by updating the resistance values, without complicated pre-processing.
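For readers who want the TSP definition in concrete terms, here is a minimal Python sketch that solves a tiny instance by exhaustive search. It is not the electronic amoeba's method, which searches for approximate solutions in analog hardware. It only illustrates the problem statement and the brute-force baseline that becomes impractical as the number of cities grows. The function name `shortest_tour` and the four-city layout are hypothetical.

```python
from itertools import permutations
from math import dist


def shortest_tour(cities):
    """Return (length, route) of the shortest round trip by trying every order.

    cities is a list of (x, y) coordinates. The route starts and ends at
    city 0 and visits every other city exactly once.
    """
    start, *rest = range(len(cities))
    best_len, best_route = float("inf"), None
    for perm in permutations(rest):
        route = (start, *perm, start)
        # Sum the straight-line distances between consecutive stops.
        length = sum(dist(cities[a], cities[b]) for a, b in zip(route, route[1:]))
        if length < best_len:
            best_len, best_route = length, route
    return best_len, best_route


# Hypothetical layout: four cities on the corners of a unit square.
cities = [(0, 0), (0, 1), (1, 1), (1, 0)]
length, route = shortest_tour(cities)
print(length, route)
```

For this square layout the shortest tour simply walks around the perimeter. Changing the city layout here only means editing the coordinate list, loosely analogous to updating the resistance values in the crossbar.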