Researchers have developed a new device able to run neural network computations using 100 times less energy and area than existing CMOS-based hardware.

There are some tasks that traditional robots — the rigid and metallic kind — simply aren’t cut out for. Soft-bodied robots, on the other hand, may be able to interact with people more safely or slip into tight spaces with ease. But for robots to reliably complete their programmed duties, they need to know the whereabouts of all their body parts. That’s a tall order for a soft robot that can deform in a virtually infinite number of ways.

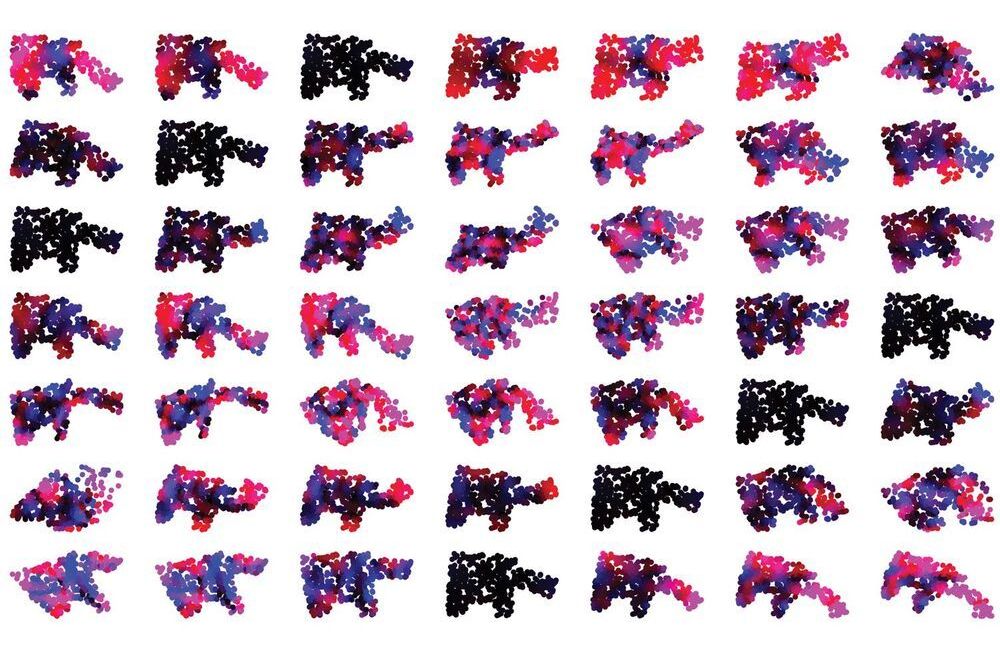

MIT researchers have developed an algorithm to help engineers design soft robots that collect more useful information about their surroundings. The deep-learning algorithm suggests an optimized placement of sensors within the robot’s body, allowing it to better interact with its environment and complete assigned tasks. The advance is a step toward the automation of robot design. “The system not only learns a given task, but also how to best design the robot to solve that task,” says Alexander Amini. “Sensor placement is a very difficult problem to solve. So, having this solution is extremely exciting.”

The research will be presented during April’s IEEE International Conference on Soft Robotics and will be published in the journal IEEE Robotics and Automation Letters. Co-lead authors are Amini and Andrew Spielberg, both PhD students in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). Other co-authors include MIT PhD student Lillian Chin and professors Wojciech Matusik and Daniela Rus.

How recent neuroscience research points the way towards defeating adversarial examples and achieving a more resilient, consistent and flexible form of artificial intelligence.

Welcome back to The TechCrunch Exchange, a weekly startups-and-markets newsletter. It’s broadly based on the daily column that appears on Extra Crunch, but free, and made for your weekend reading.

Earnings season is coming to a close, with public tech companies wrapping up their Q4 and 2020 disclosures. We don’t care too much about the bigger players’ results here at TechCrunch, but smaller tech companies we knew when they were wee startups can provide startup-related data points worth digesting. So, each quarter The Exchange spends time chatting with a host of CEOs and CFOs, trying to figure out what’s going on so that we can relay the information to private companies.

Sometimes it’s useful, as our chat with recent fintech IPO Upstart proved: we got to noodle with the company about the rising acceptance of AI in the conservative banking industry.

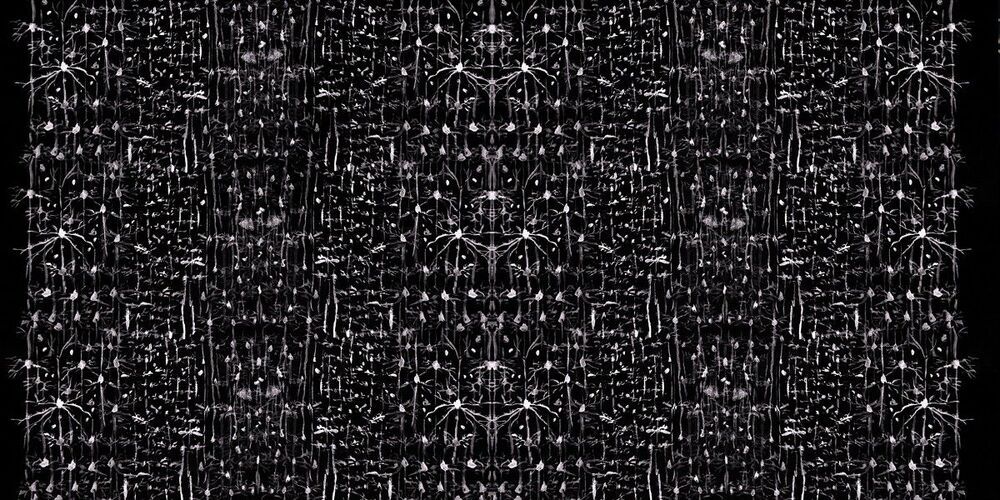

Summary: Using a range of tools from machine learning to graphical models, researchers have discovered a new way to identify cells and explore the mechanisms behind neurodegenerative diseases.

Source: Georgia Institute of Technology

In researching the causes and potential treatments for degenerative conditions such as Alzheimer’s or Parkinson’s disease, neuroscientists frequently struggle to accurately identify cells needed to understand brain activity that gives rise to behavior changes such as declining memory or impaired balance and tremors.

Robots are unable to perform everyday manipulation tasks, such as grasping or rearranging objects, with the same dexterity as humans. But Brazilian scientists have moved this research a step further by developing a new system that uses deep learning algorithms to improve a robot’s ability to independently detect how to grasp an object, known as autonomous robotic grasp detection.

In a paper published Feb. 24 in Robotics and Autonomous Systems, a team of engineers from the University of São Paulo addressed existing problems with the visual perception phase that occurs when a robot grasps an object. They created a model using deep learning neural networks that decreased the time a robot needs to process visual data, perceive an object’s location and successfully grasp it.

Deep learning is a subset of machine learning in which computer algorithms learn from data and improve automatically through experience. Loosely inspired by the structure and function of the human brain, deep learning uses multilayered structures called neural networks to identify patterns and classify different types of information. Deep learning models for vision tasks are often based on convolutional neural networks (CNNs), which specialize in analyzing visual imagery.
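To make the convolution idea concrete, here is a minimal sketch in plain Python. It is our own illustration of a single CNN building block, not the São Paulo team's model. A hand-picked 2x2 kernel slides over a tiny 4x4 image and responds to a vertical edge, and a ReLU activation follows it. The names `conv2d` and `relu` are illustrative. In a real CNN the kernel weights are learned from data, and many such layers are stacked.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution as used in CNN layers (strictly, cross-correlation:
    the kernel is not flipped), with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw image patch under the kernel
            s = sum(image[i + di][j + dj] * kernel[di][dj]
                    for di in range(kh) for dj in range(kw))
            row.append(s)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Elementwise ReLU activation: negative responses are clamped to 0."""
    return [[max(0.0, float(v)) for v in row] for row in x]


# 4x4 grayscale image: dark left half, bright right half (a vertical edge)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge detector; a trained CNN would learn these weights
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
print(feature_map)  # → [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map lights up only in the middle column, where the dark-to-bright edge sits. This is the kind of local pattern detection that deeper CNN layers combine to recognize object shapes and locations.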

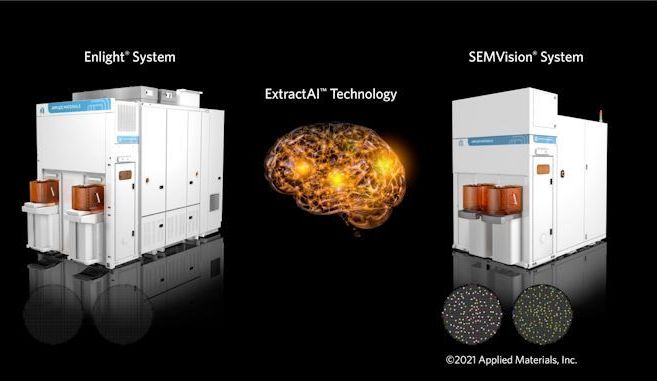

Advanced system-on-chip designs are extremely complex in terms of transistor count and are hard to build using the latest fabrication processes. In a bid to make production of next-generation chips economically feasible, chip fabs need to ensure high yields early in their lifecycle by quickly finding and correcting defects.

But finding and fixing defects is not easy today: traditional optical inspection tools don’t offer sufficiently detailed image resolution, while high-resolution e-beam and multibeam inspection tools are relatively slow. Looking to bridge the gap on inspection cost and time, Applied Materials has been developing a technology called ExtractAI, which combines the company’s latest Enlight optical inspection tool, its SEMVision G7 e-beam review system, and deep learning (AI) to find flaws quickly. Notably, the solution has already been in use for about a year.

“Applied’s new playbook for process control combines Big Data and AI to deliver an intelligent and adaptive solution that accelerates our customers’ time to maximum yield,” said Keith Wells, group vice president and general manager, Imaging and Process Control at Applied Materials. “By combining our best-in-class optical inspection and eBeam review technologies, we have created the industry’s only solution with the intelligence to not only detect and classify yield-critical defects but also learn and adapt to process changes in real-time. This unique capability enables chipmakers to ramp new process nodes faster and maintain high capture rates of yield-critical defects over the lifetime of the process.”