And they say computers can’t create art.

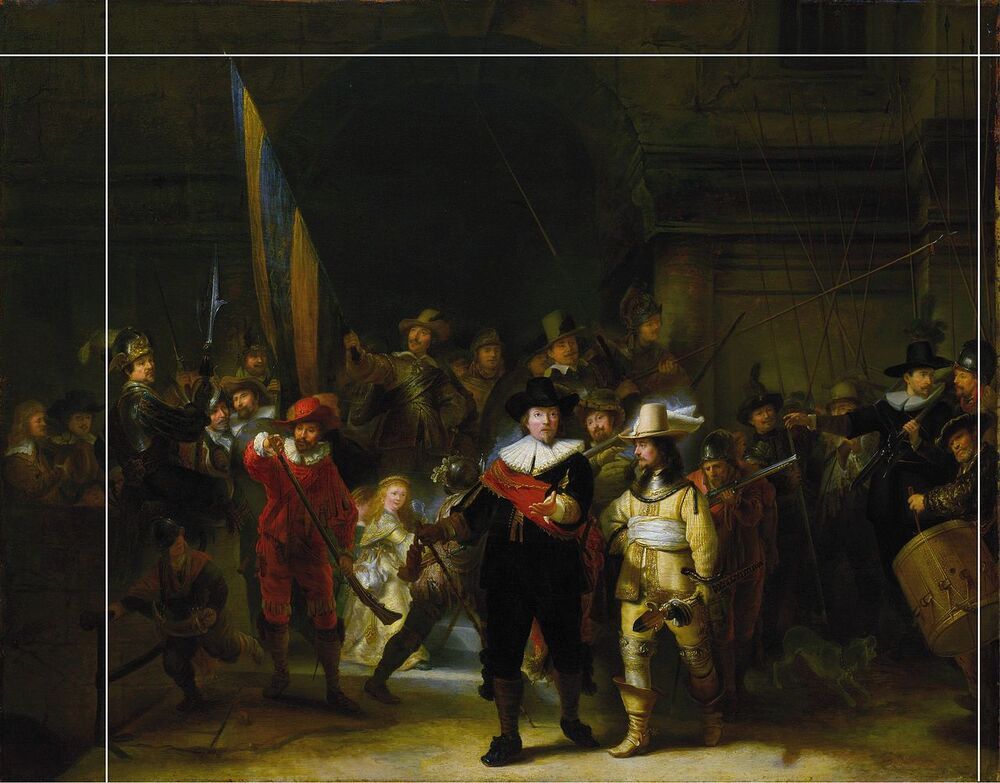

In 1642, the famous Dutch painter Rembrandt van Rijn completed a large painting called Militia Company of District II under the Command of Captain Frans Banninck Cocq. Today, the painting is commonly known as The Night Watch. It was painted at the height of the Dutch Golden Age, and The Night Watch brilliantly showcases that era.

The painting measures 363 cm × 437 cm (11.91 ft × 14.34 ft), so large that the figures in it are almost life-sized, but that's only the start of what makes it so special. Rembrandt made dramatic use of light and shadow and created a sense of motion in what would normally be a stationary military group portrait. Unfortunately, the painting was trimmed in 1715 to fit between two doors at Amsterdam City Hall.

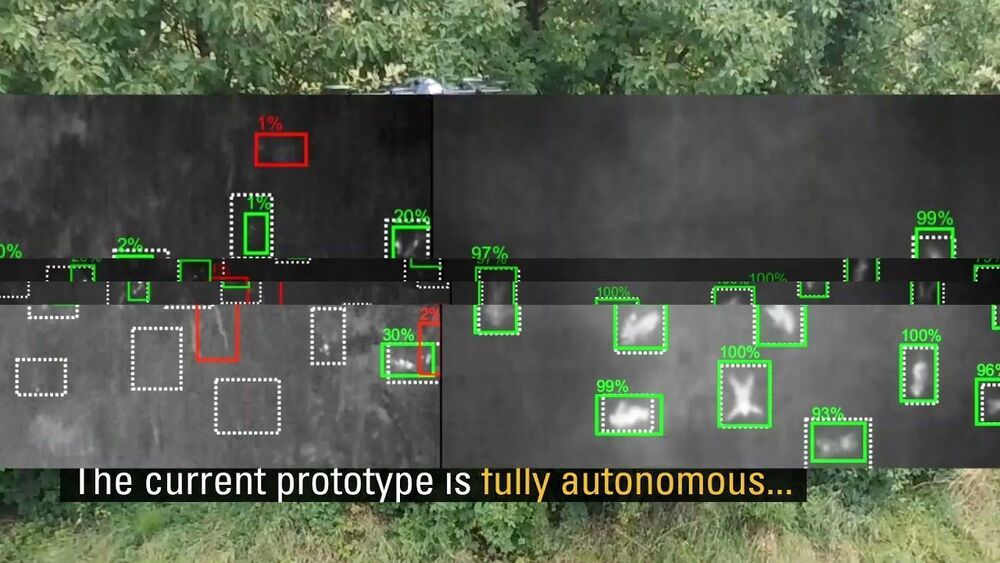

For over 300 years, the painting has been missing 60 cm (2 ft) from the left, 22 cm from the top, 12 cm from the bottom and 7 cm from the right. Now, computer software has restored the missing parts.