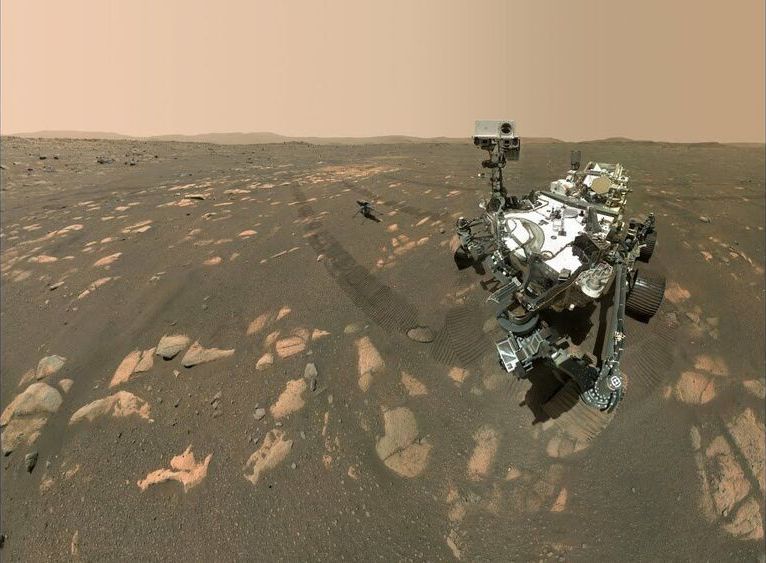

The challenges of making AI work at the edge (that is, making it reliable enough to do its job and then justifying the additional complexity and expense of putting it in our devices) are monumental. Existing AI can be inflexible, easily fooled, unreliable and biased. In the cloud, a model can be trained on the fly to get better; think about how Alexa improves over time. On a device, it must come pre-trained and be updated periodically. Yet improvements in chip technology in recent years have made real breakthroughs possible in how we experience AI, and the commercial demand for this sort of functionality is high.

AI is moving from data centers to devices, making everything from phones to tractors faster and more private. These newfound smarts also come with pitfalls.