Category: robotics/AI

Marines test Google’s latest military robot

The latest version of a walking, quadruped battlefield robot from Boston Dynamics, the military robotics maker owned by Google X, was tested by U.S. Marines last week.

Spot weighs about 70 kg, is electrically operated and walks on four hydraulically actuated legs. It’s controlled wirelessly by an operator who can be up to 500 meters away.

It underwent trials at Marine Corps Base Quantico in Virginia as part of the Marines’ evaluation of future military uses of robotic technology. In a series of missions, it was evaluated on different terrain, including hills, woodlands and urban areas.

First driverless pods to travel public roads arrive in the Netherlands

The WEpod will be the first self-driving electric shuttle to run in regular traffic, and take bookings via a dedicated app.

Elon Musk, CEO of Tesla and SpaceX, Wants to Change How (and Where) Humans Live

Making spaceships and electric supercars isn’t enough for Elon Musk. Meghan Daum meets the entrepreneur who wants to save the world.

The name sounds like a men’s cologne. Or a type of ox. It sounds possibly made up. But then, so much about Elon Musk seems the creation of a fiction writer—and not necessarily one committed to realism. At 44, Musk is both superstar entrepreneur and mad scientist. Sixteen years after cofounding a company called X.com that would, following a merger, go on to become PayPal, he’s launched the electric carmaker Tesla Motors and the aerospace manufacturer SpaceX, which are among the most closely watched—some would say obsessed-over—companies in the world. He has been compared to the Christian Grey character in the Fifty Shades of Grey movie, though not as often as he’s been called “the real Tony Stark,” referring to the playboy tech entrepreneur whose alter ego, Iron Man, rescues the universe from various manifestations of evil.

The Iron Man comparison is, strangely, as apt as it is hyperbolic, since Musk has the boyish air of a nascent superhero and says his ultimate aim is to save humanity from what he sees as its eventual and unavoidable demise—from any number of causes, carbon consumption high among them. (As it happens, he met with Robert Downey, Jr., to discuss the Tony Stark role, and his factory doubled as the villain’s hideaway in Iron Man 2.) To this end he’s building his own rockets, envisioning a future in which we colonize Mars, funding research aimed at keeping artificial intelligence beneficial to humanity, and making lithium-ion electric batteries that might, one day, put the internal-combustion engine out to pasture.

The Marines Are Sending This Robotic Dog Into Simulated Combat

The battlefield can be one of the most useful places for robots. And now, the US Marines are testing out Spot, a robo dog built by Boston Dynamics, to see how helpful the ’bot could be in combat.

Remember BigDog, also from Google-owned robotics company Boston Dynamics? Well, Spot is a tinier, more agile iteration: At 160 pounds, it’s hydraulically actuated with a sensor on its noggin that aids in navigation. It’s controlled by a laptop-connected game controller, which a hidden operator can use from up to 1,600 feet away. The four-legged all-terrain robo pup was revealed in February. Robots in combat aren’t new, but Spot signals a quieter, leaner alternative that hints at the strides made in this arena.

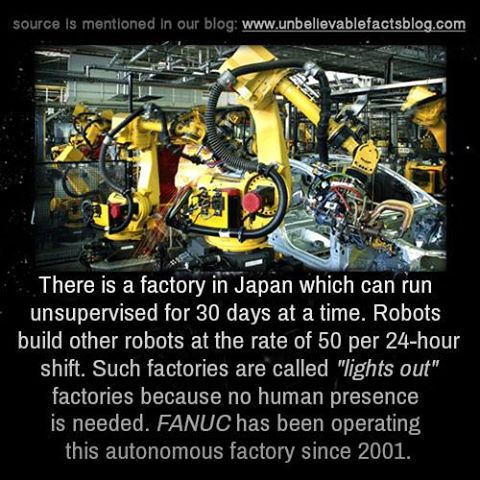

Atom-Sized Construction Could Shrink Future Gadgets

The U.S. military doesn’t just build big, scary tanks and giant warplanes; it’s also interested in teeny, tiny stuff. The Pentagon’s latest research project aims to improve today’s technologies by shrinking them down to microscopic size.

The recently launched Atoms to Product (A2P) program aims to develop atom-size materials to build state-of-the-art military and consumer products. These tiny manufacturing methods would work at scales 100,000 times smaller than those currently being used to build new technologies, according to the Defense Advanced Research Projects Agency, or DARPA.

The tiny, high-tech materials of the future could be used to build things like hummingbird-size drones and super-accurate (and super-small) atomic clocks, two projects already spearheaded by DARPA.

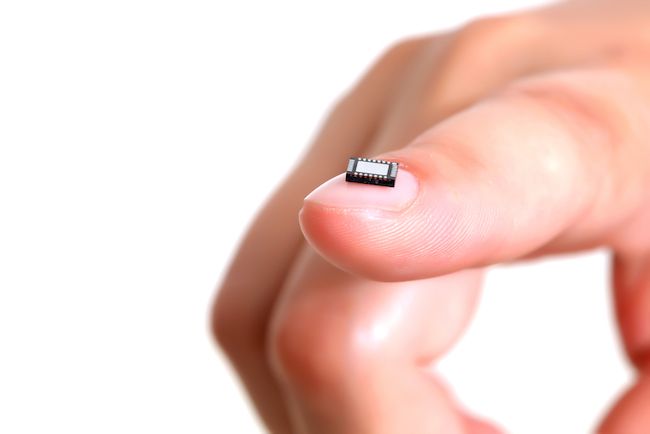

Researchers enable robots to see through solid walls with Wi-Fi (w/ Video)

(Phys.org) —Wi-Fi makes all kinds of things possible. We can send and receive messages, make phone calls, browse the Internet, even play games with people who are miles away, all without the cords and wires to tie us down. At UC Santa Barbara, researchers are now using this versatile, everyday signal to do something different and powerful: looking through solid walls and seeing every square inch of what’s on the other side. Built into robots, the technology has far-reaching possibilities.

“This is an exciting time to be doing this kind of research,” said Yasamin Mostofi, professor of electrical and computer engineering at UCSB. For the past few years, she and her team have been busy realizing this X-ray vision, enabling robots to see objects and humans behind thick walls through the use of radio frequency signals. The patented technology allows users to see the space on the other side and identify not only the presence of occluded objects, but also their position and geometry, without any prior knowledge of the area. Additionally, it has the potential to classify the material type of each occluded object such as human, metal or wood.

The combination of imaging technology and automated mobility can make these robots useful in situations where human access is difficult or risky, and the ability to determine what is in a given occluded area is important, such as search and rescue operations for natural or man-made disasters.

Pentagon’s Project ‘Avatar’: Same as the Movie, but With Robots Instead of Aliens

Soldiers practically inhabiting the mechanical bodies of androids, who will take the humans’ place on the battlefield. Or sophisticated tech that spots a powerful laser ray, then stops it from obliterating its target.

If you’ve got Danger Room’s taste in movies, you’ve probably seen both ideas on the big screen. Now Darpa, the Pentagon’s far-out research arm, wants to bring ’em into the real world.

In the agency’s $2.8 billion budget for 2013, unveiled on Monday, they’ve allotted $7 million for a project titled “Avatar.” The project’s ultimate goal, not surprisingly, sounds a lot like the plot of the same-named (but much more expensive) flick.

Why we really should ban autonomous weapons: a response

We welcome Sam Wallace’s contribution to the discussion on a proposed ban on offensive autonomous weapons. This is a complex issue and there are interesting arguments on both sides that need to be weighed up carefully.

His article, written as a response to an open letter signed by more than 2,500 AI and robotics researchers, begins with the claim that such a ban is as “unrealistic as the broad relinquishment of nuclear weapons would have been at the height of the cold war.”

This argument misses the mark. First, the letter proposes not unilateral relinquishment but an arms control treaty. Second, nuclear weapons were successfully curtailed by a series of arms-control treaties during the cold war, without which we might not have been here to have this conversation.

Microsoft demos English-to-Chinese universal translator that keeps your voice and accent

Alternative World News Network.

At an event in China, Microsoft Research chief Rick Rashid has demonstrated a real-time English-to-Mandarin speech-to-speech translation engine. Not only is the translation very accurate, but the software also preserves the user’s accent and intonation. We’re not just talking about a digitized, robotic translator here — this is firmly within the realms of Doctor Who or Star Trek universal translation.

The best way to appreciate this technology is to watch the video below. The first six minutes or so is Rick Rashid explaining the fundamental difficulty of computer translation, and then the last few minutes actually demonstrate the software’s English-to-Mandarin speech-to-speech translation engine. Sadly I don’t speak Chinese, so I can’t attest to the veracity of the translation, but the audience — some 2,000 Chinese students — seems rather impressed. A professional English/Chinese interpreter also remarked to me that the computer translation is surprisingly good; not quite up to the level of human translation, but it’s getting close.